5.27.2. OpenStack Neutron L3 HA Test Plan

5.27.2.1. Test Plan

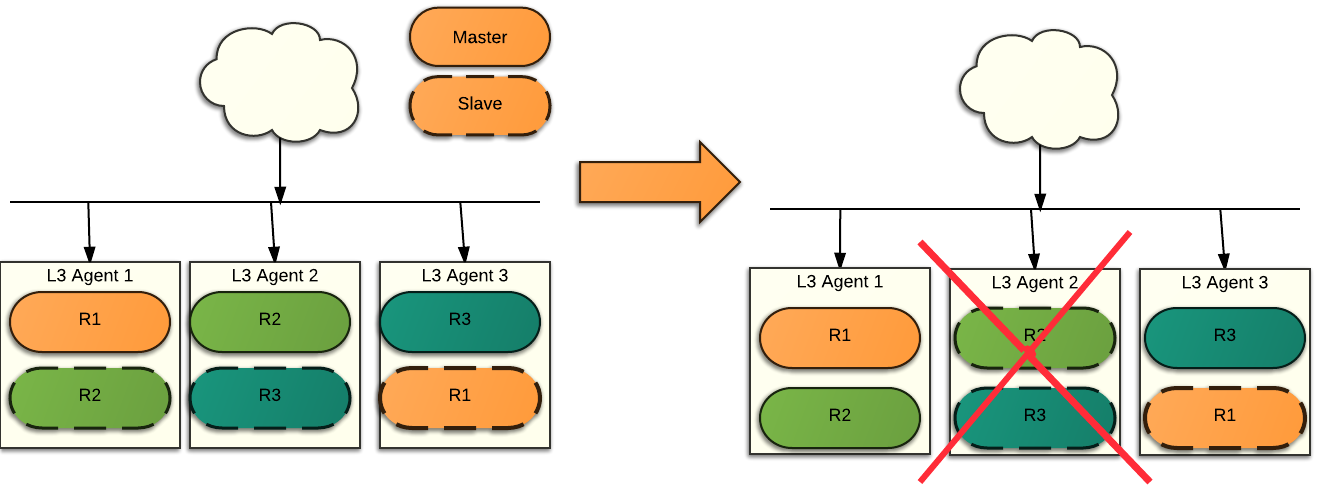

The purpose of this section is to describe scenarios for testing L3 HA. The most important aspect is the number of packets lost during a restart of the L3 agent or of a whole controller. The second aspect is the number of routers that can move from one agent to another without any of them falling into an unmanaged state.

5.27.2.1.1. Test Environment

5.27.2.1.1.1. Preparation

This test plan is performed against an existing OpenStack cloud.

5.27.2.1.1.2. Environment description

The environment description includes the hardware specification of the servers, network parameters, the operating system, and OpenStack deployment characteristics.

5.27.2.1.1.2.1. Hardware

This section lists all types of hardware nodes.

| Parameter | Value | Comments |
|---|---|---|
| model | e.g. Supermicro X9SRD-F | |
| CPU | e.g. 6 x Intel(R) Xeon(R) CPU E5-2620 v2 @ 2.10GHz | |
| role | e.g. compute or network | |
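The CPU row can be filled in from /proc/cpuinfo on each node. The following is a minimal sketch, assuming a Linux node; cpu_summary is a hypothetical helper name. It counts logical CPUs, so on a host with Hyper-Threading enabled the count is twice the number of physical cores.

```shell
# Hypothetical helper for the "CPU" row: prints "<count> x <model name>".
# Reads a Linux /proc/cpuinfo-style file (default: /proc/cpuinfo).
# Counts logical CPUs, so Hyper-Threading siblings are counted separately.
cpu_summary() {
    cpuinfo="${1:-/proc/cpuinfo}"
    awk -F': *' '
        /^model name/ { count++; model = $2 }   # one "model name" line per logical CPU
        END { if (count) printf "%d x %s\n", count, model }
    ' "$cpuinfo"
}
```

Running cpu_summary on a node prints one line in the same "N x model" form used in the table.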

5.27.2.1.1.2.2. Network

This section lists the interfaces and network parameters. For complicated cases, this section may include a topology diagram and switch parameters.

| Parameter | Value | Comments |
|---|---|---|
| network role | e.g. provider or public | |
| card model | e.g. Intel | |
| driver | e.g. ixgbe | |
| speed | e.g. 10G or 1G | |
| MTU | e.g. 9000 | |
| offloading modes | e.g. default | |
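Most of the network parameters can be read with standard Linux tools: ethtool -i IFACE reports the driver, ethtool IFACE the link speed, ethtool -k IFACE the offloading modes, and ip -o link show IFACE the MTU. The functions below are a hypothetical sketch that extracts the driver, speed, and MTU from that output; they assume the usual ethtool and iproute2 output formats, which may differ slightly between versions.

```shell
# Hypothetical helpers for the network table; each parser reads tool output on stdin.

# Driver name from `ethtool -i IFACE` (line "driver: ixgbe").
iface_driver() {
    awk -F': ' '$1 == "driver" { print $2; exit }'
}

# Link speed from `ethtool IFACE` (line "Speed: 10000Mb/s").
iface_speed() {
    awk '/Speed:/ { sub(/.*Speed: */, ""); print; exit }'
}

# MTU from `ip -o link show IFACE` (the token that follows "mtu").
iface_mtu() {
    awk '{ for (i = 1; i < NF; i++) if ($i == "mtu") { print $(i + 1); exit } }'
}

# One summary line per interface; needs ethtool and iproute2 on the node.
describe_iface() {
    iface="$1"
    printf 'iface=%s driver=%s speed=%s mtu=%s\n' "$iface" \
        "$(ethtool -i "$iface" | iface_driver)" \
        "$(ethtool "$iface" | iface_speed)" \
        "$(ip -o link show "$iface" | iface_mtu)"
}
```

Offloading modes are easier to record as the raw ethtool -k output, since the list of features depends on the driver.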

5.27.2.1.1.2.3. Software

This section describes the installed software.

| Parameter | Value | Comments |
|---|---|---|
| OS | e.g. Ubuntu 14.04.3 | |
| OpenStack | e.g. Liberty | |
| Hypervisor | e.g. KVM | |
| Neutron plugin | e.g. ML2 + OVS | |
| L2 segmentation | e.g. VLAN or VXLAN or GRE | |
| virtual routers | HA | |
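The "virtual routers" row reflects whether HA routers are in use. In Neutron, the l3_ha option in the [DEFAULT] section of neutron.conf makes new routers HA by default (the test cases below also create routers explicitly with --ha True). The sketch below is a hypothetical helper, ini_default_opt, that reads one option from the [DEFAULT] section of an INI file; it assumes simple "key = value" lines and the usual /etc/neutron/neutron.conf location.

```shell
# Hypothetical helper: print the last value set for option $2 in the
# [DEFAULT] section of INI file $1 (prints nothing if the option is unset).
ini_default_opt() {
    awk -v key="$2" '
        /^[ \t]*[#;]/ { next }                  # skip comment lines
        /^[ \t]*\[/ {                           # section header: track [DEFAULT]
            s = $0; gsub(/[ \t]/, "", s)
            in_default = (s == "[DEFAULT]"); next
        }
        in_default && index($0, "=") {
            n = index($0, "=")
            k = substr($0, 1, n - 1); v = substr($0, n + 1)
            gsub(/^[ \t]+|[ \t]+$/, "", k)      # trim key and value
            gsub(/^[ \t]+|[ \t]+$/, "", v)
            if (k == key) { value = v; found = 1 }
        }
        END { if (found) print value }
    ' "$1"
}
```

For example, ini_default_opt /etc/neutron/neutron.conf l3_ha prints the configured value, or nothing if the option is left at its default.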

5.27.2.1.2. Test Case 1: Comparative analysis of metrics with and without L3 agent restarts

5.27.2.1.2.1. Description

Shaker is able to deploy OpenStack instances and networks in different topologies. For L3 HA, the most important scenarios are those that check the connection between VMs in different networks (L3 east-west) and the connection via floating IP (L3 north-south).

The following tests should be executed:

1. OpenStack L3 East-West

   - This scenario launches pairs of VMs in different networks connected to one router (L3 east-west).

2. OpenStack L3 East-West Performance

   - This scenario launches 1 pair of VMs in different networks connected to one router (L3 east-west). The VMs are hosted on different compute nodes.

3. OpenStack L3 North-South

   - This scenario launches pairs of VMs on different compute nodes. The VMs are in different networks connected via different routers; the master VM accesses the slave VM by floating IP.

4. OpenStack L3 North-South UDP

5. OpenStack L3 North-South Performance

6. OpenStack L3 North-South Dense

   - This scenario launches pairs of VMs on one compute node. The VMs are in different networks connected via different routers; the master VM accesses the slave VM by floating IP.

For scenarios 1, 2, 3, and 6 (L3 East-West, L3 East-West Performance, L3 North-South, and L3 North-South Dense), results were also collected for L3 agent restarts with the L3 HA option disabled and standard router rescheduling enabled.

While the Shaker tests were running, the restart.sh and restart_not_ha.sh scripts were executed to restart the L3 agents.
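The six Shaker scenarios listed above can be run back to back with a small wrapper. This is a sketch under assumptions: the scenario identifiers (openstack/full_l3_east_west and so on) are assumed to match the Shaker scenario catalog and should be checked against the installed version, the server endpoint is a placeholder, and run_shaker_suite is a hypothetical name. The restart scripts are not reproduced here; they would run in a separate shell while the suite executes.

```shell
# Hypothetical wrapper around the Shaker CLI for the six L3 scenarios.
# Scenario identifiers are assumptions; verify them for your Shaker version.
SHAKER="${SHAKER:-shaker}"                              # SHAKER=echo gives a dry run
SERVER_ENDPOINT="${SERVER_ENDPOINT:-192.0.2.10:5999}"   # placeholder host:port

# Same order as the numbered scenario list (1..6).
SCENARIOS="full_l3_east_west perf_l3_east_west full_l3_north_south
udp_l3_north_south perf_l3_north_south dense_l3_north_south"

# Run every scenario once; $1 labels the reports, e.g. "ha" or "not_ha".
run_shaker_suite() {
    label="$1"
    for s in $SCENARIOS; do
        "$SHAKER" --server-endpoint "$SERVER_ENDPOINT" \
            --scenario "openstack/$s" \
            --report "${label}_${s}.html" || return 1
    done
}
```

Setting SHAKER=echo prints the six commands instead of running them, which is a cheap way to check the scenario list before a long run.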

5.27.2.1.2.2. List of performance metrics

| Priority | Value | Measurement Units | Description |
|---|---|---|---|
| 1 | Latency | ms | Network latency |
| 1 | TCP bandwidth | Mbits/s | TCP network bandwidth |
| 2 | UDP bandwidth | packets per sec | Number of 32-byte UDP packets per second |
| 2 | TCP retransmits | packets per sec | Number of retransmitted TCP packets per second |

5.27.2.1.3. Test Case 2: Rally tests execution

5.27.2.1.3.1. Description

Rally checks the ability of OpenStack to perform simple operations, such as create-delete and create-update, at scale.

L3 HA has a restriction of 255 routers per HA network per tenant. At the moment, there is no way to create a new HA network for a tenant when the number of VIPs exceeds this limit. For this reason, the number of tenants was increased for some tests (NeutronNetworks.create_and_list_router). The most important results come from the test_create_delete_routers test, as it can catch possible race conditions during the creation and deletion of HA routers, HA networks, and HA interfaces. Several known bugs related to this have already been fixed upstream. To uncover further issues, test_create_delete_routers was run multiple times with different concurrency levels.
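The 255-router limit translates directly into a minimum number of tenants for a given number of HA routers. The VRRP virtual router ID is an 8-bit field (values 1 to 255), so one HA network can carry at most 255 routers, and a test that needs R routers has to spread them over at least ceil(R / 255) tenants. A minimal sketch of that arithmetic; tenants_needed is a hypothetical helper:

```shell
# Minimum number of tenants that can host a given number of HA routers,
# assuming at most 255 HA routers per tenant HA network (VRRP IDs 1..255).
MAX_HA_ROUTERS_PER_TENANT=255

tenants_needed() {
    routers="$1"
    # Integer ceiling division: ceil(routers / 255).
    echo $(( (routers + MAX_HA_ROUTERS_PER_TENANT - 1) / MAX_HA_ROUTERS_PER_TENANT ))
}
```

For example, tenants_needed 1000 prints 4, because three tenants can hold at most 765 HA routers.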

5.27.2.1.3.2. List of performance metrics

| Priority | Measurement Units | Description |
|---|---|---|
| 1 | Number of failed tests | Number of tests that failed during Rally test execution |
| 2 | Concurrency | Number of tests executed in parallel |
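Re-running the create/delete routers test at several concurrency levels can be scripted around rally task start and its --task-args option. This is a sketch under assumptions: the task file name is hypothetical, and the task file is assumed to be a template that reads a concurrency argument (for example, {{ concurrency }} in its runner section). A non-zero exit status from rally is only a coarse signal; per-iteration failures still have to be read from the Rally report.

```shell
# Hypothetical concurrency sweep for the router create/delete Rally task.
RALLY="${RALLY:-rally}"                                     # RALLY=echo gives a dry run
TASK_FILE="${TASK_FILE:-create_delete_routers_task.yaml}"   # hypothetical task template

# Run the task once per concurrency value passed as an argument and count
# the runs for which the rally command exited with a non-zero status.
sweep_concurrency() {
    failed=0
    for c in "$@"; do
        echo "== concurrency $c =="
        if ! "$RALLY" task start "$TASK_FILE" --task-args "{\"concurrency\": $c}"; then
            echo "rally task failed at concurrency $c"
            failed=$((failed + 1))
        fi
    done
    echo "failed runs: $failed"
}
```

For example, sweep_concurrency 1 10 50 runs the same task three times with increasing parallelism.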

5.27.2.1.4. Test Case 3: Manual destruction test: Ping to external network from VM during reset of the primary (or non-primary) controller

5.27.2.1.4.1. Description

Scenario steps:

1. Create a router:

    neutron router-create routerHA --ha True

2. Set the gateway to the external network and add an interface:

    neutron router-gateway-set routerHA <ext_net_id>

    neutron router-interface-add routerHA <private_subnet_id>

3. Boot an instance in the private network:

    nova boot --image <image_id> --flavor <flavor_id> --nic net-id=<private_net_id> vm1

4. Log in to the VM using SSH or the VNC console.

5. Start ping 8.8.8.8 and check that no packets are lost.

6. Check which agent is active:

    neutron l3-agent-list-hosting-router <router_id>

7. Restart the node on which the active L3 agent runs:

    sudo shutdown -r now (or sudo reboot)

8. Wait until another agent becomes active and the restarted node recovers:

    neutron l3-agent-list-hosting-router <router_id>

9. Stop ping and check the number of packets that were lost.

10. Increase the number of routers and repeat steps 5-10.
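The number of lost packets in the ping step can be taken from the statistics that ping prints when it is stopped. A minimal sketch, assuming the iputils or BusyBox summary format; lost_packets is a hypothetical helper:

```shell
# Number of lost packets from ping's final statistics line (read from stdin),
# e.g. "120 packets transmitted, 112 received, 6% packet loss, time 119150ms".
# BusyBox ping prints "... 112 packets received ..." instead; both forms work
# because only the leading number of the first two comma-separated fields is used.
lost_packets() {
    awk -F', ' '/packets transmitted/ {
        split($1, sent, " ")      # "120 packets transmitted"
        split($2, recv, " ")      # "112 received" or "112 packets received"
        print sent[1] - recv[1]
        exit
    }'
}
```

For example, redirect the ping output to a file (ping 8.8.8.8 > ping.log), stop it with Ctrl-C once the failover has finished, and run lost_packets < ping.log.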

5.27.2.1.4.2. List of performance metrics

| Priority | Measurement Units | Description |
|---|---|---|
| 1 | Number of lost packets | Number of packets lost during the restart of the node |
| 2 | Number of routers | Number of routers existing in the environment |

5.27.2.1.5. Test Case 4: Manual destruction test: Ping from one VM to another VM in a different network while the L3 agent is banned

5.27.2.1.5.1. Description

Scenario steps:

1. Create a router:

    neutron router-create routerHA --ha True

2. Add interfaces for two internal networks:

    neutron router-interface-add routerHA <private_subnet1_id>

    neutron router-interface-add routerHA <private_subnet2_id>

3. Boot one instance in private net1 and one in net2:

    nova boot --image <image_id> --flavor <flavor_id> --nic net-id=<private_net1_id> vm1

    nova boot --image <image_id> --flavor <flavor_id> --nic net-id=<private_net2_id> vm2

4. Log in to VM1 using SSH or the VNC console.

5. Start pinging vm2_ip and check that no packets are lost.

6. Check which agent is active:

    neutron l3-agent-list-hosting-router <router_id>

7. Ban the active L3 agent:

    pcs resource ban p_neutron-l3-agent node-<id>

8. Wait until another agent becomes active:

    neutron l3-agent-list-hosting-router <router_id>

9. Clear the banned agent:

    pcs resource clear p_neutron-l3-agent node-<id>

10. Stop ping and check the number of packets that were lost.

11. Increase the number of routers and repeat steps 5-10.
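The check, ban, wait, and clear steps above can be partly automated. The sketch below assumes the table layout that neutron l3-agent-list-hosting-router prints for HA routers (columns id, host, admin_state_up, alive, ha_state) and that the host name reported by Neutron is the same node name that pcs resource ban expects; active_l3_host and wait_for_failover are hypothetical helpers.

```shell
# Print the host whose ha_state is "active" from the table printed by
# `neutron l3-agent-list-hosting-router <router_id>` (read from stdin).
active_l3_host() {
    awk -F'|' '
        NF < 3 { next }                          # skip +----+ border lines
        {
            for (i = 2; i < NF; i++) {           # trim every cell
                cell[i] = $i
                gsub(/^[ \t]+|[ \t]+$/, "", cell[i])
            }
        }
        !state_col {                             # header row: locate the columns
            for (i = 2; i < NF; i++) {
                if (cell[i] == "host") host_col = i
                if (cell[i] == "ha_state") state_col = i
            }
            next
        }
        cell[state_col] == "active" { print cell[host_col]; exit }
    '
}

# Poll every 5 s until a host other than $2 is active for router $1;
# print that host, or return 1 after $3 seconds (default 300).
wait_for_failover() {
    router="$1"; old_host="$2"; timeout="${3:-300}"
    elapsed=0
    while [ "$elapsed" -lt "$timeout" ]; do
        host=$(neutron l3-agent-list-hosting-router "$router" | active_l3_host)
        if [ -n "$host" ] && [ "$host" != "$old_host" ]; then
            echo "$host"
            return 0
        fi
        sleep 5
        elapsed=$((elapsed + 5))
    done
    return 1
}

# Example flow (ROUTER_ID is a placeholder):
#   old=$(neutron l3-agent-list-hosting-router "$ROUTER_ID" | active_l3_host)
#   pcs resource ban p_neutron-l3-agent "$old"
#   wait_for_failover "$ROUTER_ID" "$old"
#   pcs resource clear p_neutron-l3-agent "$old"
```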

5.27.2.1.5.2. List of performance metrics

| Priority | Measurement Units | Description |
|---|---|---|
| 1 | Number of lost packets | Number of packets lost while the L3 agent was banned |
| 2 | Number of routers | Number of routers existing in the environment |

5.27.2.1.6. Test Case 5: Manual destruction test: iperf UDP testing between VMs in different networks while the L3 agent is banned

5.27.2.1.6.1. Description

Scenario steps:

1. Create two VMs, VM1 and VM2, in different networks.

2. Log in to VM1 using SSH or the VNC console and run:

    iperf -s -u

3. Log in to VM2 using SSH or the VNC console and run:

    iperf -c vm1_ip -p 5001 -t 60 -i 10 --bandwidth 30M --len 64 -u

4. Check that the loss is less than 1%.

5. Check which agent is active:

    neutron l3-agent-list-hosting-router <router_id>

6. Run the command from step 3 again.

7. Ban the active L3 agent:

    pcs resource ban p_neutron-l3-agent node-<id>

8. Check the results of the iperf command and clear the banned L3 agent:

    pcs resource clear p_neutron-l3-agent node-<id>

9. Increase the number of routers and repeat steps 3-8.
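The iperf client command above fixes the offered load, so the expected datagram count and the 1% loss budget can be worked out in advance. A minimal sketch of that arithmetic, assuming 30M means 30,000,000 bit/s and that the rate applies to the 64-byte payload only (UDP, IP, and Ethernet headers not counted); the variable names are illustrative:

```shell
# Worked arithmetic for: iperf -c vm1_ip ... -t 60 --bandwidth 30M --len 64 -u
# Assumptions: 30M = 30,000,000 bit/s; rate counted over the 64-byte payload only.
BANDWIDTH_BPS=30000000   # --bandwidth 30M
PAYLOAD_BYTES=64         # --len 64
DURATION_S=60            # -t 60

BITS_PER_DATAGRAM=$(( PAYLOAD_BYTES * 8 ))                     # 512 bits
PPS=$(( BANDWIDTH_BPS / BITS_PER_DATAGRAM ))                   # datagrams per second, rounded down
TOTAL=$(( BANDWIDTH_BPS * DURATION_S / BITS_PER_DATAGRAM ))    # datagrams in the whole run
MAX_LOST=$(( TOTAL / 100 ))                                    # 1% loss budget

echo "pps=$PPS total=$TOTAL max_lost_at_1pct=$MAX_LOST"
# prints: pps=58593 total=3515625 max_lost_at_1pct=35156
```

Under these assumptions, a 60-second run offers about 3.5 million datagrams, so the 1% threshold allows roughly 35,000 lost datagrams. At about 58,600 datagrams per second, a traffic interruption longer than about 0.6 s during failover would exceed the threshold on its own.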

5.27.2.1.6.2. List of performance metrics

| Priority | Value | Measurement Units | Description |
|---|---|---|---|
| 1 | UDP bandwidth | % | Percentage of 64-byte UDP packets lost |
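The loss percentage can be read from the Lost/Total Datagrams column of the iperf2 UDP report, whose last field is the percentage in parentheses, e.g. "(0.057%)". A minimal sketch, assuming that iperf2 report format; udp_loss_pct and loss_below are hypothetical helpers, and the last matching line (the whole-run summary) is used.

```shell
# Print the loss percentage from the last iperf2 UDP report line on stdin,
# i.e. the "(x%)" field ending lines such as
# "[  3]  0.0-60.0 sec  ...  0.012 ms 2000/3515625 (0.057%)".
udp_loss_pct() {
    awk '{
            for (i = 1; i <= NF; i++)
                if ($i ~ /^\([0-9.]+%\)$/) { pct = $i; gsub(/[()%]/, "", pct); found = 1 }
        }
        END { if (found) print pct }'
}

# Succeed if the reported loss is below $1 percent (default 1).
loss_below() {
    pct=$(udp_loss_pct)
    [ -n "$pct" ] || return 1                  # no report line found
    awk -v p="$pct" -v max="${1:-1}" 'BEGIN { exit !((p + 0) < (max + 0)) }'
}
```

For example, if the client output includes the server report, piping it into loss_below 1 succeeds exactly when the measured loss stays under the 1% threshold.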

5.27.2.2. Reports

- Test plan execution reports: