Magnum User Guide¶

This guide is intended for users who use Magnum to deploy and manage clusters of hosts for a Container Orchestration Engine. It describes the infrastructure that Magnum creates and how to work with it.

Sections 1-3 describe Magnum itself, including an overview, the CLI and the Horizon interface. Sections 4-9 describe the Container Orchestration Engines (COEs) supported, along with a guide on how to select the one that best meets your needs and how to develop a driver for a new COE. Sections 10-15 describe the low-level OpenStack infrastructure that is created and managed by Magnum to support the COEs.

Overview¶

Magnum is an OpenStack API service developed by the OpenStack Containers Team making container orchestration engines (COE) such as Docker Swarm, Kubernetes and Apache Mesos available as first class resources in OpenStack.

Magnum uses Heat to orchestrate an OS image that contains Docker and the COE, and runs that image on either virtual machines or bare metal in a cluster configuration.

Magnum offers complete life-cycle management of COEs in an OpenStack environment, integrated with other OpenStack services for a seamless experience for OpenStack users who wish to run containers in an OpenStack environment.

The following are a few salient features of Magnum:

- Standard API-based complete life-cycle management for container clusters
- Multi-tenancy for container clusters
- Choice of COE: Kubernetes, Swarm, Mesos, DC/OS
- Choice of container cluster deployment model: VM or bare metal
- Keystone-based multi-tenant security and auth management
- Neutron-based multi-tenant network control and isolation
- Cinder-based volume service for containers
- Integrated with OpenStack: SSO experience for cloud users
- Secure container cluster access (TLS enabled)

More details: Magnum Project Wiki

ClusterTemplate¶

A ClusterTemplate (previously known as BayModel) is a collection of parameters to describe how a cluster can be constructed. Some parameters are relevant to the infrastructure of the cluster, while others are for the particular COE. In a typical workflow, a user would create a ClusterTemplate, then create one or more clusters using the ClusterTemplate. A cloud provider can also define a number of ClusterTemplates and provide them to the users. A ClusterTemplate cannot be updated or deleted if a cluster using this ClusterTemplate still exists.

The definition and usage of the parameters of a ClusterTemplate are as follows. They are loosely grouped as: mandatory, infrastructure, COE specific.

- <name>

Name of the ClusterTemplate to create. The name does not have to be unique. If multiple ClusterTemplates have the same name, you will need to use the UUID to select the ClusterTemplate when creating a cluster or when updating or deleting a ClusterTemplate. If a name is not specified, a random name will be generated using a string and a number, for example “pi-13-model”.

- --coe <coe>

Specify the Container Orchestration Engine to use. Supported COEs include ‘kubernetes’, ‘swarm’, ‘mesos’. If your environment has additional cluster drivers installed, refer to the cluster driver documentation for the new COE names. This is a mandatory parameter and there is no default value.

- --image <image>

The name or UUID of the base image in Glance to boot the servers for the cluster. The image must have the attribute ‘os_distro’ defined as appropriate for the cluster driver. For the currently supported images, the os_distro names are:

| COE | os_distro |
|---|---|
| Kubernetes | fedora-atomic, coreos |
| Swarm | fedora-atomic |
| Mesos | ubuntu |

This is a mandatory parameter and there is no default value. Note that the os_distro attribute is case sensitive.

- --keypair <keypair>

The name of the SSH keypair to configure in the cluster servers for ssh access. You will need the key to be able to ssh to the servers in the cluster. The login name is specific to the cluster driver. If a keypair is not provided in the ClusterTemplate, it will be required at cluster creation. This value will be overridden by any keypair value that is provided during cluster creation.

- --external-network <external-network>

The name or network ID of a Neutron network to provide connectivity to the external internet for the cluster. This network must be an external network, i.e. its attribute ‘router:external’ must be ‘True’. The servers in the cluster will be connected to a private network and Magnum will create a router between this private network and the external network. This allows the servers to download images, access the discovery service, etc., and the containers to install packages, etc. In the opposite direction, floating IPs will be allocated from the external network to provide access from the external internet to the servers and the container services hosted in the cluster. This is a mandatory parameter and there is no default value.

- --public

Access to a ClusterTemplate is normally limited to the admin, the owner or users within the same tenant as the owner. Setting this flag makes the ClusterTemplate public and accessible by other users. The default is not public.

- --server-type <server-type>

The servers in the cluster can be VM or baremetal. This parameter selects the type of server to create for the cluster. The default is ‘vm’. Possible values are ‘vm’, ‘bm’.

- --network-driver <network-driver>

The name of a network driver for providing the networks for the containers. Note that this is different and separate from the Neutron network for the cluster. The operation and networking model are specific to the particular driver; refer to the Networking section for more details. Supported network drivers and the default driver are:

| COE | Network-Driver | Default |
|---|---|---|
| Kubernetes | flannel, calico | flannel |
| Swarm | docker, flannel | flannel |
| Mesos | docker | docker |

Note that the network driver name is case sensitive.

- --volume-driver <volume-driver>

The name of a volume driver for managing the persistent storage for the containers. The supported functionality is specific to the driver. Supported volume drivers and the default driver are:

| COE | Volume-Driver | Default |
|---|---|---|
| Kubernetes | cinder | No Driver |
| Swarm | rexray | No Driver |
| Mesos | rexray | No Driver |

Note that the volume driver name is case sensitive.

- --dns-nameserver <dns-nameserver>

The DNS nameserver for the servers and containers in the cluster to use. This is configured in the private Neutron network for the cluster. The default is ‘8.8.8.8’.

- --flavor <flavor>

The nova flavor id for booting the node servers. The default is ‘m1.small’. This value can be overridden at cluster creation.

- --master-flavor <master-flavor>

The nova flavor id for booting the master or manager servers. The default is ‘m1.small’. This value can be overridden at cluster creation.

- --http-proxy <http-proxy>

The address of a proxy to use when direct http access from the servers to sites on the external internet is blocked. This may happen in certain countries or enterprises, and the proxy allows the servers and containers to access these sites. The format is a URL including a port number. The default is ‘None’.

- --https-proxy <https-proxy>

The address of a proxy to use when direct https access from the servers to sites on the external internet is blocked. This may happen in certain countries or enterprises, and the proxy allows the servers and containers to access these sites. The format is a URL including a port number. The default is ‘None’.

- --no-proxy <no-proxy>

When a proxy server is used, some sites should not go through the proxy and should be accessed normally. In this case, you can specify these sites as a comma-separated list of IPs. The default is ‘None’.

- --docker-volume-size <docker-volume-size>

If specified, container images will be stored in a cinder volume of the specified size in GB. Each cluster node will have a volume of this size attached. If not specified, images will be stored in the compute instance’s local disk. For the ‘devicemapper’ storage driver, a volume must be specified and the minimum size is 3GB. For the ‘overlay’ and ‘overlay2’ storage drivers, the minimum size is 1GB, or None (no volume). This value can be overridden at cluster creation.

- --docker-storage-driver <docker-storage-driver>

The name of a driver to manage the storage for the images and the container’s writable layer. The default is ‘devicemapper’.

- --labels <KEY1=VALUE1,KEY2=VALUE2;KEY3=VALUE3…>

Arbitrary labels in the form of key=value pairs. The accepted keys and valid values are defined in the cluster drivers. They are used as a way to pass additional parameters that are specific to a cluster driver. Refer to the subsection on labels for a list of the supported key/value pairs and their usage. The value can be overridden at cluster creation.

- --tls-disabled

Transport Layer Security (TLS) is normally enabled to secure the cluster. In some cases, users may want to disable TLS in the cluster, for instance during development or to troubleshoot certain problems. Specifying this parameter will disable TLS so that users can access the COE endpoints without a certificate. The default is TLS enabled.

- --registry-enabled

Docker images by default are pulled from the public Docker registry, but in some cases, users may want to use a private registry. This option provides an alternative registry based on the Registry V2: Magnum will create a local registry in the cluster backed by swift to host the images. Refer to Docker Registry 2.0 for more details. The default is to use the public registry.

- --master-lb-enabled

Since multiple masters may exist in a cluster, a load balancer is created to provide the API endpoint for the cluster and to direct requests to the masters. In some cases, such as when the LBaaS service is not available, this option can be set to ‘false’ to create a cluster without the load balancer. In this case, one of the masters will serve as the API endpoint. The default is ‘true’, i.e. to create the load balancer for the cluster.
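The parameter rules above can be checked before a request is sent. The following is a minimal sketch, not part of Magnum: it assembles the argument list for the `openstack coe cluster template create` command from a dictionary, and applies two rules stated in this section (the mandatory parameters, and the minimum docker volume size per storage driver). The function name, the client-side checks and the image name `fedora-atomic-latest` are illustrative assumptions.

```python
import shlex

# Mandatory ClusterTemplate parameters, as described in the list above.
MANDATORY = ("coe", "image", "external-network")

# Minimum docker volume size in GB per storage driver, as stated above.
MIN_VOLUME_GB = {"devicemapper": 3, "overlay": 1, "overlay2": 1}


def build_template_command(name, params, flags=()):
    """Return the argv list for 'openstack coe cluster template create'.

    params maps option names (without leading dashes) to values;
    docker-volume-size, if given, must be an integer number of GB.
    flags lists boolean options such as 'public' or 'tls-disabled'.
    This is a hypothetical helper, not a Magnum API.
    """
    missing = [key for key in MANDATORY if not params.get(key)]
    if missing:
        raise ValueError("missing mandatory parameters: " + ", ".join(missing))

    # 'devicemapper' is the documented default storage driver.
    driver = params.get("docker-storage-driver", "devicemapper")
    size = params.get("docker-volume-size")
    if driver == "devicemapper" and size is None:
        raise ValueError("devicemapper requires --docker-volume-size")
    if size is not None and size < MIN_VOLUME_GB.get(driver, 1):
        raise ValueError("docker volume too small for " + driver)

    argv = ["openstack", "coe", "cluster", "template", "create", name]
    for key, value in params.items():
        argv += ["--" + key, str(value)]
    argv += ["--" + flag for flag in flags]
    return argv


cmd = build_template_command(
    "mytemplate",
    {
        "coe": "kubernetes",
        "image": "fedora-atomic-latest",  # hypothetical Glance image name
        "external-network": "public",
        "docker-volume-size": 5,
    },
    flags=("public",),
)
print(shlex.join(cmd))
```

Magnum itself validates the request on the server side; a pre-check like this only gives earlier feedback and may be stricter or looser than the installed release.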

Labels¶

Labels are a general method to specify supplemental parameters that are specific to certain COEs or associated with certain options. Their format is a key/value pair and their meaning is interpreted by the drivers that use them. The drivers do validate the key/value pairs. Their usage is explained in detail in the appropriate sections; however, since there are many possible labels, the following table provides a summary to help give a clearer picture. The label keys in the table are linked to more details elsewhere in the user guide.

| label key | label value | default |
|---|---|---|
| | IPv4 CIDR | 10.100.0.0/16 |
| | | vxlan |
| | size of subnet to assign to node | 24 |
| | | false |
| | | “” |
| | | “” |
| | (directory name) | “” |
| | (file name) | “” |
| | | false |
| | see below | see below |
| | | true |
| | | false |
| | see below | see below |
| | | true |
| | see below | see below |
| | (rules CM name) | “” |
| | | spread |
| | see below | see below |
| | see below | see below |
| | | false |
| | (any string) | “admin” |
| | see below | see below |
| | see below | see below |
| | see below | see below |
| | see below | see below |
| | see below | see below |
| | see below | see below |
| | see below | see below |
| | see below | see below |
| | | true |
| | see below | see below |
| | see below | see below |
| | | false |
| | see below | see below |
| | see below | see below |
| | see below | see below |
| | etcd storage volume size | 0 |
| | see below | see below |
| | see below | “” |
| | AZ for the cluster nodes | “” |
| | see below | false |
| | see below | “” |
| | see below | “ingress” |
| | see below | see below |
| | see below | see below |
| | see below | see below |
| | extra kubelet args | “” |
| | extra kubeapi args | “” |
| | extra kubescheduler args | “” |
| | extra kubecontroller args | “” |
| | extra kubeproxy args | “” |
| | | “cgroupfs” |
| | | see below |
| service_cluster_ip_range | IPv4 CIDR for k8s service portals | 10.254.0.0/16 |
| | see below | true |
| | see below | see below |
| | | false |
| | see below | “” |
| | see below | see below |
| | see below | see below |
| | see below | see below |
| | see below | see below |
| | | see below |
| | see below | “” |
| | | false |
| | see below | “draino” |
| | see below | see below |
| | | false |
| | see below | see below |
| | see below | see below |
| | see below | see below |
| | see below | see below |
| | see below | see below |
| | | true |
| | | see below |
| | | see below |
| | | “” |
| | see below | see below |
| | see below | see below |
| | see below | see below |
| | see below | see below |
| | see below | 10.100.0.0/16 |
| | see below | Off |
| | see below | “” |
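As an illustration of the key=value format accepted by the labels option, the sketch below splits a labels string into a dictionary. It assumes that both ‘,’ and ‘;’ separate pairs, as suggested by the option syntax shown in the ClusterTemplate section; the function name and the key `example_key` are hypothetical, and this is not the parser used by the Magnum client.

```python
import re


def parse_labels(text):
    """Split 'KEY1=VALUE1,KEY2=VALUE2;KEY3=VALUE3' into a dict of strings."""
    labels = {}
    for pair in re.split(r"[,;]", text):
        pair = pair.strip()
        if not pair:
            continue  # tolerate trailing or doubled separators
        key, sep, value = pair.partition("=")
        if not sep or not key.strip():
            raise ValueError("expected key=value, got %r" % pair)
        labels[key.strip()] = value.strip()
    return labels


# 'service_cluster_ip_range' appears in the table above; 'example_key' is made up.
print(parse_labels("service_cluster_ip_range=10.254.0.0/16;example_key=true"))
```

A value that itself contains a comma or semicolon would be split incorrectly by this simple approach.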

Cluster¶

A cluster (previously known as bay) is an instance of the ClusterTemplate of a COE. Magnum deploys a cluster by referring to the attributes defined in the particular ClusterTemplate as well as a few additional parameters for the cluster. Magnum deploys the orchestration templates provided by the cluster driver to create and configure all the necessary infrastructure. When ready, the cluster is a fully operational COE that can host containers.

Infrastructure¶

The infrastructure of the cluster consists of the resources provided by the various OpenStack services. Existing infrastructure, including infrastructure external to OpenStack, can also be used by the cluster, such as DNS, public network, public discovery service, Docker registry. The actual resources created depend on the COE type and the options specified; therefore you need to refer to the cluster driver documentation of the COE for specific details. For instance, the option ‘--master-lb-enabled’ in the ClusterTemplate will cause a load balancer pool along with the health monitor and floating IP to be created. It is important to distinguish resources at the IaaS level from resources at the PaaS level. For instance, the infrastructure networking in OpenStack IaaS is different and separate from the container networking in Kubernetes or Swarm PaaS.

Typical infrastructure includes the following.

- Servers

The servers host the containers in the cluster and these servers can be VMs or bare metal. VMs are provided by Nova. Since multiple VMs are hosted on a physical server, the VMs provide the isolation needed for containers between different tenants running on the same physical server. Bare metal servers are provided by Ironic and are used when peak performance with virtually no overhead is needed for the containers.

- Identity

Keystone provides the authentication and authorization for managing the cluster infrastructure.

- Network

Networking among the servers is provided by Neutron. Since COEs are currently not multi-tenant, isolation for multi-tenancy at the networking level is done by using a private network for each cluster. As a result, containers belonging to one tenant will not be accessible to containers or servers of another tenant. Other networking resources may also be used, such as load balancers and routers. Networking among containers can be provided by Kuryr if needed.

- Storage

Cinder provides the block storage that can be used to host the containers and as persistent storage for the containers.

- Security

Barbican provides the storage of secrets such as certificates used for Transport Layer Security (TLS) within the cluster.

Life cycle¶

The set of life cycle operations on the cluster is one of the key values that Magnum provides, enabling clusters to be managed painlessly on OpenStack. The current operations are the basic CRUD operations, but more advanced operations are under discussion in the community and will be implemented as needed.

NOTE The OpenStack resources created for a cluster are fully accessible to the cluster owner. Care should be taken when modifying or reusing these resources to avoid impacting Magnum operations in unexpected ways. For instance, if you launch your own Nova instance on the cluster private network, Magnum would not be aware of this instance. Therefore, the cluster-delete operation will fail because Magnum would not delete the extra Nova instance and the private Neutron network cannot be removed while a Nova instance is still attached.

NOTE Currently Heat nested templates are used to create the resources; therefore if an error occurs, you can troubleshoot through Heat. For more help on Heat stack troubleshooting, refer to the Magnum Troubleshooting Guide.

Create¶

NOTE The bay-<command> commands are the deprecated versions of these commands and are still supported in the current release. They will be removed in a future version. When using the ‘bay’ versions of the commands, the parameter names use the term bay in place of cluster. For example, ‘bay-create’ takes --baymodel as the baymodel parameter instead of --cluster-template.

The ‘cluster-create’ command deploys a cluster, for example:

openstack coe cluster create mycluster \
    --cluster-template mytemplate \
    --node-count 8 \
    --master-count 3

The ‘cluster-create’ operation is asynchronous; therefore you can initiate another ‘cluster-create’ operation while the current cluster is being created. If the cluster fails to be created, the infrastructure created so far may be retained or deleted depending on the particular orchestration engine. As a common practice, failed clusters are retained during development for troubleshooting, but are automatically deleted in production. The current cluster drivers use Heat templates and the resources of a failed ‘cluster-create’ are retained.

The definition and usage of the parameters for ‘cluster-create’ are as follows:

- <name>

Name of the cluster to create. If a name is not specified, a random name will be generated using a string and a number, for example “gamma-7-cluster”.

- --cluster-template <cluster-template>

The ID or name of the ClusterTemplate to use. This is a mandatory parameter. Once a ClusterTemplate is used to create a cluster, it cannot be deleted or modified until all clusters that use the ClusterTemplate have been deleted.

- --keypair <keypair>

The name of the SSH keypair to configure in the cluster servers for ssh access. You will need the key to be able to ssh to the servers in the cluster. The login name is specific to the cluster driver. If a keypair is not provided, Magnum will attempt to use the value in the ClusterTemplate. If the ClusterTemplate is also missing a keypair value, an error will be returned. The keypair value provided here will override the keypair value from the ClusterTemplate.

- --node-count <node-count>

The number of servers that will serve as nodes in the cluster. The default is 1.

- --master-count <master-count>

The number of servers that will serve as masters for the cluster. The default is 1. Set to more than 1 master to enable High Availability. If the option ‘--master-lb-enabled’ is specified in the ClusterTemplate, the master servers will be placed in a load balancer pool.

- --discovery-url <discovery-url>

The custom discovery url for node discovery. This is used by the COE to discover the servers that have been created to host the containers. The actual discovery mechanism varies with the COE. In some cases, Magnum fills in the server info in the discovery service. In other cases, if the discovery-url is not specified, Magnum will use the public discovery service at:

https://discovery.etcd.io

In this case, Magnum will generate a unique url here for each cluster and store the info for the servers.

- --timeout <timeout>

The timeout for cluster creation in minutes. The value expected is a positive integer and the default is 60 minutes. If the timeout is reached during cluster-create, the operation will be aborted and the cluster status will be set to ‘CREATE_FAILED’.

- --master-lb-enabled

Indicates whether created clusters should have a load balancer for master nodes or not.
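Because ‘cluster-create’ is asynchronous, a script that depends on the new cluster usually has to wait for it. The sketch below shows one way to poll for the outcome under a timeout expressed in minutes, mirroring the --timeout parameter. It is an illustration only: `get_status` is a caller-supplied function (for example, one that reads the status field from `openstack coe cluster show`), the status names ‘CREATE_IN_PROGRESS’ and ‘CREATE_COMPLETE’ are assumptions not stated in this guide, and ‘TIMED_OUT’ is a local sentinel rather than a Magnum status.

```python
import time


def wait_for_cluster(get_status, timeout_minutes=60, poll_seconds=10,
                     sleep=time.sleep, clock=time.monotonic):
    """Poll get_status() until creation finishes or the timeout expires.

    Returns the first status that is not 'CREATE_IN_PROGRESS' (assumed
    name), or the local sentinel 'TIMED_OUT' if the deadline passes.
    """
    if not isinstance(timeout_minutes, int) or timeout_minutes <= 0:
        raise ValueError("timeout must be a positive integer (minutes)")
    deadline = clock() + timeout_minutes * 60
    while True:
        status = get_status()
        if status != "CREATE_IN_PROGRESS":
            return status  # e.g. 'CREATE_FAILED' as described above
        if clock() >= deadline:
            return "TIMED_OUT"
        sleep(poll_seconds)
```

Passing `sleep` and `clock` as arguments keeps the loop easy to exercise without real delays.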

List¶

The ‘cluster-list’ command lists all the clusters that belong to the tenant, for example:

openstack coe cluster list

Show¶

The ‘cluster-show’ command prints all the details of a cluster, for example:

openstack coe cluster show mycluster

The properties include those not specified by users that have been assigned default values and properties from new resources that have been created for the cluster.

Update¶

A cluster can be modified using the ‘cluster-update’ command, for example:

openstack coe cluster update mycluster replace node_count=8

The parameters are positional and their definition and usage are as follows.

- <cluster>

This is the first parameter, specifying the UUID or name of the cluster to update.

- <op>

This is the second parameter, specifying the desired change to be made to the cluster attributes. The allowed changes are ‘add’, ‘replace’ and ‘remove’.

- <attribute=value>

This is the third parameter, specifying the targeted attributes in the cluster as a list separated by blank space. To add or replace an attribute, you need to specify the value for the attribute. To remove an attribute, you only need to specify the name of the attribute. Currently the only attribute that can be replaced or removed is ‘node_count’. The attributes ‘name’, ‘master_count’ and ‘discovery_url’ cannot be replaced or deleted. The table below summarizes the possible changes to a cluster.

| Attribute | add | replace | remove |
|---|---|---|---|
| node_count | no | add/remove nodes in default-worker nodegroup. | reset to default of 1 |
| master_count | no | no | no |
| name | no | no | no |
| discovery_url | no | no | no |

The ‘cluster-update’ operation cannot be initiated when another operation is in progress.

NOTE: The attribute names in cluster-update are slightly different from the corresponding names in the cluster-create command: the dash ‘-’ is replaced by an underscore ‘_’. For instance, ‘node-count’ in cluster-create is ‘node_count’ in cluster-update.
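The naming rule in the note and the table of allowed changes can be expressed in a few lines. The following sketch uses hypothetical helper names and encodes only what this section states; it is not taken from the Magnum client.

```python
# Operations permitted per attribute, transcribed from the table above.
ALLOWED_OPS = {
    "node_count": {"replace", "remove"},
    "master_count": set(),
    "name": set(),
    "discovery_url": set(),
}


def to_update_attribute(create_option):
    """Map a cluster-create option such as '--node-count' to 'node_count'."""
    return create_option.lstrip("-").replace("-", "_")


def is_update_allowed(op, attribute):
    """Return True if the table above permits op on attribute."""
    if op not in ("add", "replace", "remove"):
        raise ValueError("op must be 'add', 'replace' or 'remove'")
    return op in ALLOWED_OPS.get(attribute, set())


attribute = to_update_attribute("--node-count")
print(attribute, is_update_allowed("replace", attribute))
```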

Scale¶

Scaling a cluster means adding servers to or removing servers from the cluster. Currently, this is done through the ‘cluster-update’ operation by modifying the node-count attribute, for example:

openstack coe cluster update mycluster replace node_count=2

When some nodes are removed, Magnum will attempt to find nodes with no containers to remove. If some nodes with containers must be removed, Magnum will log a warning message.
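To make the scale-down behavior concrete, here is a small illustrative sketch of the idea of preferring nodes without containers. It is not Magnum's implementation; the function name and the input format (a mapping of node name to container count) are assumptions chosen for the example.

```python
def pick_nodes_to_remove(container_counts, new_node_count):
    """Choose nodes to remove, preferring nodes that host no containers.

    container_counts maps node name -> number of containers on that node.
    Returns (nodes_to_remove, busy_nodes), where busy_nodes are the chosen
    nodes that still host containers and would warrant a warning.
    """
    excess = len(container_counts) - new_node_count
    if excess <= 0:
        return [], []
    # Sort by container count so that empty nodes come first; ties by name.
    ordered = sorted(container_counts, key=lambda n: (container_counts[n], n))
    to_remove = ordered[:excess]
    busy = [n for n in to_remove if container_counts[n] > 0]
    return to_remove, busy


counts = {"node-0": 3, "node-1": 0, "node-2": 1, "node-3": 0}
removed, busy = pick_nodes_to_remove(counts, 1)
for node in busy:
    print("warning: removing node with containers:", node)
```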

Delete¶

The ‘cluster-delete’ operation removes the cluster by deleting all resources such as servers, network, storage; for example:

openstack coe cluster delete mycluster

The only parameter for the cluster-delete command is the ID or name of the cluster to delete. Multiple clusters can be specified, separated by a blank space.

If the operation fails, there may be some remaining resources that have not been deleted yet. In this case, you can troubleshoot through Heat. If the templates are deleted manually in Heat, you can delete the cluster in Magnum to clean up the cluster from Magnum database.

The ‘cluster-delete’ operation can be initiated when another operation is still in progress.

Python Client¶

Installation¶

Follow the instructions in the OpenStack Installation Guide to enable the repositories for your distribution:

Install using distribution packages for RHEL/CentOS/Fedora:

$ sudo yum install python-magnumclient

Install using distribution packages for Ubuntu/Debian:

$ sudo apt-get install python-magnumclient

Install using distribution packages for openSUSE and SUSE Enterprise Linux:

$ sudo zypper install python3-magnumclient

Verifying installation¶

Execute the openstack coe cluster list command to confirm that the client is installed and in the system path:

$ openstack coe cluster list
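The same check can be scripted. This is a minimal sketch, assuming only that the client is exposed as an executable named `openstack` on the system path; it confirms the executable is present and does not contact the Magnum service.

```python
import shutil


def client_available(executable="openstack"):
    """Return True if the given executable is found on the system path."""
    return shutil.which(executable) is not None


if client_available():
    print("openstack client found")
else:
    print("openstack client not found; install python-magnumclient")
```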

Using the command-line client¶

Refer to the OpenStack Command-Line Interface Reference for a full list of the commands supported by the openstack coe command-line client.

Horizon Interface¶

Magnum provides a Horizon plugin so that users can access the Container Infrastructure Management service through the OpenStack browser-based graphical UI. The plugin is available from magnum-ui. It is not installed by default in the standard Horizon service, but you can follow the instructions for installing a Horizon plugin.

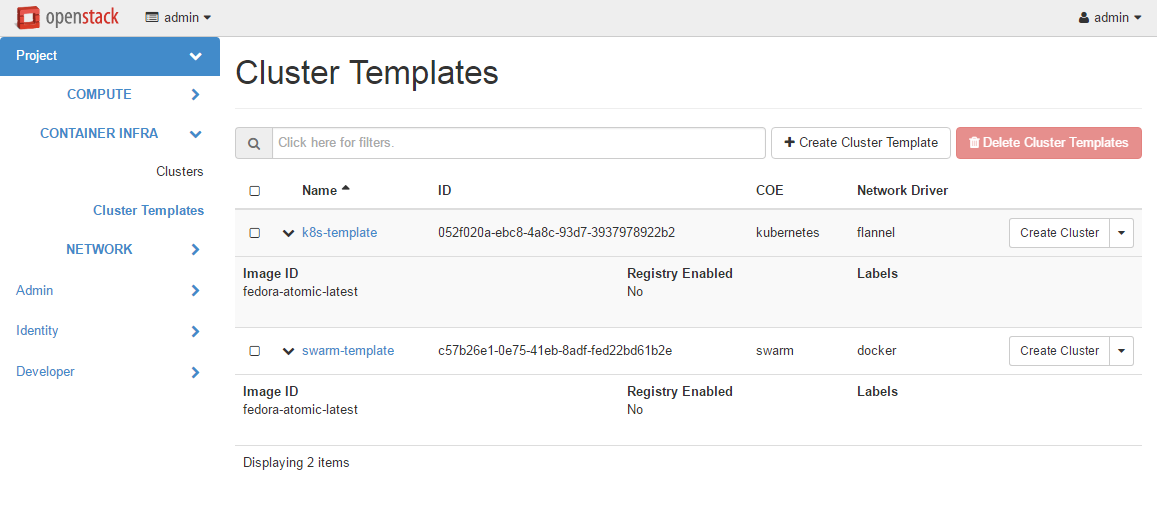

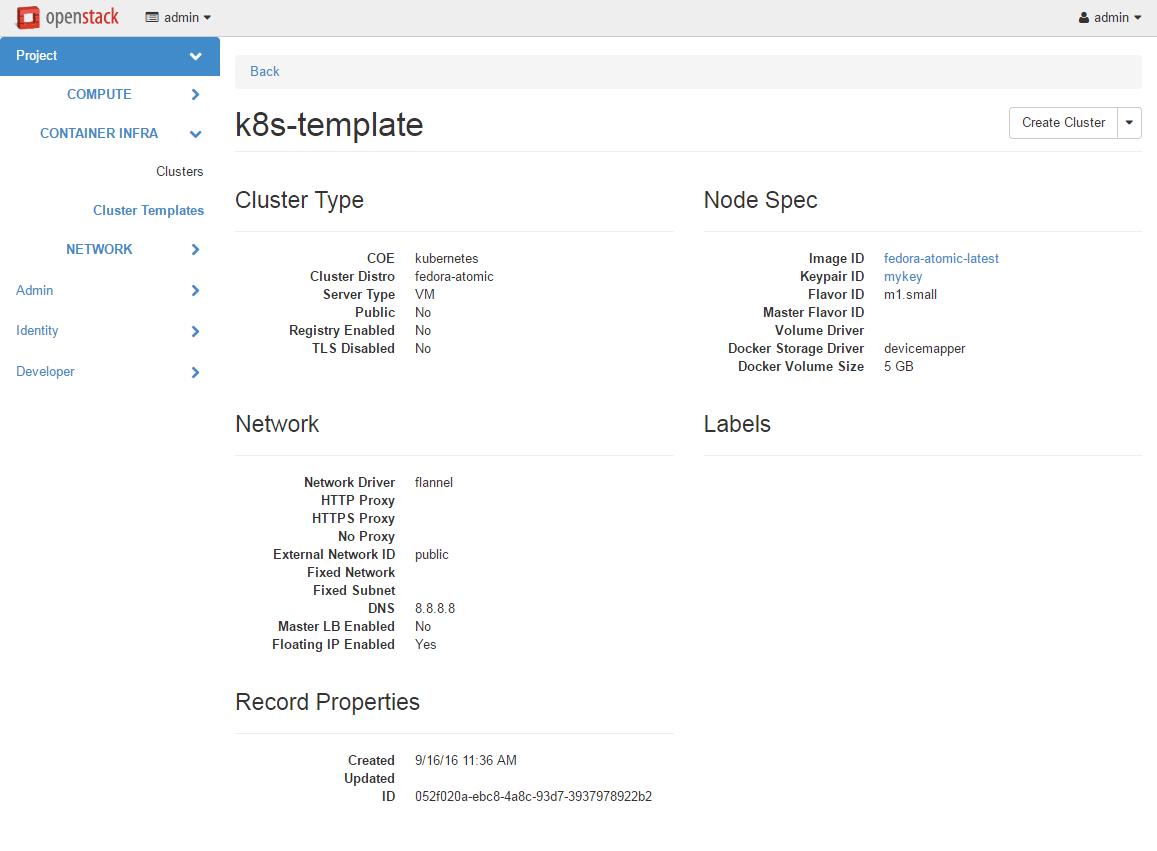

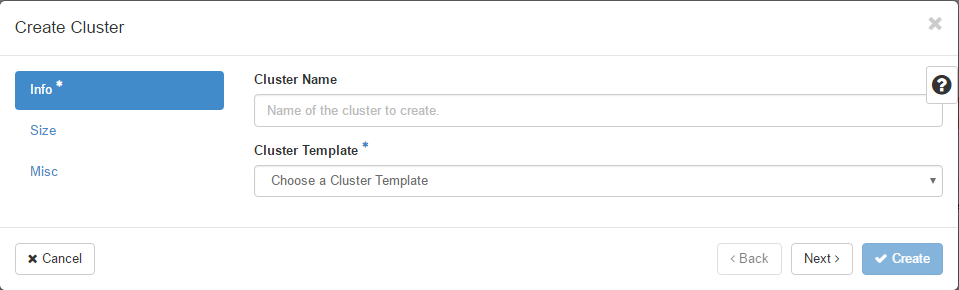

In Horizon, the container infrastructure panel is part of the ‘Project’ view and it currently supports the following operations:

View list of cluster templates

View details of a cluster template

Create a cluster template

Delete a cluster template

View list of clusters

View details of a cluster

Create a cluster

Delete a cluster

Get the Certificate Authority for a cluster

Sign a user key and obtain a signed certificate for accessing the secured COE API endpoint in a cluster.

Other operations are not yet supported and the CLI should be used for these.

Following is the screenshot of the Horizon view showing the list of cluster templates.

Following is the screenshot of the Horizon view showing the details of a cluster template.

Following is the screenshot of the dialog to create a new cluster.

Cluster Drivers¶

A cluster driver is a collection of python code, heat templates, scripts, images, and documents for a particular COE on a particular distro. Magnum presents the concept of ClusterTemplates and clusters. The implementation for a particular cluster type is provided by the cluster driver. In other words, the cluster driver provisions and manages the infrastructure for the COE. Magnum includes default drivers for the following COE and distro pairs:

| COE | distro |
|---|---|
| Kubernetes | Fedora Atomic |
| Kubernetes | CoreOS |
| Swarm | Fedora Atomic |
| Mesos | Ubuntu |

Magnum is designed to accommodate new cluster drivers to support custom COEs, and this section describes how a new cluster driver can be constructed and enabled in Magnum.

Directory structure¶

Magnum expects the components to be organized in the following directory structure under the directory ‘drivers’:

COE_Distro/
    image/
    templates/
    api.py
    driver.py
    monitor.py
    scale.py
    template_def.py
    version.py

The minimum required components are:

- driver.py

Python code that implements the controller operations for the particular COE. The driver must implement the currently supported operations: cluster_create, cluster_update, cluster_delete.

- templates

A directory of orchestration templates for managing the lifecycle of clusters, including creation, configuration, update, and deletion. Currently only Heat templates are supported, but in the future other orchestration mechanism such as Ansible may be supported.

- template_def.py

Python code that maps the parameters from the ClusterTemplate to the input parameters for the orchestration and invokes the orchestration in the templates directory.

- version.py

Tracks the latest version of the driver in this directory. This is defined by a version attribute and is represented in the form of 1.0.0. It should also include a Driver attribute with a descriptive name such as fedora_swarm_atomic.

The remaining components are optional:

- image

Instructions for obtaining or building an image suitable for the COE.

- api.py

Python code to interface with the COE.

- monitor.py

Python code to monitor the resource utilization of the cluster.

- scale.py

Python code to scale the cluster by adding or removing nodes.
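When laying out a new driver, a quick check of the directory against the required components listed above can catch omissions early. The sketch below is a standalone illustration with hypothetical helper names; it is not a Magnum tool, and the version check assumes the three-part numeric form 1.0.0 described for version.py.

```python
import os
import re
import tempfile

# Required and optional components, as listed in this section.
REQUIRED = ("driver.py", "templates", "template_def.py", "version.py")
OPTIONAL = ("image", "api.py", "monitor.py", "scale.py")


def missing_components(driver_dir):
    """Return the required components that are absent from driver_dir."""
    return [name for name in REQUIRED
            if not os.path.exists(os.path.join(driver_dir, name))]


def is_valid_version(text):
    """Check that a version string has the form 1.0.0."""
    return re.fullmatch(r"\d+\.\d+\.\d+", text) is not None


# Demonstration on a throwaway directory holding only two of the components.
with tempfile.TemporaryDirectory() as root:
    os.mkdir(os.path.join(root, "templates"))
    open(os.path.join(root, "driver.py"), "w").close()
    demo_missing = missing_components(root)

print(demo_missing)  # ['template_def.py', 'version.py']
```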

Sample cluster driver¶

To help developers in creating new COE drivers, a minimal cluster driver is provided as an example. The ‘docker’ cluster driver will simply deploy a single VM running Ubuntu with the latest Docker version installed. It is not a true cluster, but the simplicity will help to illustrate the key concepts.

To be filled in

Installing a cluster driver¶

To be filled in

Cluster Type Definition¶

There are three key pieces to a Cluster Type Definition:

- Heat Stack template - The HOT file that Magnum will use to generate a cluster using a Heat Stack.
- Template definition - Magnum’s interface for interacting with the Heat template.
- Definition Entry Point - Used to advertise the available Cluster Types.

The Heat Stack Template¶

The Heat Stack Template is where most of the real work happens. The result of the Heat Stack Template should be a full Container Orchestration Environment.

The Template Definition¶

Template definitions are a mapping of Magnum object attributes and Heat template parameters, along with Magnum consumable template outputs. A Cluster Type Definition indicates which Cluster Types it can provide. Cluster Types are how Magnum determines which of the enabled Cluster Type Definitions it will use for a given cluster.

The Definition Entry Point¶

Entry points are a standard discovery and import mechanism for Python objects. Each Template Definition should have an Entry Point in the magnum.template_definitions group. This example exposes its Template Definition as example_template = example_template:ExampleTemplate in the magnum.template_definitions group.
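As a sketch of how such an entry point is typically declared, the mapping below has the shape that setuptools accepts for the `entry_points` argument of `setup()` in a package's setup.py. The group name and the entry point string come from the text above; the helper `split_entry_point` is hypothetical and only shows how the string decomposes into a name, a module and an attribute.

```python
# Entry point declaration in the form accepted by setuptools'
# setup(entry_points=...): group name -> list of "name = module:attr".
ENTRY_POINTS = {
    "magnum.template_definitions": [
        "example_template = example_template:ExampleTemplate",
    ],
}


def split_entry_point(spec):
    """Split 'name = module:attr' into (name, module, attr)."""
    name, _, target = spec.partition("=")
    module, _, attr = target.strip().partition(":")
    return name.strip(), module.strip(), attr.strip()


for spec in ENTRY_POINTS["magnum.template_definitions"]:
    print(split_entry_point(spec))
```

Here the name on the left of ‘=’ is what would be listed in enabled_definitions, while the right side tells Python which module and class to import.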

Installing Cluster Templates¶

Because Cluster Type Definitions are basically Python projects, they can be worked with like any other Python project. They can be cloned from version control and installed or uploaded to a package index and installed via utilities such as pip.

Enabling a Cluster Type is as simple as adding its Entry Point to the enabled_definitions config option in magnum.conf:

# Setup python environment and install Magnum

$ virtualenv .venv

$ . .venv/bin/activate

(.venv)$ git clone https://opendev.org/openstack/magnum

(.venv)$ cd magnum

(.venv)$ python setup.py install

# List installed templates, notice default templates are enabled

(.venv)$ magnum-template-manage list-templates

Enabled Templates

magnum_vm_atomic_k8s: /home/example/.venv/local/lib/python2.7/site-packages/magnum/templates/kubernetes/kubecluster.yaml

magnum_vm_coreos_k8s: /home/example/.venv/local/lib/python2.7/site-packages/magnum/templates/kubernetes/kubecluster-coreos.yaml

Disabled Templates

# Install example template

(.venv)$ cd contrib/templates/example

(.venv)$ python setup.py install

# List installed templates, notice example template is disabled

(.venv)$ magnum-template-manage list-templates

Enabled Templates

magnum_vm_atomic_k8s: /home/example/.venv/local/lib/python2.7/site-packages/magnum/templates/kubernetes/kubecluster.yaml

magnum_vm_coreos_k8s: /home/example/.venv/local/lib/python2.7/site-packages/magnum/templates/kubernetes/kubecluster-coreos.yaml

Disabled Templates

example_template: /home/example/.venv/local/lib/python2.7/site-packages/ExampleTemplate-0.1-py2.7.egg/example_template/example.yaml

# Enable example template by setting enabled_definitions in magnum.conf

(.venv)$ sudo mkdir /etc/magnum

(.venv)$ sudo bash -c "cat > /etc/magnum/magnum.conf << END_CONF

[bay]

enabled_definitions=magnum_vm_atomic_k8s,magnum_vm_coreos_k8s,example_template

END_CONF"

# List installed templates, notice example template is now enabled

(.venv)$ magnum-template-manage list-templates

Enabled Templates

example_template: /home/example/.venv/local/lib/python2.7/site-packages/ExampleTemplate-0.1-py2.7.egg/example_template/example.yaml

magnum_vm_atomic_k8s: /home/example/.venv/local/lib/python2.7/site-packages/magnum/templates/kubernetes/kubecluster.yaml

magnum_vm_coreos_k8s: /home/example/.venv/local/lib/python2.7/site-packages/magnum/templates/kubernetes/kubecluster-coreos.yaml

Disabled Templates

# Use --details argument to get more details about each template

(.venv)$ magnum-template-manage list-templates --details

Enabled Templates

example_template: /home/example/.venv/local/lib/python2.7/site-packages/ExampleTemplate-0.1-py2.7.egg/example_template/example.yaml

Server_Type OS CoE

vm example example_coe

magnum_vm_atomic_k8s: /home/example/.venv/local/lib/python2.7/site-packages/magnum/templates/kubernetes/kubecluster.yaml

Server_Type OS CoE

vm fedora-atomic kubernetes

magnum_vm_coreos_k8s: /home/example/.venv/local/lib/python2.7/site-packages/magnum/templates/kubernetes/kubecluster-coreos.yaml

Server_Type OS CoE

vm coreos kubernetes

Disabled Templates

Heat Stack Templates¶

Heat Stack Templates are what Magnum passes to Heat to generate a cluster. For each ClusterTemplate resource in Magnum, a Heat stack is created to arrange all of the cloud resources needed to support the container orchestration environment. These Heat stack templates provide a mapping of Magnum object attributes to Heat template parameters, along with Magnum consumable stack outputs. Magnum passes the Heat Stack Template to the Heat service to create a Heat stack. The result is a full Container Orchestration Environment.

Choosing a COE¶

Magnum supports a variety of COE options, and allows more to be added over time as they gain popularity. As an operator, you may choose to support the full variety of options, or you may want to offer a subset of the available choices. Given multiple choices, your users can run one or more clusters, and each may use a different COE. For example, a user might have multiple clusters that use Kubernetes, and just one cluster that uses Swarm. All of these clusters can run concurrently, even though they use different COE software.

Choosing which COE to use depends on what tools you want to use to manage your containers once you start your app. If you want to use the Docker tools, you may want to use the Swarm cluster type. Swarm will spread your containers across the various nodes in your cluster automatically. It does not monitor the health of your containers, so it can’t restart them for you if they stop. It will not automatically scale your app for you (as of Swarm version 1.2.2). You may view this as a plus: if you prefer to manage your application yourself, you might prefer Swarm over the other COE options.

Kubernetes (as of v1.2) is more sophisticated than Swarm (as of v1.2.2). It offers an attractive YAML file description of a pod, which is a grouping of containers that run together as part of a distributed application. This file format allows you to model your application deployment using a declarative style. It has support for auto scaling and fault recovery, as well as features that allow for sophisticated software deployments, including canary deploys and blue/green deploys. Kubernetes is very popular, especially for web applications.

Apache Mesos is a COE that has been around longer than Kubernetes or Swarm. It allows for a variety of different frameworks to be used along with it, including Marathon, Aurora, Chronos, Hadoop, and a number of others.

The Apache Mesos framework design can be used to run alternate COE software directly on Mesos. Although this approach is not widely used yet, it may soon be possible to run Mesos with Kubernetes and Swarm as frameworks, allowing you to share the resources of a cluster between multiple different COEs. Until this option matures, we encourage Magnum users to create multiple clusters, and use the COE in each cluster that best fits the anticipated workload.

Finding the right COE for your workload is up to you, but Magnum lets you choose among the leading options. Once you decide, see the next sections for examples of how to create a cluster with your desired COE.

Native Clients¶

Magnum preserves the native user experience with a COE and does not provide a separate API or client. This means you will need to use the native client for the particular cluster type to interface with the clusters. In the typical case, there are two clients to consider:

- COE level

This is the orchestration or management level such as Kubernetes, Swarm, Mesos and its frameworks.

- Container level

This is the low level container operation. Currently it is Docker for all clusters.

The clients can be CLI and/or browser-based. You will need to refer to the documentation for the specific native client and appropriate version for details, but following are some pointers for reference.

The Kubernetes CLI is the tool ‘kubectl’, which can be copied from a node in the cluster or downloaded from the Kubernetes release. For instance, if the cluster is running Kubernetes release 1.2.0, the binary for ‘kubectl’ can be downloaded and set up locally as follows:

curl -O https://storage.googleapis.com/kubernetes-release/release/v1.2.0/bin/linux/amd64/kubectl

chmod +x kubectl

sudo mv kubectl /usr/local/bin/kubectl

Kubernetes also provides a browser UI. If the cluster has the Kubernetes Dashboard running, it can be accessed using:

eval $(openstack coe cluster config <cluster-name>)

kubectl proxy

The browser can be accessed at http://localhost:8001/ui

For Swarm, the main CLI is ‘docker’, along with associated tools such as ‘docker-compose’. Specific versions of the binaries can be obtained from the Docker Engine installation.

A Mesos cluster uses the Marathon framework; details on the Marathon UI can be found in the section Using Marathon.

Depending on the client requirement, you may need to use a version of the client that matches the version in the cluster. To determine the version of the COE and container, use the command ‘cluster-show’ and look for the attributes coe_version and container_version:

openstack coe cluster show k8s-cluster

+--------------------+------------------------------------------------------------+
| Property           | Value                                                      |
+--------------------+------------------------------------------------------------+
| status             | CREATE_COMPLETE                                            |
| uuid               | 04952c60-a338-437f-a7e7-d016d1d00e65                       |
| stack_id           | b7bf72ce-b08e-4768-8201-e63a99346898                       |
| status_reason      | Stack CREATE completed successfully                        |
| created_at         | 2016-07-25T23:14:06+00:00                                  |
| updated_at         | 2016-07-25T23:14:10+00:00                                  |
| create_timeout     | 60                                                         |
| coe_version        | v1.2.0                                                     |
| api_address        | https://192.168.19.86:6443                                 |
| cluster_template_id| da2825a0-6d09-4208-b39e-b2db666f1118                       |
| master_addresses   | ['192.168.19.87']                                          |
| node_count         | 1                                                          |
| node_addresses     | ['192.168.19.88']                                          |
| master_count       | 1                                                          |
| container_version  | 1.9.1                                                      |
| discovery_url      | https://discovery.etcd.io/3b7fb09733429d16679484673ba3bfd5 |
| name               | k8s-cluster                                                |
+--------------------+------------------------------------------------------------+
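When the version check has to happen in a script, one option is to filter the table shown above for a single property. The following is a minimal sketch, not part of Magnum: the helper name cluster_field is hypothetical, and the sample rows are copied from the output above so that the block runs without access to a cloud.

```shell
#!/bin/sh
# Hypothetical helper (not a Magnum command): print the value of one
# property from the two-column table printed by 'cluster show'.
cluster_field() {
    # $1 = property name; the table is read from standard input.
    awk -F'|' -v key="$1" '
        NF >= 3 {
            name = $2; value = $3
            gsub(/^[ \t]+|[ \t]+$/, "", name)    # trim the property cell
            gsub(/^[ \t]+|[ \t]+$/, "", value)   # trim the value cell
            if (name == key) { print value; exit }
        }'
}

# Sample rows copied from the output above.
sample_table='| coe_version        | v1.2.0 |
| cluster_template_id| da2825a0-6d09-4208-b39e-b2db666f1118 |
| container_version  | 1.9.1 |'

coe_version=$(printf '%s\n' "$sample_table" | cluster_field coe_version)
container_version=$(printf '%s\n' "$sample_table" | cluster_field container_version)
echo "COE version: ${coe_version}, container version: ${container_version}"
```

Against a live cluster, the same helper could be fed from the command shown earlier, for example: openstack coe cluster show k8s-cluster | cluster_field coe_version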

Kubernetes¶

Kubernetes uses a range of terminology that we refer to in this guide. We define these common terms in the Glossary for your reference.

When Magnum deploys a Kubernetes cluster, it uses parameters defined in the ClusterTemplate and specified on the cluster-create command, for example:

openstack coe cluster template create k8s-cluster-template \
    --image fedora-atomic-latest \
    --keypair testkey \
    --external-network public \
    --dns-nameserver 8.8.8.8 \
    --flavor m1.small \
    --docker-volume-size 5 \
    --network-driver flannel \
    --coe kubernetes

openstack coe cluster create k8s-cluster \
    --cluster-template k8s-cluster-template \
    --master-count 3 \
    --node-count 8

Refer to the ClusterTemplate and Cluster sections for the full list of parameters. Following are further details relevant to a Kubernetes cluster:

- Number of masters (master-count)

Specified in the cluster-create command to indicate how many servers will run as master in the cluster. Having more than one will provide high availability. The masters will be in a load balancer pool and the virtual IP address (VIP) of the load balancer will serve as the Kubernetes API endpoint. For external access, a floating IP associated with this VIP is available and this is the endpoint shown for Kubernetes in the ‘cluster-show’ command.

- Number of nodes (node-count)

Specified in the cluster-create command to indicate how many servers will run as node in the cluster to host the users’ pods. The nodes are registered in Kubernetes using the Nova instance name.

- Network driver (network-driver)

Specified in the ClusterTemplate to select the network driver. The supported and default network driver is ‘flannel’, an overlay network providing a flat network for all pods. Refer to the Networking section for more details.

- Volume driver (volume-driver)

Specified in the ClusterTemplate to select the volume driver. The supported volume driver is ‘cinder’, allowing Cinder volumes to be mounted in containers for use as persistent storage. Data written to these volumes will persist after the container exits and can be accessed again from other containers, while data written to the union file system hosting the container will be deleted. Refer to the Storage section for more details.

- Storage driver (docker-storage-driver)

Specified in the ClusterTemplate to select the Docker storage driver. The default is ‘devicemapper’. Refer to the Storage section for more details.

NOTE: For Fedora CoreOS driver, devicemapper is not supported.

- Image (image)

Specified in the ClusterTemplate to indicate the image to boot the servers. The image binary is loaded in Glance with the attribute ‘os_distro = fedora-atomic’. Currently supported images are Fedora Atomic (download from Fedora) and CoreOS (download from CoreOS).

- TLS (tls-disabled)

Transport Layer Security is enabled by default, so you need a key and signed certificate to access the Kubernetes API and CLI. Magnum handles its own key and certificate when interfacing with the Kubernetes cluster. In development mode, TLS can be disabled. Refer to the Transport Layer Security section for more details.

- What runs on the servers

The servers for Kubernetes master host containers in the ‘kube-system’ namespace to run the Kubernetes proxy, scheduler and controller manager. The masters will not host users’ pods. Kubernetes API server, docker daemon, etcd and flannel run as systemd services. The servers for Kubernetes node also host a container in the ‘kube-system’ namespace to run the Kubernetes proxy, while Kubernetes kubelet, docker daemon and flannel run as systemd services.

- Log into the servers

You can log into the master servers using the login ‘fedora’ and the keypair specified in the ClusterTemplate.

In addition to the common attributes in the ClusterTemplate, you can specify the following attributes that are specific to Kubernetes by using the labels attribute.

- admission_control_list

This label corresponds to the Kubernetes API server parameter ‘--admission-control’. For more details, refer to the Admission Controllers documentation. The default value corresponds to the one recommended in that documentation for our current Kubernetes version.

- boot_volume_size

This label overrides the default_boot_volume_size of instances which is useful if your flavors are boot from volume only. The default value is 0, meaning that cluster instances will not boot from volume.

- boot_volume_type

This label overrides the default_boot_volume_type of instances which is useful if your flavors are boot from volume only. The default value is ‘’, meaning that Magnum will randomly select a Cinder volume type from all available options.

- etcd_volume_size

This label sets the size of a volume holding the etcd storage data. The default value is 0, meaning the etcd data is not persisted (no volume).

- etcd_volume_type

This label overrides the default_etcd_volume_type holding the etcd storage data. The default value is ‘’, meaning that Magnum will randomly select a Cinder volume type from all available options.

- container_infra_prefix

Prefix of all container images used in the cluster (kubernetes components, coredns, kubernetes-dashboard, node-exporter). For example, kubernetes-apiserver is pulled from docker.io/openstackmagnum/kubernetes-apiserver, with this label it can be changed to myregistry.example.com/mycloud/kubernetes-apiserver. Similarly, all other components used in the cluster will be prefixed with this label, which assumes an operator has cloned all expected images in myregistry.example.com/mycloud.

Images that must be mirrored:

docker.io/coredns/coredns:1.3.1

quay.io/coreos/etcd:v3.4.6

docker.io/k8scloudprovider/k8s-keystone-auth:v1.18.0

docker.io/k8scloudprovider/openstack-cloud-controller-manager:v1.18.0

gcr.io/google_containers/pause:3.1

Images that might be needed when ‘use_podman’ is ‘false’:

docker.io/openstackmagnum/kubernetes-apiserver

docker.io/openstackmagnum/kubernetes-controller-manager

docker.io/openstackmagnum/kubernetes-kubelet

docker.io/openstackmagnum/kubernetes-proxy

docker.io/openstackmagnum/kubernetes-scheduler

Images that might be needed:

k8s.gcr.io/hyperkube:v1.18.2

docker.io/grafana/grafana:5.1.5

docker.io/prom/node-exporter:latest

docker.io/prom/prometheus:latest

docker.io/traefik:v1.7.10

gcr.io/google_containers/kubernetes-dashboard-amd64:v1.5.1

gcr.io/google_containers/metrics-server-amd64:v0.3.6

k8s.gcr.io/node-problem-detector:v0.6.2

docker.io/planetlabs/draino:abf028a

docker.io/openstackmagnum/cluster-autoscaler:v1.18.1

quay.io/calico/cni:v3.13.1

quay.io/calico/pod2daemon-flexvol:v3.13.1

quay.io/calico/kube-controllers:v3.13.1

quay.io/calico/node:v3.13.1

quay.io/coreos/flannel-cni:v0.3.0

quay.io/coreos/flannel:v0.12.0-amd64

Images that might be needed if ‘monitoring_enabled’ is ‘true’:

quay.io/prometheus/alertmanager:v0.20.0

docker.io/squareup/ghostunnel:v1.5.2

docker.io/jettech/kube-webhook-certgen:v1.0.0

quay.io/coreos/prometheus-operator:v0.37.0

quay.io/coreos/configmap-reload:v0.0.1

quay.io/coreos/prometheus-config-reloader:v0.37.0

quay.io/prometheus/prometheus:v2.15.2

Images that might be needed if ‘cinder_csi_enabled’ is ‘true’:

docker.io/k8scloudprovider/cinder-csi-plugin:v1.18.0

quay.io/k8scsi/csi-attacher:v2.0.0

quay.io/k8scsi/csi-provisioner:v1.4.0

quay.io/k8scsi/csi-snapshotter:v1.2.2

quay.io/k8scsi/csi-resizer:v0.3.0

quay.io/k8scsi/csi-node-driver-registrar:v1.1.0
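The renaming implied by container_infra_prefix can be sketched as a small shell helper. This is an illustration rather than a Magnum tool: the function name mirror_target is hypothetical, it assumes (following the kubernetes-apiserver example above) that only the final image name and tag are kept under the new prefix, and the docker commands are printed as a dry run instead of being executed.

```shell
#!/bin/sh
# Prefix taken from the example in the text; replace it with your own registry.
PREFIX="myregistry.example.com/mycloud"

# Hypothetical helper: map an upstream image reference to its mirrored name
# by keeping only the last path component (name:tag) under the new prefix.
mirror_target() {
    echo "${PREFIX}/${1##*/}"
}

# Dry run over a few entries from the "must be mirrored" list above:
# print the commands an operator might run, without executing them.
for image in \
    "docker.io/coredns/coredns:1.3.1" \
    "quay.io/coreos/etcd:v3.4.6" \
    "gcr.io/google_containers/pause:3.1"
do
    target=$(mirror_target "$image")
    echo "docker pull ${image}"
    echo "docker tag ${image} ${target}"
    echo "docker push ${target}"
done
```

Printing the commands first makes it easy to review the target names before anything is pushed to the private registry.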

- hyperkube_prefix

This label allows users to specify a custom prefix for the Hyperkube container source, since official Hyperkube images have been discontinued for kube_tag greater than 1.18.x. If you wish to use 1.19.x onwards, you may want to use unofficial sources like docker.io/rancher/, ghcr.io/openstackmagnum/ or your own container registry. If the container_infra_prefix label is defined, it still takes precedence over this label. Default: k8s.gcr.io/

- kube_tag

This label allows users to select a specific Kubernetes release based on its container tag, either for Fedora Atomic or for Fedora CoreOS and Fedora Atomic (with the use_podman=true label). If unset, the current Magnum version’s default Kubernetes release is installed. Take a look at the Wiki for a compatibility matrix between Kubernetes and Magnum releases. Stein default: v1.11.6 Train default: v1.15.7 Ussuri default: v1.18.2 Victoria default: v1.18.16

- heapster_enabled

heapster_enabled is used to enable or disable the installation of heapster. Ussuri default: false Train default: true

- metrics_server_chart_tag

Add metrics_server_chart_tag to select the version of the stable/metrics-server chart to install. Ussuri default: v2.8.8

- metrics_server_enabled

metrics_server_enabled is used to enable or disable the installation of the metrics server. To use this service, tiller_enabled must be true when using helm_client_tag<v3.0.0. Train default: true Stein default: true

- cloud_provider_tag

This label allows users to override the default openstack-cloud-controller-manager container image tag. Refer to openstack-cloud-controller-manager page for available tags. Stein default: v0.2.0 Train default: v1.15.0 Ussuri default: v1.18.0

- etcd_tag

This label allows users to select a specific etcd version, based on its container tag. If unset, the current Magnum version’s default etcd version is used. Stein default: v3.2.7 Train default: 3.2.26 Ussuri default: v3.4.6

- coredns_tag

This label allows users to select a specific coredns version, based on its container tag. If unset, the current Magnum version’s default coredns version is used. Stein default: 1.3.1 Train default: 1.3.1 Ussuri default: 1.6.6

- flannel_tag

This label allows users to select a specific flannel version, based on its container tag. Tag lists are referenced for Queens and for Rocky (https://quay.io/repository/coreos/flannel?tab=tags). If unset, the default version will be used. Stein default: v0.10.0-amd64 Train default: v0.11.0-amd64 Ussuri default: v0.12.0-amd64

- flannel_cni_tag

This label allows users to select a specific flannel_cni version, based on its container tag. This container adds the cni plugins in the host under /opt/cni/bin. If unset, the current Magnum version’s default flannel_cni version is used. Stein default: v0.3.0 Train default: v0.3.0 Ussuri default: v0.3.0

- heat_container_agent_tag

This label allows users to select a specific heat_container_agent version, based on its container tag. Train-default: train-stable-3 Ussuri-default: ussuri-stable-1 Victoria-default: victoria-stable-1

- kube_dashboard_enabled

This label triggers the deployment of the kubernetes dashboard. The default value is 1, meaning it will be enabled.

- cert_manager_api

This label enables the kubernetes certificate manager api.

- kubelet_options

This label can hold any additional options to be passed to the kubelet. For more details, refer to the kubelet admin guide. By default no additional options are passed.

- kubeproxy_options

This label can hold any additional options to be passed to the kube proxy. For more details, refer to the kube proxy admin guide. By default no additional options are passed.

- kubecontroller_options

This label can hold any additional options to be passed to the kube controller manager. For more details, refer to the kube controller manager admin guide. By default no additional options are passed.

- kubeapi_options

This label can hold any additional options to be passed to the kube api server. For more details, refer to the kube api admin guide. By default no additional options are passed.

- kubescheduler_options

This label can hold any additional options to be passed to the kube scheduler. For more details, refer to the kube scheduler admin guide. By default no additional options are passed.

- influx_grafana_dashboard_enabled

The kubernetes dashboard comes with heapster enabled. If this label is set, an influxdb and grafana instance will be deployed, heapster will push data to influx and grafana will display them.

- cgroup_driver

This label tells kubelet which Cgroup driver to use. Ideally this should be identical to the Cgroup driver that Docker has been started with.

- cloud_provider_enabled

Add ‘cloud_provider_enabled’ label for the k8s_fedora_atomic driver. Defaults to the value of ‘cluster_user_trust’ (default: ‘false’ unless explicitly set to ‘true’ in magnum.conf due to CVE-2016-7404). Consequently, ‘cloud_provider_enabled’ label cannot be overridden to ‘true’ when ‘cluster_user_trust’ resolves to ‘false’. For specific kubernetes versions, if ‘cinder’ is selected as a ‘volume_driver’, it is implied that the cloud provider will be enabled since they are combined.

- cinder_csi_enabled

When ‘true’, out-of-tree Cinder CSI driver will be enabled. Requires ‘cinder’ to be selected as a ‘volume_driver’ and consequently also requires label ‘cloud_provider_enabled’ to be ‘true’ (see ‘cloud_provider_enabled’ section). Ussuri default: false Victoria default: true

- cinder_csi_plugin_tag

This label allows users to override the default cinder-csi-plugin container image tag. Refer to cinder-csi-plugin page for available tags. Train default: v1.16.0 Ussuri default: v1.18.0

- csi_attacher_tag

This label allows users to override the default container tag for CSI attacher. For additional tags, refer to CSI attacher page. Ussuri-default: v2.0.0

- csi_provisioner_tag

This label allows users to override the default container tag for CSI provisioner. For additional tags, refer to CSI provisioner page. Ussuri-default: v1.4.0

- csi_snapshotter_tag

This label allows users to override the default container tag for CSI snapshotter. For additional tags, refer to CSI snapshotter page. Ussuri-default: v1.2.2

- csi_resizer_tag

This label allows users to override the default container tag for CSI resizer. For additional tags, refer to CSI resizer page. Ussuri-default: v0.3.0

- csi_node_driver_registrar_tag

This label allows users to override the default container tag for CSI node driver registrar. For additional tags, refer to CSI node driver registrar page. Ussuri-default: v1.1.0

- keystone_auth_enabled

If this label is set to True, Kubernetes will support using Keystone for authorization and authentication.

- k8s_keystone_auth_tag

This label allows users to override the default k8s-keystone-auth container image tag. Refer to k8s-keystone-auth page for available tags. Stein default: v1.13.0 Train default: v1.14.0 Ussuri default: v1.18.0

- monitoring_enabled

Enable installation of cluster monitoring solution provided by the stable/prometheus-operator helm chart. To use this service tiller_enabled must be true when using helm_client_tag<v3.0.0. Default: false

- prometheus_adapter_enabled

Enable installation of cluster custom metrics provided by the stable/prometheus-adapter helm chart. This service depends on monitoring_enabled. Default: true

- prometheus_adapter_chart_tag

The stable/prometheus-adapter helm chart version to use. Train-default: 1.4.0

- prometheus_adapter_configmap

The name of the prometheus-adapter rules ConfigMap to use. Using this label will overwrite the default rules. Default: “”

- prometheus_operator_chart_tag

Add prometheus_operator_chart_tag to select the version of the stable/prometheus-operator chart to install. When installing the chart, helm will use the default values of the tag defined and overwrite them based on the prometheus-operator-config ConfigMap currently defined. You must verify that the versions are compatible.

- tiller_enabled

If set to true, tiller will be deployed in the kube-system namespace. Ussuri default: false Train default: false

- tiller_tag

This label allows users to override the default container tag for Tiller. For additional tags, refer to Tiller page and look for tags<v3.0.0. Train default: v2.12.3 Ussuri default: v2.16.7

- tiller_namespace

The namespace in which Tiller and Helm v2 chart install jobs are installed. Default: magnum-tiller

- helm_client_url

URL of the helm client binary. Default: ‘’

- helm_client_sha256

SHA256 checksum of the helm client binary. Ussuri default: 018f9908cb950701a5d59e757653a790c66d8eda288625dbb185354ca6f41f6b

- helm_client_tag

This label allows users to override the default container tag for Helm client. For additional tags, refer to Helm client page. You must use identical tiller_tag if you wish to use Tiller (for helm_client_tag<v3.0.0). Ussuri default: v3.2.1

- master_lb_floating_ip_enabled

Controls if Magnum allocates floating IP for the load balancer of master nodes. This label only takes effect when the template property master_lb_enabled is set. If not specified, the default value is the same as template property floating_ip_enabled.

- master_lb_allowed_cidrs

A CIDR list which can be used to control the access for the load balancer of master nodes. The input format is a comma-delimited list, for example 192.168.0.0/16,10.0.0.0/24. Default: “” (which opens to 0.0.0.0/0)

- auto_healing_enabled

If set to true, auto healing feature will be enabled. Defaults to false.

- auto_healing_controller

This label sets the auto-healing service to be used. Currently draino and magnum-auto-healer are supported. The default is draino. For more details, see draino doc and magnum-auto-healer doc.

- draino_tag

This label allows users to select a specific Draino version.

- magnum_auto_healer_tag

This label allows users to override the default magnum-auto-healer container image tag. Refer to magnum-auto-healer page for available tags. Stein default: v1.15.0 Train default: v1.15.0 Ussuri default: v1.18.0

- auto_scaling_enabled

If set to true, auto scaling feature will be enabled. Default: false.

- autoscaler_tag

This label allows users to override the default cluster-autoscaler container image tag. Refer to cluster-autoscaler page for available tags. Stein default: v1.0 Train default: v1.0 Ussuri default: v1.18.1

- npd_enabled

Set Node Problem Detector service enabled or disabled. Default: true

- node_problem_detector_tag

This label allows users to select a specific Node Problem Detector version.

- min_node_count

The minimum node count of the cluster when doing auto scaling or auto healing. Default: 1

- max_node_count

The maximum node count of the cluster when doing auto scaling or auto healing.

- use_podman

Choose whether system containers etcd, kubernetes and the heat-agent will be installed with podman or atomic. This label is relevant for k8s_fedora drivers.

k8s_fedora_atomic_v1 defaults to use_podman=false, meaning atomic will be used, pulling containers from docker.io/openstackmagnum. use_podman=true is accepted as well, which will pull containers from k8s.gcr.io.

k8s_fedora_coreos_v1 defaults to and accepts only use_podman=true.

Note that, to use a Kubernetes version greater than or equal to v1.16.0 with the k8s_fedora_atomic_v1 driver, you need to set use_podman=true. This is necessary since v1.16 dropped the --containerized flag in kubelet. https://github.com/kubernetes/kubernetes/pull/80043/files

- selinux_mode

Choose SELinux mode between enforcing, permissive and disabled. This label is currently only relevant for k8s_fedora drivers.

k8s_fedora_atomic_v1 driver defaults to selinux_mode=permissive because this was the only way atomic containers were able to start Kubernetes services. On the other hand, if the opt-in use_podman=true label is supplied, selinux_mode=enforcing is supported. Note that if selinux_mode=disabled is chosen, this only takes full effect once the instances are manually rebooted but they will be set to permissive mode in the meantime.

k8s_fedora_coreos_v1 driver defaults to selinux_mode=enforcing.

- container_runtime

The container runtime to use. An empty value means that docker from the host is used. Since Ussuri, apart from empty (host-docker), containerd is also an option.

- containerd_version

The containerd version to use as released in https://github.com/containerd/containerd/releases and https://storage.googleapis.com/cri-containerd-release/ Victoria default: 1.4.4 Ussuri default: 1.2.8

- containerd_tarball_url

URL of the tarball with containerd’s binaries.

- containerd_tarball_sha256

sha256 of the tarball fetched with containerd_tarball_url or from https://github.com/containerd/containerd/releases.

- kube_dashboard_version

Default version of Kubernetes dashboard. Train default: v1.8.3 Ussuri default: v2.0.0

- metrics_scraper_tag

The version of metrics-scraper used by kubernetes dashboard. Ussuri default: v1.0.4

- fixed_subnet_cidr

CIDR of the fixed subnet created by Magnum when a user has not specified an existing fixed_subnet during cluster creation. Ussuri default: 10.0.0.0/24
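Several of the labels above are usually set together as comma-separated key=value pairs. The sketch below only assembles such a string and prints the resulting option as a dry run. The helper name join_labels is hypothetical, the values are examples taken from the defaults and options listed above, and the --labels option is assumed from the ClusterTemplate section, so check it against your client version.

```shell
#!/bin/sh
# Hypothetical helper: join all arguments with commas.
join_labels() {
    out=""
    for pair in "$@"; do
        if [ -z "$out" ]; then
            out="$pair"
        else
            out="${out},${pair}"
        fi
    done
    printf '%s\n' "$out"
}

# Example label values; adjust them to your release and needs.
labels=$(join_labels \
    "kube_tag=v1.18.2" \
    "auto_healing_enabled=true" \
    "auto_healing_controller=draino" \
    "min_node_count=1")

# Dry run: print the option that would be appended to the
# 'openstack coe cluster template create' command shown earlier.
echo "--labels ${labels}"
```

Keeping one label per line in the script makes it easier to review which defaults are being overridden.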

External load balancer for services¶

All Kubernetes pods and services created in the cluster are assigned IP addresses on a private container network so they can access each other and the external internet. However, these IP addresses are not accessible from an external network.

To publish a service endpoint externally so that the service can be accessed from the external network, Kubernetes provides the external load balancer feature. This is done by simply specifying in the service manifest the attribute “type: LoadBalancer”. Magnum enables and configures the Kubernetes plugin for OpenStack so that it can interface with Neutron and manage the necessary networking resources.

When the service is created, Kubernetes will add an external load balancer in front of the service so that the service will have an external IP address in addition to the internal IP address on the container network. The service endpoint can then be accessed with this external IP address. Kubernetes handles all the life cycle operations when pods are modified behind the service and when the service is deleted.

Refer to the Kubernetes External Load Balancer section for more details.
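As an illustration of the “type: LoadBalancer” attribute, the sketch below writes a minimal Service manifest to a file. The service name, selector and ports are placeholders chosen for this example and are not taken from Magnum. The kubectl commands are left as comments because they require a running cluster.

```shell
#!/bin/sh
# Write a minimal Service manifest. The name, selector and ports are
# placeholders; only 'type: LoadBalancer' comes from the text above.
cat > nginx-service.yaml <<'EOF'
apiVersion: v1
kind: Service
metadata:
  name: nginx-service
spec:
  type: LoadBalancer
  selector:
    app: nginx
  ports:
    - port: 80
      targetPort: 80
EOF

# With kubectl configured for the cluster, the service could be created
# and its external IP address inspected with:
#   kubectl create -f nginx-service.yaml
#   kubectl get service nginx-service
echo "wrote nginx-service.yaml"
```

The external IP address may take some time to appear while the load balancer is being provisioned.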

Ingress Controller¶

In addition to the LoadBalancer described above, Kubernetes can also be configured with an Ingress Controller. Ingress can provide load balancing, SSL termination and name-based virtual hosting.

Magnum allows selecting one of multiple controller options via the ‘ingress_controller’ label. Check the Kubernetes documentation to define your own Ingress resources.

Traefik: Traefik’s pods by default expose ports 80 and 443 for http(s) traffic on the nodes they are running on. In a Kubernetes cluster, these ports are closed by default. The cluster administrator needs to add rules to the worker nodes’ security group. For example:

openstack security group rule create <SECURITY_GROUP> \
    --protocol tcp \
    --dst-port 80:80

openstack security group rule create <SECURITY_GROUP> \
    --protocol tcp \
    --dst-port 443:443

- ingress_controller

This label sets the Ingress Controller to be used. Currently ‘traefik’, ‘nginx’ and ‘octavia’ are supported. The default is ‘’, meaning no Ingress Controller is configured. For more details about octavia-ingress-controller, please refer to the cloud-provider-openstack documentation. To use the ‘nginx’ ingress controller, tiller_enabled must be true when using helm_client_tag<v3.0.0.

- ingress_controller_role

This label defines the role nodes should have to run an instance of the Ingress Controller. This gives operators full control on which nodes should be running an instance of the controller, and should be set in multiple nodes for availability. Default is ‘ingress’. An example of setting this in a Kubernetes node would be:

kubectl label node <node-name> role=ingress

This label is not used for octavia-ingress-controller.

- octavia_ingress_controller_tag

The image tag for octavia-ingress-controller. Train-default: v1.15.0

- nginx_ingress_controller_tag

The image tag for nginx-ingress-controller. Stein-default: 0.23.0 Train-default: 0.26.1 Ussuri-default: 0.26.1 Victoria-default: 0.32.0

- nginx_ingress_controller_chart_tag

The chart version for nginx-ingress-controller. Train-default: v1.24.7 Ussuri-default: v1.24.7 Victoria-default: v1.36.3

- traefik_ingress_controller_tag

The image tag for the Traefik ingress controller. Stein-default: v1.7.10

DNS¶

CoreDNS is a critical service in a Kubernetes cluster for service discovery. To provide high availability for the CoreDNS pods, Magnum supports autoscaling of CoreDNS using cluster-proportional-autoscaler. With cluster-proportional-autoscaler, the number of CoreDNS replicas is scaled based on the number of nodes and cores in the cluster, which helps prevent a single point of failure.

The scaling parameters and data points are provided via a ConfigMap to the autoscaler, and it refreshes its parameters table every poll interval to be up to date with the latest desired scaling parameters. Using a ConfigMap means the user can make on-the-fly changes (including control mode) without rebuilding or restarting the scaler containers/pods. Please refer to Autoscale the DNS Service in a Cluster for more info.
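How node and core counts translate into a replica count can be illustrated with the linear mode formula documented for cluster-proportional-autoscaler: replicas = max(ceil(cores / coresPerReplica), ceil(nodes / nodesPerReplica)). The sketch below only reproduces that arithmetic. The function names are hypothetical, the values 16 and 256 are illustrative rather than necessarily what Magnum configures, and any optional bounds in the ConfigMap are ignored.

```shell
#!/bin/sh
# Ceiling of integer division: ceil(a / b) for positive integers.
ceil_div() {
    echo $(( ($1 + $2 - 1) / $2 ))
}

# Hypothetical helper: replica count in linear mode.
# $1 = nodes, $2 = cores, $3 = nodesPerReplica, $4 = coresPerReplica
dns_replicas() {
    by_nodes=$(ceil_div "$1" "$3")
    by_cores=$(ceil_div "$2" "$4")
    # The larger of the two estimates wins.
    if [ "$by_cores" -gt "$by_nodes" ]; then
        echo "$by_cores"
    else
        echo "$by_nodes"
    fi
}

dns_replicas 8 32 16 256     # 8 nodes, 32 cores
dns_replicas 40 160 16 256   # 40 nodes, 160 cores
```

With these illustrative parameters, the node count dominates for the larger cluster, because 40 nodes at 16 nodes per replica require more replicas than 160 cores at 256 cores per replica.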

Keystone authN and authZ¶

cloud-provider-openstack provides a webhook between OpenStack Keystone and Kubernetes, so that users can perform authorization and authentication with a Keystone user/role against the Kubernetes cluster. If the label keystone_auth_enabled is set to True, users can use their OpenStack credentials and roles to access resources in Kubernetes.

Assuming you have already obtained the configs with the command eval $(openstack coe cluster config <cluster ID>), the following commands are needed to configure the kubectl client:

Run kubectl config set-credentials openstackuser --auth-provider=openstack

Run kubectl config set-context --cluster=<your cluster name> --user=openstackuser openstackuser@kubernetes

Run kubectl config use-context openstackuser@kubernetes to activate the context

NOTE: Please make sure the version of kubectl is 1.8+ and make sure OS_DOMAIN_NAME is included in the rc file.

Now try kubectl get pods; you should see a response from Kubernetes based on the current user’s role.

Please refer to the k8s-keystone-auth documentation in cloud-provider-openstack for more information.
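The kubectl steps in this section can be assembled programmatically, which helps when scripting the setup for several clusters. The following is a minimal sketch, not part of Magnum: the helper names and the cluster name "my-cluster" are hypothetical, and the script only builds and prints the commands rather than running them. It also checks for OS_DOMAIN_NAME, which the note above requires in the rc file.

```python
import os
import shlex

USER = "openstackuser"
CONTEXT = "openstackuser@kubernetes"


def kubectl_keystone_commands(cluster_name):
    """Return the three kubectl invocations described in this section."""
    return [
        ["kubectl", "config", "set-credentials", USER,
         "--auth-provider=openstack"],
        ["kubectl", "config", "set-context",
         f"--cluster={cluster_name}", f"--user={USER}", CONTEXT],
        ["kubectl", "config", "use-context", CONTEXT],
    ]


def missing_rc_variables(environ, required=("OS_DOMAIN_NAME",)):
    """List required rc-file variables that are unset or empty."""
    return [name for name in required if not environ.get(name)]


if __name__ == "__main__":
    missing = missing_rc_variables(os.environ)
    if missing:
        print("Missing from rc file:", ", ".join(missing))
    # "my-cluster" is a placeholder for your own cluster name.
    for command in kubectl_keystone_commands("my-cluster"):
        print(shlex.join(command))
```

Each command is kept as an argument list so that it could be passed to subprocess.run without shell quoting issues.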

Swarm¶

A Swarm cluster is a pool of servers running the Docker daemon that is managed as a single Docker host. One or more Swarm managers accept the standard Docker API and manage this pool of servers. Magnum deploys a Swarm cluster using parameters defined in the ClusterTemplate and specified on the ‘cluster-create’ command, for example:

openstack coe cluster template create swarm-cluster-template \

--image fedora-atomic-latest \

--keypair testkey \

--external-network public \

--dns-nameserver 8.8.8.8 \

--flavor m1.small \

--docker-volume-size 5 \

--coe swarm

openstack coe cluster create swarm-cluster \

--cluster-template swarm-cluster-template \

--master-count 3 \

--node-count 8

Refer to the ClusterTemplate and Cluster sections for the full list of parameters. Following are further details relevant to Swarm:

- What runs on the servers

There are two types of servers in the Swarm cluster: managers and nodes. The Docker daemon runs on all servers. On the manager servers, the Swarm manager runs as a Docker container on port 2376, started by the systemd service swarm-manager. Etcd also runs on the manager servers for discovery of the node servers in the cluster. On the node servers, the Swarm agent runs as a Docker container on port 2375, started by the systemd service swarm-agent. On startup, the agents register themselves in etcd and the managers discover the new nodes to manage.

- Number of managers (master-count)

Specified in the cluster-create command to indicate how many servers will run as managers in the cluster. Having more than one will provide high availability. The managers will be in a load balancer pool and the load balancer virtual IP address (VIP) will serve as the Swarm API endpoint. A floating IP associated with the load balancer VIP will serve as the external Swarm API endpoint. The managers accept the standard Docker API and perform the corresponding operation on the servers in the pool. For instance, when a new container is created, the managers select one of the servers based on the scheduling strategy and schedule the container there.

- Number of nodes (node-count)

Specified in the cluster-create command to indicate how many servers will run as nodes in the cluster to host your Docker containers. These servers register themselves in etcd for discovery by the managers, and interact with the managers. The Docker daemon runs locally on each node to host users’ containers.

- Network driver (network-driver)

Specified in the ClusterTemplate to select the network driver. The supported drivers are ‘docker’ and ‘flannel’, with ‘docker’ as the default. With the ‘docker’ driver, containers are connected to the ‘docker0’ bridge on each node and are assigned a local IP address. With the ‘flannel’ driver, containers are connected to a flat overlay network and are assigned an IP address by Flannel. Refer to the Networking section for more details.

- Volume driver (volume-driver)

Specified in the ClusterTemplate to select the volume driver to provide persistent storage for containers. The supported volume driver is ‘rexray’. The default is no volume driver. When ‘rexray’ or another volume driver is deployed, you can use the Docker ‘volume’ command to create, mount, unmount, and delete volumes in containers. Cinder block storage is used as the backend to support this feature. Refer to the Storage section for more details.

- Storage driver (docker-storage-driver)

Specified in the ClusterTemplate to select the Docker storage driver. The default is ‘devicemapper’. Refer to the Storage section for more details.

- Image (image)

Specified in the ClusterTemplate to indicate the image used to boot the servers for the Swarm manager and node. The image binary is loaded in Glance with the attribute ‘os_distro = fedora-atomic’. The currently supported image is Fedora Atomic (download from Fedora).

- TLS (tls-disabled)

Transport Layer Security is enabled by default to secure the Swarm API for access by both the users and Magnum. You will need a key and a signed certificate to access the Swarm API and CLI. Magnum handles its own key and certificate when interfacing with the Swarm cluster. In development mode, TLS can be disabled. Refer to the Transport Layer Security section for details on how to create your key and have Magnum sign your certificate.

- Log into the servers

You can log into the manager and node servers with the account ‘fedora’ and the keypair specified in the ClusterTemplate.
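Since the Swarm API is TLS-protected, a Docker client has to be pointed at the endpoint together with the key and signed certificate. The sketch below shows one way to prepare that client environment. DOCKER_HOST, DOCKER_TLS_VERIFY and DOCKER_CERT_PATH are the standard Docker CLI variables, and ca.pem, cert.pem and key.pem are the file names the Docker CLI looks for; the helper names, the example address and the certificate directory are assumptions for illustration, and port 2376 is taken from the manager port described above.

```python
import os


def docker_tls_env(api_address, cert_dir, port=2376):
    """Environment variables the Docker CLI reads for a TLS endpoint."""
    return {
        "DOCKER_HOST": f"tcp://{api_address}:{port}",
        "DOCKER_TLS_VERIFY": "1",
        "DOCKER_CERT_PATH": os.path.abspath(cert_dir),
    }


def missing_cert_files(cert_dir, names=("ca.pem", "cert.pem", "key.pem")):
    """Return the expected certificate files that are absent from cert_dir."""
    return [name for name in names
            if not os.path.isfile(os.path.join(cert_dir, name))]


if __name__ == "__main__":
    # Hypothetical floating IP and certificate directory.
    env = docker_tls_env("172.24.4.5", "./swarm-certs")
    for key, value in env.items():
        print(f"export {key}={value}")
    print("Missing files:", missing_cert_files("./swarm-certs"))
```

Checking for the certificate files before invoking the Docker client gives a clearer error than a failed TLS handshake.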

In addition to the common attributes in the ClusterTemplate, you can specify the following attributes that are specific to Swarm by using the labels attribute.

- swarm_strategy

This label corresponds to the Swarm manager parameter ‘--strategy’. For more details, refer to the Swarm Strategy. Valid values for this label are:

spread

binpack

random
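To make the difference between the three strategies concrete, here is a simplified sketch of how each one would choose a server for a new container. It is an illustration only, not Swarm's scheduler: it ranks nodes purely by the number of running containers, whereas Swarm also takes available CPU and RAM into account, and the node names are hypothetical.

```python
import random


def pick_node(nodes, strategy, rng=random):
    """Pick a node name from a {name: running_container_count} mapping."""
    if not nodes:
        raise ValueError("no nodes available")
    names = sorted(nodes)  # sorted so that ties are broken deterministically
    if strategy == "spread":
        # Favor the node with the fewest containers.
        return min(names, key=lambda name: nodes[name])
    if strategy == "binpack":
        # Favor the most packed node, leaving other nodes free.
        return max(names, key=lambda name: nodes[name])
    if strategy == "random":
        return rng.choice(names)
    raise ValueError(f"unknown swarm_strategy: {strategy!r}")


if __name__ == "__main__":
    cluster = {"node-0": 3, "node-1": 1, "node-2": 5}
    for strategy in ("spread", "binpack", "random"):
        print(strategy, "->", pick_node(cluster, strategy))
```

In this model, spread balances load across servers, while binpack fills servers one at a time so that larger containers can still fit on the remaining ones.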

Mesos¶

A Mesos cluster consists of a pool of servers running as Mesos slaves, managed by a set of servers running as Mesos masters. Mesos manages the resources from the slaves but does not itself deploy containers. Instead, one or more Mesos frameworks running on the Mesos cluster accept user requests on their own endpoint, using their particular API. These frameworks then negotiate the resources with Mesos, and the containers are deployed on the servers where the resources are offered.

Magnum deploys a Mesos cluster using parameters defined in the ClusterTemplate and specified on the ‘cluster-create’ command, for example:

openstack coe cluster template create mesos-cluster-template \

--image ubuntu-mesos \

--keypair testkey \

--external-network public \

--dns-nameserver 8.8.8.8 \

--flavor m1.small \

--coe mesos

openstack coe cluster create mesos-cluster \

--cluster-template mesos-cluster-template \

--master-count 3 \

--node-count 8

Refer to the ClusterTemplate and Cluster sections for the full list of parameters. Following are further details relevant to Mesos:

- What runs on the servers

There are two types of servers in the Mesos cluster: masters and slaves. The Docker daemon runs on all servers. On the master servers, the Mesos master runs as a process on port 5050, started by the upstart service ‘mesos-master’. Zookeeper also runs on the master servers, started by the upstart service ‘zookeeper’. Zookeeper is used by the master servers to elect the leader among the masters, and by the slave servers and frameworks to determine the current leader. The framework Marathon runs as a process on port 8080 on the master servers, started by the upstart service ‘marathon’. On the slave servers, the Mesos slave runs as a process started by the upstart service ‘mesos-slave’.

- Number of masters (master-count)

Specified in the cluster-create command to indicate how many servers will run as masters in the cluster. Having more than one will provide high availability. If the load balancer option is specified, the masters will be in a load balancer pool and the load balancer virtual IP address (VIP) will serve as the Mesos API endpoint. A floating IP associated with the load balancer VIP will serve as the external Mesos API endpoint.

- Number of agents (node-count)

Specified in the cluster-create command to indicate how many servers will run as Mesos slaves in the cluster. The Docker daemon runs locally to host containers from users. The slaves report their available resources to the master and accept requests from the master to deploy tasks from the frameworks. In this case, the tasks will be to run Docker containers.

- Network driver (network-driver)

Specified in the ClusterTemplate to select the network driver. Currently ‘docker’ is the only supported driver: containers are connected to the ‘docker0’ bridge on each node and are assigned a local IP address. Refer to the Networking section for more details.

- Volume driver (volume-driver)

Specified in the ClusterTemplate to select the volume driver to provide persistent storage for containers. The supported volume driver is ‘rexray’. The default is no volume driver. When ‘rexray’ or another volume driver is deployed, you can use the Docker ‘volume’ command to create, mount, unmount, and delete volumes in containers. Cinder block storage is used as the backend to support this feature. Refer to the Storage section for more details.

- Storage driver (docker-storage-driver)

This is currently not supported for Mesos.

- Image (image)

Specified in the ClusterTemplate to indicate the image to boot the servers for the Mesos master and slave. The image binary is loaded in Glance with the attribute ‘os_distro = ubuntu’. You can download the ready-built image, or you can create the image as described below in the Building Mesos image section.

- TLS (tls-disabled)

Transport Layer Security is not yet implemented for Mesos.

- Log into the servers

You can log into the master and slave servers with the account ‘ubuntu’ and the keypair specified in the ClusterTemplate.
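Because Mesos itself does not deploy containers, user requests go to a framework such as Marathon, which the list above places on port 8080 of the master servers. The sketch below builds, without sending, a request that asks Marathon to run a Docker container. The /v2/apps path and the JSON fields follow Marathon's REST API rather than anything defined by Magnum, and the master address, application id and image are hypothetical.

```python
import json
import urllib.request


def marathon_app_request(master_address, app_id, image,
                         cpus=0.5, mem=64, instances=1):
    """Build (but do not send) a POST request that creates a Docker app."""
    app = {
        "id": app_id,
        "cpus": cpus,
        "mem": mem,  # memory in MB
        "instances": instances,
        "container": {"type": "DOCKER", "docker": {"image": image}},
    }
    return urllib.request.Request(
        url=f"http://{master_address}:8080/v2/apps",
        data=json.dumps(app).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


if __name__ == "__main__":
    # Placeholder master address; replace with your cluster's API address.
    request = marathon_app_request("10.0.0.5", "/web", "nginx")
    print(request.get_method(), request.full_url)
    print(request.data.decode("utf-8"))
```

Passing the request to urllib.request.urlopen from a host that can reach the master would submit the application; Marathon then negotiates resources with Mesos and the container is started on a slave.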

In addition to the common attributes in the ClusterTemplate, you can specify the following attributes that are specific to Mesos by using the labels attribute.

- rexray_preempt

When the volume driver ‘rexray’ is used, you can mount a data volume backed by Cinder to a host to be accessed by a container. In this case, the label ‘rexray_preempt’ can optionally be set to True or False. When set to True, any host can take control of the volume regardless of whether other hosts are using it; this in effect unmounts the volume from the current host and remounts it on the new host. When set to False, rexray ensures data safety by locking the volume before remounting. The default value is False.

- mesos_slave_isolation

This label corresponds to the Mesos parameter for slave ‘--isolation’. The isolators are needed to provide proper isolation according to the runtime configurations specified in the container image. For more details, refer to the Mesos configuration and the Mesos container image support. Valid values for this label are:

filesystem/posix

filesystem/linux

filesystem/shared

posix/cpu

posix/mem

posix/disk

cgroups/cpu

cgroups/mem

docker/runtime

namespaces/pid

- mesos_slave_image_providers

This label corresponds to the Mesos parameter for agent ‘--image_providers’, which tells the Mesos containerizer what types of container images are allowed. For more details, refer to the Mesos configuration and the Mesos container image support. Valid values are:

appc

docker

appc,docker

- mesos_slave_work_dir

This label corresponds to the Mesos parameter ‘--work_dir’ for slave. For more details, refer to the Mesos configuration. The valid value is a directory path to use as the work directory for the framework, for example:

mesos_slave_work_dir=/tmp/mesos

- mesos_slave_executor_env_variables