Open vSwitch: High availability using VRRP¶

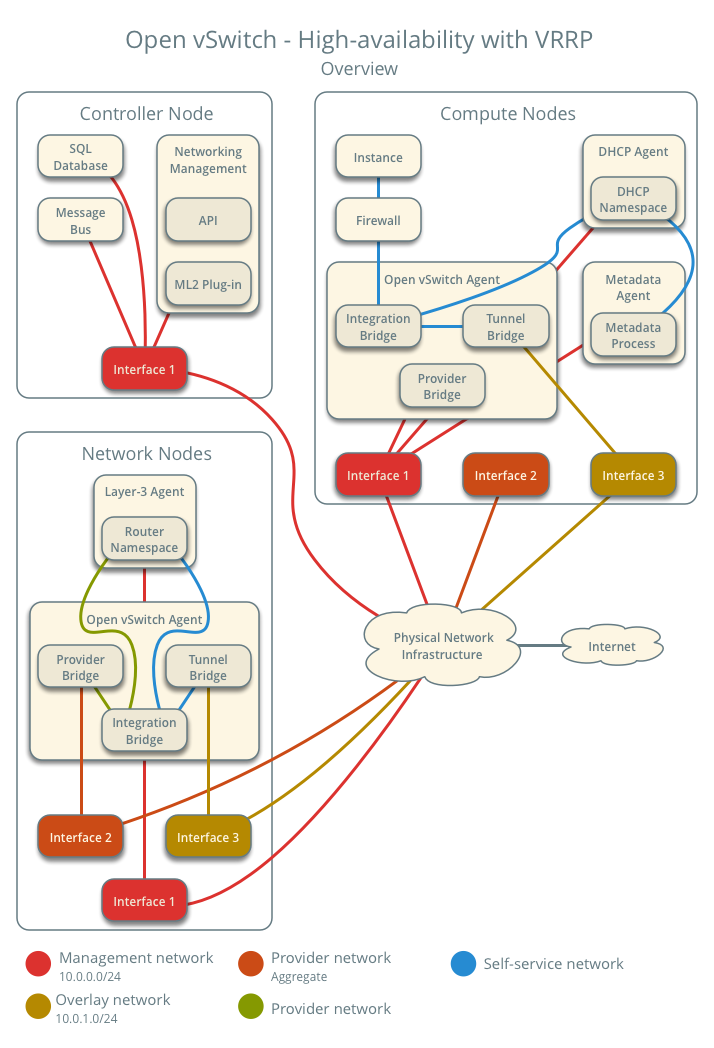

This architecture example augments the self-service deployment example with a high-availability mechanism that uses the Virtual Router Redundancy Protocol (VRRP), implemented by keepalived, to provide failover of routing for self-service networks. It requires a minimum of two network nodes because VRRP creates one master (active) instance and at least one backup instance of each router.

During normal operation, keepalived on the master router periodically transmits heartbeat packets over a hidden network that connects all VRRP routers for a particular project. Each project with VRRP routers uses a separate hidden network. By default this network uses the first value in the tenant_network_types option in the ml2_conf.ini file. For additional control, you can specify the self-service network type and physical network name for the hidden network using the l3_ha_network_type and l3_ha_network_name options in the neutron.conf file.
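The rule for choosing the hidden network's type can be sketched as a shell function. resolve_ha_network_type is a hypothetical helper that receives the two option values as plain strings. The Networking service applies this rule internally and does not expose such a function.

```bash
#!/usr/bin/env bash
# Sketch of the documented rule for the hidden VRRP (HA) network type.
# resolve_ha_network_type is hypothetical, not a Networking service function.
#   $1 = value of l3_ha_network_type in neutron.conf (may be empty)
#   $2 = value of tenant_network_types in ml2_conf.ini (comma-separated)
resolve_ha_network_type() {
  local ha_type="$1" tenant_types="$2" first
  if [ -n "$ha_type" ]; then
    # An explicit l3_ha_network_type takes precedence.
    printf '%s\n' "$ha_type"
    return
  fi
  # Otherwise use the first entry of tenant_network_types.
  first=${tenant_types%%,*}
  # Trim leading and trailing whitespace around that entry.
  first=${first#"${first%%[![:space:]]*}"}
  first=${first%"${first##*[![:space:]]}"}
  printf '%s\n' "$first"
}
```

For example, `resolve_ha_network_type "" "vxlan,vlan"` prints `vxlan`, while `resolve_ha_network_type vlan vxlan` prints `vlan`.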

If keepalived on the backup router stops receiving heartbeat packets, it assumes failure of the master router and promotes the backup router to master router by configuring IP addresses on the interfaces in the qrouter namespace. In environments with more than one backup router, keepalived on the backup router with the next highest priority promotes that backup router to master router.
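This guide does not specify VRRP timers, and those timers determine how long a backup goes without heartbeat packets before it acts. Assuming keepalived uses the VRRP version 2 timers defined in RFC 3768, a backup declares the master down after Master_Down_Interval = 3 * Advertisement_Interval + Skew_Time, where Skew_Time = (256 - Priority) / 256 seconds. The hypothetical master_down_interval function below evaluates that formula for example inputs. It does not read any keepalived or Networking service setting.

```bash
#!/usr/bin/env bash
# Sketch: VRRPv2 master-down interval per RFC 3768 (assumed timer model).
#   Skew_Time            = (256 - Priority) / 256            seconds
#   Master_Down_Interval = 3 * Advertisement_Interval + Skew_Time
# master_down_interval is hypothetical; keepalived computes this internally.
#   $1 = advertisement interval in seconds
#   $2 = VRRP priority of the backup router (1-254)
master_down_interval() {
  awk -v adv="$1" -v prio="$2" \
    'BEGIN { printf "%.3f\n", 3 * adv + (256 - prio) / 256 }'
}
```

For example, `master_down_interval 1 100` prints `3.609`. A shorter advertisement interval speeds up failure detection, but it also sends more heartbeat traffic.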

Note

This high-availability mechanism configures VRRP using the same priority for all routers. Therefore, VRRP promotes the backup router with the highest IP address to the master router.
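The following sketch shows the tie-break described in the note. elect_vrrp_master is a hypothetical helper that models only the address comparison between routers advertising the same priority. keepalived performs the actual election, and other keepalived settings can also influence which instance ends up as master.

```bash
#!/usr/bin/env bash
# Sketch of the equal-priority VRRP tie-break: the numerically highest
# primary IPv4 address wins. elect_vrrp_master is hypothetical.
# Arguments: the primary IPv4 address of each router on the HA network.
elect_vrrp_master() {
  # Sort the four octets numerically (not lexically), then keep the last.
  printf '%s\n' "$@" |
    sort -t . -k 1,1n -k 2,2n -k 3,3n -k 4,4n |
    tail -n 1
}
```

The numeric per-octet sort matters. A plain string sort would rank `169.254.192.9` above `169.254.192.10`.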

Interruption of VRRP heartbeat traffic between network nodes, typically due to a network interface or physical network infrastructure failure, triggers a failover. Restarting or failure of the layer-3 agent does not trigger a failover, provided that keepalived continues to operate.

Consider the following attributes of this high-availability mechanism to determine practicality in your environment:

- Instance network traffic on self-service networks using a particular router only traverses the master instance of that router. Thus, resource limitations of a particular network node can impact all master instances of routers on that network node without triggering failover to another network node. However, you can configure the scheduler to distribute the master instance of each router uniformly across a pool of network nodes to reduce the chance of resource contention on any particular network node.

- Only supports self-service networks using a router. Provider networks operate at layer-2 and rely on physical network infrastructure for redundancy.

- For instances with a floating IPv4 address, maintains state of network connections during failover as a side effect of 1:1 static NAT. The mechanism does not actually implement connection tracking.

For production deployments, we recommend at least three network nodes with sufficient resources to handle network traffic for the entire environment if one network node fails. Also, the remaining two nodes can continue to provide redundancy.
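As a rough sizing aid, assume master router instances (and therefore traffic) are spread evenly across the surviving nodes. Under that assumption, each network node needs capacity for the total traffic divided by the number of nodes minus one. per_node_capacity is a hypothetical helper, and the traffic figures in its usage are illustrative only.

```bash
#!/usr/bin/env bash
# Hypothetical sizing helper: capacity each network node needs so that the
# surviving nodes can carry all traffic after one node fails, assuming an
# even spread of master routers across the survivors.
#   $1 = total peak traffic for the environment (any unit, e.g. Gbit/s)
#   $2 = number of network nodes (at least 2)
per_node_capacity() {
  if [ "$2" -lt 2 ]; then
    echo "per_node_capacity: need at least 2 network nodes" >&2
    return 1
  fi
  awk -v total="$1" -v nodes="$2" 'BEGIN { printf "%.2f\n", total / (nodes - 1) }'
}
```

For example, `per_node_capacity 20 3` prints `10.00`. With three nodes and 20 Gbit/s of peak traffic, each node needs about 10 Gbit/s so that the two survivors can absorb the load. Uneven distribution of master routers raises this figure.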

Prerequisites¶

Add one network node with the following components:

- Three network interfaces: management, provider, and overlay.

- OpenStack Networking layer-2 agent, layer-3 agent, and any dependencies.

Note

You can keep the DHCP and metadata agents on each compute node or move them to the network nodes.

Architecture¶

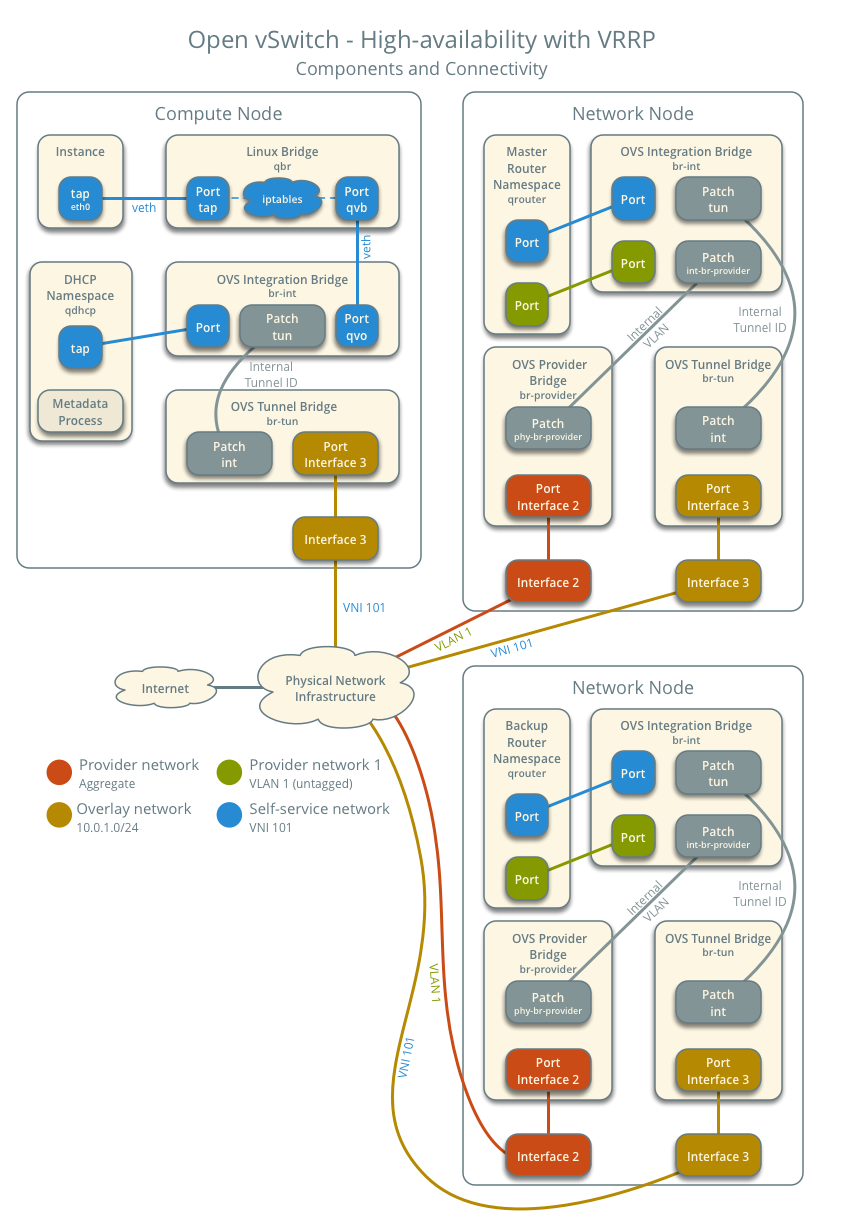

The following figure shows components and connectivity for one self-service network and one untagged (flat) network. The master router resides on network node 1. In this particular case, the instance resides on the same compute node as the DHCP agent for the network. If the DHCP agent resides on another compute node, the latter only contains a DHCP namespace and Linux bridge with a port on the overlay physical network interface.

Example configuration¶

Use the following example configuration as a template to add support for high-availability using VRRP to an existing operational environment that supports self-service networks.

Controller node¶

In the neutron.conf file, enable VRRP:

```ini
[DEFAULT]
l3_ha = True
```
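After editing the file, you can confirm that the option is set. ini_get is a hypothetical helper written for this sketch, and it understands only simple key = value lines. The path /etc/neutron/neutron.conf in the usage note is the usual location on many distributions, but verify it for your installation.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print the value of KEY from [SECTION] of an INI file
# and return non-zero if the key is absent. Only simple "key = value" lines
# are understood; commented-out keys never match.
#   ini_get FILE SECTION KEY
ini_get() {
  awk -v section="[$2]" -v key="$3" '
    /^[ \t]*\[/ {
      s = $0
      gsub(/[ \t]/, "", s)
      in_section = (s == section)
      next
    }
    in_section && index($0, "=") > 0 {
      eq = index($0, "=")
      k = substr($0, 1, eq - 1)
      v = substr($0, eq + 1)
      sub(/^[ \t]+/, "", k); sub(/[ \t]+$/, "", k)
      sub(/^[ \t]+/, "", v); sub(/[ \t]+$/, "", v)
      if (k == key) { print v; found = 1; exit }
    }
    END { exit !found }
  ' "$1"
}
```

For example, `ini_get /etc/neutron/neutron.conf DEFAULT l3_ha` should print `True`.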

Restart the following services:

- Server

Network node 1¶

No changes.

Network node 2¶

Install the Networking service OVS layer-2 agent and layer-3 agent.

Install OVS.

In the neutron.conf file, configure common options:

```ini
[DEFAULT]
core_plugin = ml2
auth_strategy = keystone
rpc_backend = rabbit
notify_nova_on_port_status_changes = true
notify_nova_on_port_data_changes = true

[database]
...

[keystone_authtoken]
...

[oslo_messaging_rabbit]
...

[nova]
...
```

See the Installation Guide for your OpenStack release to obtain the appropriate configuration for the [database], [keystone_authtoken], [oslo_messaging_rabbit], and [nova] sections.

Start the following services:

- OVS

Create the OVS provider bridge br-provider:

```console
$ ovs-vsctl add-br br-provider
```

In the openvswitch_agent.ini file, configure the layer-2 agent.

```ini
[ovs]
bridge_mappings = provider:br-provider
local_ip = OVERLAY_INTERFACE_IP_ADDRESS

[agent]
tunnel_types = vxlan
l2_population = true

[securitygroup]
firewall_driver = iptables_hybrid
```

Replace OVERLAY_INTERFACE_IP_ADDRESS with the IP address of the interface that handles VXLAN overlays for self-service networks.
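Before you start the agent, you can check that every bridge named in bridge_mappings exists. mapped_bridges is a hypothetical helper that extracts the bridge names from a bridge_mappings value. The existence check in the usage note relies on `ovs-vsctl br-exists`, which returns a non-zero status when the bridge is missing.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print the OVS bridge names from a bridge_mappings
# value of comma-separated physnet:bridge pairs, one per line.
#   $1 = bridge_mappings value, e.g. "provider:br-provider"
mapped_bridges() {
  local -a pairs
  local pair
  IFS=',' read -ra pairs <<< "$1"
  for pair in "${pairs[@]}"; do
    pair="${pair//[[:space:]]/}"    # drop spaces around the entry
    if [ -n "$pair" ]; then
      printf '%s\n' "${pair#*:}"    # keep the part after "physnet:"
    fi
  done
}
```

Usage: `for br in $(mapped_bridges "provider:br-provider"); do ovs-vsctl br-exists "$br" || echo "missing bridge: $br"; done`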

In the l3_agent.ini file, configure the layer-3 agent.

```ini
[DEFAULT]
interface_driver = openvswitch
external_network_bridge =
```

Note

The external_network_bridge option intentionally contains no value.

Start the following services:

- Open vSwitch agent

- Layer-3 agent

Compute nodes¶

No changes.

Verify service operation¶

Source the administrative project credentials.

Verify presence and operation of the agents.

```console
$ neutron agent-list
+--------------------------------------+--------------------+----------+-------------------+-------+----------------+---------------------------+
| id                                   | agent_type         | host     | availability_zone | alive | admin_state_up | binary                    |
+--------------------------------------+--------------------+----------+-------------------+-------+----------------+---------------------------+
| 1236bbcb-e0ba-48a9-80fc-81202ca4fa51 | Metadata agent     | compute2 |                   | :-)   | True           | neutron-metadata-agent    |
| 457d6898-b373-4bb3-b41f-59345dcfb5c5 | Open vSwitch agent | compute2 |                   | :-)   | True           | neutron-openvswitch-agent |
| 71f15e84-bc47-4c2a-b9fb-317840b2d753 | DHCP agent         | compute2 | nova              | :-)   | True           | neutron-dhcp-agent        |
| 8805b962-de95-4e40-bdc2-7a0add7521e8 | L3 agent           | network1 | nova              | :-)   | True           | neutron-l3-agent          |
| a33cac5a-0266-48f6-9cac-4cef4f8b0358 | Open vSwitch agent | network1 |                   | :-)   | True           | neutron-openvswitch-agent |
| a6c69690-e7f7-4e56-9831-1282753e5007 | Metadata agent     | compute1 |                   | :-)   | True           | neutron-metadata-agent    |
| af11f22f-a9f4-404f-9fd8-cd7ad55c0f68 | DHCP agent         | compute1 | nova              | :-)   | True           | neutron-dhcp-agent        |
| bcfc977b-ec0e-4ba9-be62-9489b4b0e6f1 | Open vSwitch agent | compute1 |                   | :-)   | True           | neutron-openvswitch-agent |
| 7f00d759-f2c9-494a-9fbf-fd9118104d03 | Open vSwitch agent | network2 |                   | :-)   | True           | neutron-openvswitch-agent |
| b28d8818-9e32-4888-930b-29addbdd2ef9 | L3 agent           | network2 | nova              | :-)   | True           | neutron-l3-agent          |
+--------------------------------------+--------------------+----------+-------------------+-------+----------------+---------------------------+
```
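In this output, the L3 agents on network1 and network2 should both report `:-)` in the alive column. check_l3_agents is a hypothetical helper that checks this from the table output. It assumes the column order shown above.

```bash
#!/usr/bin/env bash
# Hypothetical check over "neutron agent-list" table output read on stdin:
# print the host of each L3 agent, and return non-zero if no L3 agent is
# listed or if any of them does not report ":-)" in the alive column.
check_l3_agents() {
  awk -F'|' '
    $3 ~ /L3 agent/ {
      host = $4; alive = $6
      gsub(/[ \t]/, "", host)
      gsub(/[ \t]/, "", alive)
      print host
      total++
      if (alive != ":-)") dead++
    }
    END { exit (total == 0 || dead > 0) }'
}
```

Usage: `neutron agent-list | check_l3_agents`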

Create initial networks¶

Similar to the self-service deployment example, this configuration supports multiple VXLAN self-service networks. After you enable high availability, all additional routers use VRRP. The following procedure creates an additional self-service network and router. The Networking service also supports adding high availability to existing routers. However, the procedure requires administratively disabling and enabling each router, which temporarily interrupts network connectivity for self-service networks with interfaces on that router.
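The following sketch converts an existing router. It assumes a python-openstackclient release whose `openstack router set` command accepts `--disable`, `--ha`, and `--enable`; check `openstack router set --help` for your release. The sequence is: disable the router, set the ha attribute, then enable it again. The run wrapper and convert_router_to_ha are hypothetical, and DRY_RUN=1 prints the commands instead of executing them.

```bash
#!/usr/bin/env bash
# Sketch: convert an existing router to a VRRP (HA) router. The router is
# disabled while its ha attribute changes, which interrupts connectivity
# for the self-service networks attached to it.
# Set DRY_RUN=1 to print the commands instead of executing them.
run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then
    printf '%s\n' "$*"
  else
    "$@"
  fi
}

# $1 = router name or ID, e.g. router1 from the self-service example
convert_router_to_ha() {
  run openstack router set --disable "$1" &&
    run openstack router set --ha "$1" &&
    run openstack router set --enable "$1"
}
```

Running `DRY_RUN=1 convert_router_to_ha router1` first lets you review the three commands before you interrupt any traffic.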

Source the credentials of a regular (non-administrative) project.

Create a self-service network.

```console
$ neutron net-create selfservice2
Created a new network:
+-------------------------+--------------------------------------+
| Field                   | Value                                |
+-------------------------+--------------------------------------+
| admin_state_up          | True                                 |
| availability_zone_hints |                                      |
| availability_zones      |                                      |
| description             |                                      |
| id                      | 7ebc353c-6c8f-461f-8ada-01b9f14beb18 |
| ipv4_address_scope      |                                      |
| ipv6_address_scope      |                                      |
| mtu                     | 1450                                 |
| name                    | selfservice2                         |
| port_security_enabled   | True                                 |
| router:external         | False                                |
| shared                  | False                                |
| status                  | ACTIVE                               |
| subnets                 |                                      |
| tags                    |                                      |
| tenant_id               | f986edf55ae945e2bef3cb4bfd589928     |
+-------------------------+--------------------------------------+
```

Create an IPv4 subnet on the self-service network.

```console
$ neutron subnet-create --name selfservice2-v4 --ip-version 4 \
  --dns-nameserver 8.8.4.4 selfservice2 192.168.2.0/24
Created a new subnet:
+-------------------+--------------------------------------------------+
| Field             | Value                                            |
+-------------------+--------------------------------------------------+
| allocation_pools  | {"start": "192.168.2.2", "end": "192.168.2.254"} |
| cidr              | 192.168.2.0/24                                   |
| description       |                                                  |
| dns_nameservers   | 8.8.4.4                                          |
| enable_dhcp       | True                                             |
| gateway_ip        | 192.168.2.1                                      |
| host_routes       |                                                  |
| id                | 12a41804-18bf-4cec-bde8-174cbdbf1573             |
| ip_version        | 4                                                |
| ipv6_address_mode |                                                  |
| ipv6_ra_mode      |                                                  |
| name              | selfservice2-v4                                  |
| network_id        | 7ebc353c-6c8f-461f-8ada-01b9f14beb18             |
| subnetpool_id     |                                                  |
| tenant_id         | f986edf55ae945e2bef3cb4bfd589928                 |
+-------------------+--------------------------------------------------+
```

Create an IPv6 subnet on the self-service network.

```console
$ neutron subnet-create --name selfservice2-v6 --ip-version 6 \
  --ipv6-address-mode slaac --ipv6-ra-mode slaac \
  --dns-nameserver 2001:4860:4860::8844 selfservice2 \
  fd00:192:168:2::/64
Created a new subnet:
+-------------------+-----------------------------------------------------------------------------+
| Field             | Value                                                                       |
+-------------------+-----------------------------------------------------------------------------+
| allocation_pools  | {"start": "fd00:192:168:2::2", "end": "fd00:192:168:2:ffff:ffff:ffff:ffff"} |
| cidr              | fd00:192:168:2::/64                                                         |
| description       |                                                                             |
| dns_nameservers   | 2001:4860:4860::8844                                                        |
| enable_dhcp       | True                                                                        |
| gateway_ip        | fd00:192:168:2::1                                                           |
| host_routes       |                                                                             |
| id                | b0f122fe-0bf9-4f31-975d-a47e58aa88e3                                        |
| ip_version        | 6                                                                           |
| ipv6_address_mode | slaac                                                                       |
| ipv6_ra_mode      | slaac                                                                       |
| name              | selfservice2-v6                                                             |
| network_id        | 7ebc353c-6c8f-461f-8ada-01b9f14beb18                                        |
| subnetpool_id     |                                                                             |
| tenant_id         | f986edf55ae945e2bef3cb4bfd589928                                            |
+-------------------+-----------------------------------------------------------------------------+
```

Create a router.

```console
$ neutron router-create router2
Created a new router:
+-------------------------+--------------------------------------+
| Field                   | Value                                |
+-------------------------+--------------------------------------+
| admin_state_up          | True                                 |
| availability_zone_hints |                                      |
| availability_zones      |                                      |
| description             |                                      |
| external_gateway_info   |                                      |
| id                      | b6206312-878e-497c-8ef7-eb384f8add96 |
| name                    | router2                              |
| routes                  |                                      |
| status                  | ACTIVE                               |
| tenant_id               | f986edf55ae945e2bef3cb4bfd589928     |
+-------------------------+--------------------------------------+
```

Add the IPv4 and IPv6 subnets as interfaces on the router.

```console
$ neutron router-interface-add router2 selfservice2-v4
Added interface da3504ad-ba70-4b11-8562-2e6938690878 to router router2.
$ neutron router-interface-add router2 selfservice2-v6
Added interface 442e36eb-fce3-4cb5-b179-4be6ace595f0 to router router2.
```

Add the provider network as a gateway on the router.

```console
$ neutron router-gateway-set router2 provider1
Set gateway for router router2
```

Verify network operation¶

Source the administrative project credentials.

Verify creation of the internal high-availability network that handles VRRP heartbeat traffic.

```console
$ neutron net-list
+--------------------------------------+----------------------------------------------------+----------------------------------------------------------+
| id                                   | name                                               | subnets                                                  |
+--------------------------------------+----------------------------------------------------+----------------------------------------------------------+
| 1b8519c1-59c4-415c-9da2-a67d53c68455 | HA network tenant f986edf55ae945e2bef3cb4bfd589928 | 6843314a-1e76-4cc9-94f5-c64b7a39364a 169.254.192.0/18    |
+--------------------------------------+----------------------------------------------------+----------------------------------------------------------+
```
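The HA network is named after the project that owns the routers, and its subnet uses the link-local range 169.254.192.0/18. The ha- interfaces in the router namespaces take their addresses from this range (169.254.192.1 and 169.254.192.2 in the outputs that follow). ha_networks is a hypothetical helper that extracts the project ID and CIDR from the table. It assumes the column order shown above.

```bash
#!/usr/bin/env bash
# Hypothetical helper: from "neutron net-list" table output on stdin, print
# the project ID and subnet CIDR of each "HA network tenant ..." network.
ha_networks() {
  awk -F'|' '$3 ~ /^[ \t]*HA network tenant / {
    split($3, name, " ")        # name[4] is the project (tenant) ID
    n = split($4, subnet, " ")  # last word of the subnets column is the CIDR
    print name[4], subnet[n]
  }'
}
```

Usage: `neutron net-list | ha_networks`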

On each network node, verify creation of a qrouter namespace with the same ID.

Network node 1:

```console
# ip netns
qrouter-b6206312-878e-497c-8ef7-eb384f8add96
```

Network node 2:

```console
# ip netns
qrouter-b6206312-878e-497c-8ef7-eb384f8add96
```

Note

The namespace for router 1 from Open vSwitch: Self-service networks should only appear on network node 1 because that router was created before VRRP was enabled.

On each network node, show the IP addresses of the interfaces in the qrouter namespace. Except for the VRRP interface, only the namespace belonging to the master router instance contains IP addresses on its interfaces.

Network node 1:

```console
# ip netns exec qrouter-b6206312-878e-497c-8ef7-eb384f8add96 ip addr show
1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
    inet 127.0.0.1/8 scope host lo
       valid_lft forever preferred_lft forever
    inet6 ::1/128 scope host
       valid_lft forever preferred_lft forever
2: ha-eb820380-40@if21: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc noqueue state UP group default qlen 1000
    link/ether fa:16:3e:78:ba:99 brd ff:ff:ff:ff:ff:ff link-netnsid 0
    inet 169.254.192.1/18 brd 169.254.255.255 scope global ha-eb820380-40
       valid_lft forever preferred_lft forever
    inet 169.254.0.1/24 scope global ha-eb820380-40
       valid_lft forever preferred_lft forever
    inet6 fe80::f816:3eff:fe78:ba99/64 scope link
       valid_lft forever preferred_lft forever
3: qr-da3504ad-ba@if24: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc noqueue state UP group default qlen 1000
    link/ether fa:16:3e:dc:8e:a8 brd ff:ff:ff:ff:ff:ff link-netnsid 0
    inet 192.168.2.1/24 scope global qr-da3504ad-ba
       valid_lft forever preferred_lft forever
    inet6 fe80::f816:3eff:fedc:8ea8/64 scope link
       valid_lft forever preferred_lft forever
4: qr-442e36eb-fc@if27: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc noqueue state UP group default qlen 1000
    link/ether fa:16:3e:ee:c8:41 brd ff:ff:ff:ff:ff:ff link-netnsid 0
    inet6 fd00:192:168:2::1/64 scope global nodad
       valid_lft forever preferred_lft forever
    inet6 fe80::f816:3eff:feee:c841/64 scope link
       valid_lft forever preferred_lft forever
5: qg-33fedbc5-43@if28: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default qlen 1000
    link/ether fa:16:3e:03:1a:f6 brd ff:ff:ff:ff:ff:ff link-netnsid 0
    inet 203.0.113.21/24 scope global qg-33fedbc5-43
       valid_lft forever preferred_lft forever
    inet6 fd00:203:0:113::21/64 scope global nodad
       valid_lft forever preferred_lft forever
    inet6 fe80::f816:3eff:fe03:1af6/64 scope link
       valid_lft forever preferred_lft forever
```

Network node 2:

```console
# ip netns exec qrouter-b6206312-878e-497c-8ef7-eb384f8add96 ip addr show
1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
    inet 127.0.0.1/8 scope host lo
       valid_lft forever preferred_lft forever
    inet6 ::1/128 scope host
       valid_lft forever preferred_lft forever
2: ha-7a7ce184-36@if8: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc noqueue state UP group default qlen 1000
    link/ether fa:16:3e:16:59:84 brd ff:ff:ff:ff:ff:ff link-netnsid 0
    inet 169.254.192.2/18 brd 169.254.255.255 scope global ha-7a7ce184-36
       valid_lft forever preferred_lft forever
    inet6 fe80::f816:3eff:fe16:5984/64 scope link
       valid_lft forever preferred_lft forever
3: qr-da3504ad-ba@if11: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc noqueue state UP group default qlen 1000
    link/ether fa:16:3e:dc:8e:a8 brd ff:ff:ff:ff:ff:ff link-netnsid 0
4: qr-442e36eb-fc@if14: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc noqueue state UP group default qlen 1000
5: qg-33fedbc5-43@if15: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default qlen 1000
    link/ether fa:16:3e:03:1a:f6 brd ff:ff:ff:ff:ff:ff link-netnsid 0
```

Note

The master router may reside on network node 2.
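To script this check, you can use the one-line output of `ip -o addr show`. That output prints one address per line, with the interface name in the second field and the address family in the third. is_master_instance is a hypothetical helper built on that assumption. It succeeds when any qr- or qg- interface carries a global-scope address, which, per the outputs above, holds only in the master instance.

```bash
#!/usr/bin/env bash
# Hypothetical check: read "ip -o addr show" output for a qrouter namespace
# on stdin; succeed if any qr-/qg- interface has a global-scope address
# (only the master instance has one), otherwise return non-zero.
is_master_instance() {
  awk '$2 ~ /^q[rg]-/ && ($3 == "inet" || $3 == "inet6") && / scope global / { found = 1 }
       END { exit !found }'
}
```

Usage: `ip netns exec qrouter-b6206312-878e-497c-8ef7-eb384f8add96 ip -o addr show | is_master_instance && echo master || echo backup`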

Launch an instance with an interface on the additional self-service network, for example, a CirrOS image using flavor ID 1.

```console
$ openstack server create --flavor 1 --image cirros \
  --nic net-id=NETWORK_ID selfservice-instance2
```

Replace NETWORK_ID with the ID of the additional self-service network.

Determine the IPv4 and IPv6 addresses of the instance.

```console
$ openstack server list
+--------------------------------------+-----------------------+--------+---------------------------------------------------------------------------+
| ID                                   | Name                  | Status | Networks                                                                  |
+--------------------------------------+-----------------------+--------+---------------------------------------------------------------------------+
| bde64b00-77ae-41b9-b19a-cd8e378d9f8b | selfservice-instance2 | ACTIVE | selfservice2=fd00:192:168:2:f816:3eff:fe71:e93e, 192.168.2.4              |
+--------------------------------------+-----------------------+--------+---------------------------------------------------------------------------+
```
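The failover test needs both addresses. instance_addresses is a hypothetical helper that extracts them from the table above. It assumes the column order shown and an instance attached to a single network.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print the addresses of one instance, one per line,
# from "openstack server list" table output read on stdin. Assumes a
# single network per instance (several networks would appear separated
# by ";", which this sketch does not handle).
#   $1 = instance name
instance_addresses() {
  awk -F'|' -v name="$1" '{
    n = $3
    gsub(/[ \t]/, "", n)
    if (n != name) next
    nets = $5
    sub(/^[^=]*=/, "", nets)          # drop the "selfservice2=" prefix
    count = split(nets, addrs, ",")
    for (i = 1; i <= count; i++) {
      a = addrs[i]
      gsub(/[ \t]/, "", a)
      print a
    }
  }'
}
```

Usage: `openstack server list | instance_addresses selfservice-instance2`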

Create a floating IPv4 address on the provider network.

```console
$ openstack ip floating create provider1
+-------------+--------------------------------------+
| Field       | Value                                |
+-------------+--------------------------------------+
| fixed_ip    | None                                 |
| id          | 0174056a-fa56-4403-b1ea-b5151a31191f |
| instance_id | None                                 |
| ip          | 203.0.113.17                         |
| pool        | provider1                            |
+-------------+--------------------------------------+
```

Associate the floating IPv4 address with the instance.

```console
$ openstack ip floating add 203.0.113.17 selfservice-instance2
```

Note

This command provides no output.

Verify failover operation¶

1. Begin a continuous ping of both the floating IPv4 address and the IPv6 address of the instance. While performing the next three steps, you should see minimal, if any, interruption of connectivity to the instance.

2. On the network node with the master router, administratively disable the overlay network interface.

3. On the other network node, verify promotion of the backup router to master router by noting the addition of IP addresses to the interfaces in the qrouter namespace.

4. On the original network node from step 2, administratively enable the overlay network interface. Note that the master router remains on the network node from step 3.
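For the continuous ping, run `ping 203.0.113.17` and a ping of `fd00:192:168:2:f816:3eff:fe71:e93e` in separate terminals. Depending on your distribution, the IPv6 ping is `ping6` or `ping -6`. The remaining steps can be sketched as the helpers below, which use standard iproute2 commands. The helper names, the interface name `eth2`, and the DRY_RUN switch (which prints the commands instead of running them) are hypothetical.

```bash
#!/usr/bin/env bash
# Sketch of the failover test steps that follow the ping.
# Helper names are hypothetical; arguments are supplied by you.
# Set DRY_RUN=1 to print the commands instead of executing them.
run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then
    printf '%s\n' "$*"
  else
    "$@"
  fi
}

# On the node that hosts the master router: administratively disable, and
# later re-enable, the overlay interface. $1 = overlay interface name.
disable_overlay() { run ip link set dev "$1" down; }
enable_overlay()  { run ip link set dev "$1" up; }

# On the other node: list addresses in the router namespace, one per line.
# $1 = router ID, e.g. b6206312-878e-497c-8ef7-eb384f8add96
show_router_addresses() { run ip netns exec "qrouter-$1" ip -o addr show; }
```

For example, `DRY_RUN=1 disable_overlay eth2` prints `ip link set dev eth2 down` without touching the interface.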

Network traffic flow¶

This high-availability mechanism simply augments Open vSwitch: Self-service networks with failover of layer-3 services to another router if the master router fails. Thus, you can reference Self-service network traffic flow for normal operation.

Except where otherwise noted, this document is licensed under Creative Commons Attribution 3.0 License. See all OpenStack Legal Documents.