Hitachi NAS (HNAS) driver

The HNAS driver provides NFS Shared File Systems to OpenStack.

Requirements

- Hitachi NAS Platform Models 3080, 3090, 4040, 4060, 4080, and 4100.

- HNAS/SMU software version is 12.2 or higher.

- HNAS configuration and management utilities to create a storage pool (span) and an EVS:

  - GUI (SMU).

  - SSC CLI.

Driver options

This table contains the configuration options specific to the share driver.

| Configuration option = Default value | Description |
|---|---|
| [DEFAULT] | |
| hitachi_hnas_allow_cifs_snapshot_while_mounted = False | (Boolean) By default, CIFS snapshots cannot be taken while clients are connected to the share, because a consistent point-in-time replica cannot be guaranteed for all files. Enabling this option might cause inconsistent snapshots on CIFS shares. |
| hitachi_hnas_cluster_admin_ip0 = None | (String) The IP of the cluster's admin node. Only set in HNAS multinode clusters. |
| hitachi_hnas_driver_helper = manila.share.drivers.hitachi.hnas.ssh.HNASSSHBackend | (String) Python class to be used for the driver helper. |
| hitachi_hnas_evs_id = None | (Integer) Specify which EVS this backend is assigned to. |
| hitachi_hnas_evs_ip = None | (String) Specify the IP for mounting shares. |
| hitachi_hnas_file_system_name = None | (String) Specify the file-system name for creating shares. |
| hitachi_hnas_ip = None | (String) HNAS management interface IP for communication between the Manila controller and HNAS. |
| hitachi_hnas_password = None | (String) HNAS user password. Required only if a private key is not provided. |
| hitachi_hnas_ssh_private_key = None | (String) RSA/DSA private key value used to connect to HNAS. Required only if a password is not provided. |
| hitachi_hnas_stalled_job_timeout = 30 | (Integer) The time (in seconds) to wait for stalled HNAS jobs before aborting. |
| hitachi_hnas_user = None | (String) HNAS username (Base64 string) used to perform tasks such as creating file systems and network interfaces. |
| [hnas1] | |
| share_backend_name = None | (String) The backend name for a given driver implementation. |
| share_driver = manila.share.drivers.generic.GenericShareDriver | (String) Driver to use for share creation. |
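
The table states that either a password or a private key must be provided. The following is a minimal sketch of a pre-flight check for one back-end section, not part of the driver. It uses Python's standard `configparser` as a stand-in for the service's own configuration loader; the helper name `check_hnas_section`, the sample values, and the choice of which options to treat as mandatory are assumptions made for illustration.

```python
import configparser

# Sample section using the option names from the table above (values are examples).
SAMPLE = """
[hnas1]
share_backend_name = HNAS1
hitachi_hnas_ip = 172.24.44.15
hitachi_hnas_user = supervisor
hitachi_hnas_password = supervisor
hitachi_hnas_evs_id = 1
hitachi_hnas_evs_ip = 10.0.1.20
hitachi_hnas_file_system_name = FS-Manila
"""

# Options whose default is None in the table; treated as mandatory here (assumption).
REQUIRED = (
    "hitachi_hnas_ip",
    "hitachi_hnas_user",
    "hitachi_hnas_evs_id",
    "hitachi_hnas_evs_ip",
    "hitachi_hnas_file_system_name",
)


def check_hnas_section(conf, section):
    """Return a list of problems found in one back-end section (hypothetical helper)."""
    problems = [f"missing {name}" for name in REQUIRED
                if not conf.has_option(section, name)]
    has_password = conf.has_option(section, "hitachi_hnas_password")
    has_key = conf.has_option(section, "hitachi_hnas_ssh_private_key")
    if not (has_password or has_key):
        problems.append("set hitachi_hnas_password or hitachi_hnas_ssh_private_key")
    return problems


conf = configparser.ConfigParser()
conf.read_string(SAMPLE)
print(check_hnas_section(conf, "hnas1"))  # an empty list means no problems were found
```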

Pre-configuration on OpenStack deployment

Install the OpenStack environment with manila. See the OpenStack installation guide.

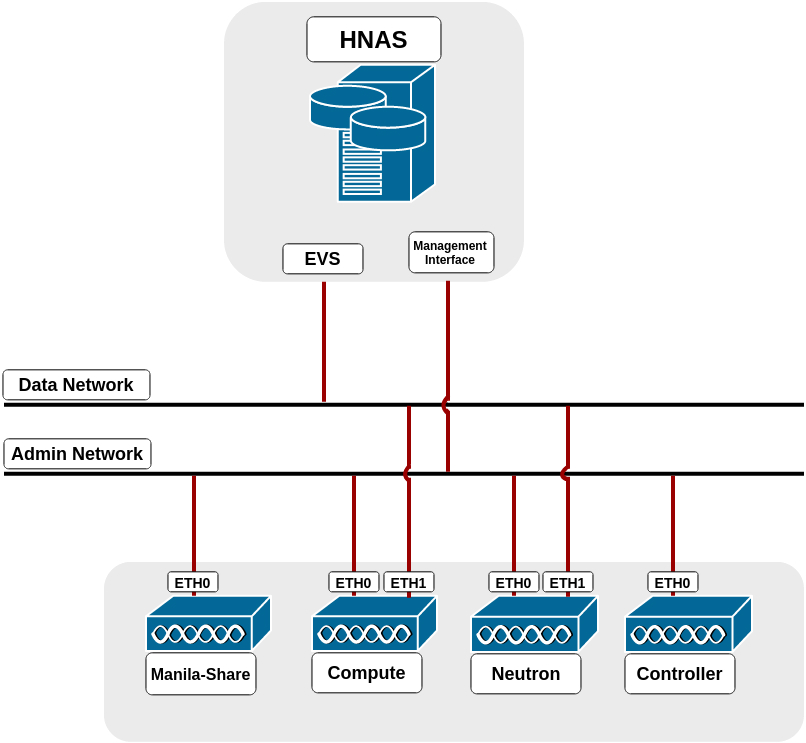

Configure OpenStack networking so that it can reach the HNAS management interface and the HNAS EVS data interface.

Note

In the driver mode used by the HNAS driver (DHSS = False), the driver does not handle network configuration; it is up to the administrator to configure it.

Configure the network of the manila-share node to reach the HNAS management interface through the admin network.

Note

The manila-share node only requires the HNAS EVS data interface if you plan to use share migration.

Configure the network of the Compute and Networking nodes to reach HNAS EVS data interface through the data network.
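
Once the networks are in place, reachability can be spot-checked from each node. The sketch below is one possible check using only the Python standard library. The helper name `can_connect` is hypothetical, and the ports are assumptions: 22 for the management interface (the default driver helper is an SSH back end) and 2049 for NFS on the EVS data interface.

```python
import socket


def can_connect(host, port, timeout=3.0):
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, unreachable networks, and timeouts.
        return False
```

For example, `can_connect("172.24.44.15", 22)` run on the manila-share node would test the management path, and `can_connect("10.0.1.20", 2049)` run on a Compute node would test the data path, using the example addresses from this guide.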

Example of networking architecture:

Edit the /etc/neutron/plugins/ml2/ml2_conf.ini file and update the following settings in their respective tags. If you use linuxbridge, update the bridge mappings in the linuxbridge section:

Important

It is mandatory that the HNAS management interface is reachable from the Shared File Systems node through the admin network, while the selected EVS data interface is reachable from the OpenStack cloud, for example through Neutron flat networking.

    [ml2]
    type_drivers = flat,vlan,vxlan,gre
    mechanism_drivers = openvswitch

    [ml2_type_flat]
    flat_networks = physnet1,physnet2

    [ml2_type_vlan]
    network_vlan_ranges = physnet1:1000:1500,physnet2:2000:2500

    [ovs]
    bridge_mappings = physnet1:br-ex,physnet2:br-eth1

You may have to repeat the last line above in another file on the Compute node. If that file exists, it is located at /etc/neutron/plugins/openvswitch/ovs_neutron_plugin.ini.

If you use openvswitch as the neutron agent, run the following on the network node:

    # ifconfig eth1 0
    # ovs-vsctl add-br br-eth1
    # ovs-vsctl add-port br-eth1 eth1
    # ifconfig eth1 up

Restart all neutron processes.
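
As a quick consistency check on the ml2 settings shown earlier, the sketch below parses a `bridge_mappings` value and reports any flat network that has no bridge mapped to it. The function names are hypothetical, and the check covers only the string format used in this guide's example.

```python
def parse_bridge_mappings(value):
    """Turn 'physnet1:br-ex,physnet2:br-eth1' into {'physnet1': 'br-ex', ...}."""
    mappings = {}
    for item in value.split(","):
        physnet, bridge = item.strip().split(":", 1)
        mappings[physnet] = bridge
    return mappings


def unmapped_flat_networks(flat_networks, bridge_mappings):
    """Return the flat network names that have no entry in bridge_mappings."""
    mapped = parse_bridge_mappings(bridge_mappings)
    return [name.strip() for name in flat_networks.split(",")
            if name.strip() not in mapped]


# Values copied from the ml2_conf.ini example in this guide.
print(parse_bridge_mappings("physnet1:br-ex,physnet2:br-eth1"))
print(unmapped_flat_networks("physnet1,physnet2", "physnet1:br-ex,physnet2:br-eth1"))
```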

Create the data HNAS network in OpenStack:

List the available projects:

    $ openstack project list

Create a network for the given project (DEMO), providing the project name, a name for the network, the name of the physical network over which the virtual network is implemented, and the type of the physical mechanism by which the virtual network is implemented:

    $ openstack network create --project DEMO --provider-network-type flat \
      --provider-physical-network physnet2 hnas_network

Optional - List available networks:

    $ openstack network list

Create a subnet in the same project (DEMO), providing the gateway IP of this subnet, a name for the subnet, the name of the network created before, and the CIDR of the subnet:

    $ openstack subnet create --project DEMO --gateway GATEWAY \
      --subnet-range SUBNET_CIDR --network NETWORK HNAS_SUBNET

Optional - List available subnets:

    $ openstack subnet list

Add the subnet interface to a router, providing the router name and the subnet name created before:

    $ openstack router add subnet ROUTER SUBNET
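
Before substituting real values for GATEWAY and SUBNET_CIDR in the subnet command above, it can help to confirm that the gateway is a usable address inside the CIDR. The following is a small sketch using Python's standard `ipaddress` module; the helper name and the sample addresses are illustrative assumptions.

```python
import ipaddress


def gateway_in_subnet(gateway, cidr):
    """Return True if gateway is a usable host address inside cidr."""
    # strict=True raises ValueError if cidr has host bits set (e.g. 10.0.1.5/24).
    network = ipaddress.ip_network(cidr, strict=True)
    address = ipaddress.ip_address(gateway)
    return (
        address in network
        and address != network.network_address
        and address != network.broadcast_address
    )


# Hypothetical values standing in for GATEWAY and SUBNET_CIDR.
print(gateway_in_subnet("10.0.1.1", "10.0.1.0/24"))
print(gateway_in_subnet("10.0.2.1", "10.0.1.0/24"))
```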

Pre-configuration on HNAS

Create a file system on HNAS. See the Hitachi HNAS reference.

Important

Make sure that the file system is not created as a replication target. Refer to the official HNAS administration guide.

Prepare the HNAS EVS network.

Create a route in HNAS to the project network:

    $ console-context --evs <EVS_ID_IN_USE> route-net-add --gateway <FLAT_NETWORK_GATEWAY> \
      <TENANT_PRIVATE_NETWORK>

Important

Make sure multi-tenancy is enabled and routes are configured per EVS.

    $ console-context --evs 3 route-net-add --gateway 192.168.1.1 \
      10.0.0.0/24
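
When routes are needed for several project networks, the command line above can be generated rather than typed by hand. The sketch below validates the gateway and network with the standard `ipaddress` module and then assembles the same `console-context ... route-net-add` command as a string; it does not run anything on HNAS, and the function name is a hypothetical helper.

```python
import ipaddress
import shlex


def route_net_add_command(evs_id, gateway, network):
    """Build the console-context route-net-add command line shown above."""
    ipaddress.ip_address(gateway)               # raises ValueError if malformed
    ipaddress.ip_network(network, strict=True)  # raises ValueError if host bits are set
    return shlex.join([
        "console-context", "--evs", str(int(evs_id)),
        "route-net-add", "--gateway", gateway, network,
    ])


# Same values as the example in this guide.
print(route_net_add_command(3, "192.168.1.1", "10.0.0.0/24"))
```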

Back end configuration

Configure HNAS driver.

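Configure the HNAS driver according to your environment. This example shows a minimal HNAS driver configuration. It uses the hds_hnas_ option prefix, whereas the options table above lists the hitachi_hnas_ prefix; use the option names that your manila release supports: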

    [DEFAULT]
    enabled_share_backends = hnas1
    enabled_share_protocols = NFS

    [hnas1]
    share_backend_name = HNAS1
    share_driver = manila.share.drivers.hitachi.hds_hnas.HDSHNASDriver
    driver_handles_share_servers = False
    hds_hnas_ip = 172.24.44.15
    hds_hnas_user = supervisor
    hds_hnas_password = supervisor
    hds_hnas_evs_id = 1
    hds_hnas_evs_ip = 10.0.1.20
    hds_hnas_file_system_name = FS-Manila

Optional - HNAS multi-backend configuration.

Update the enabled_share_backends flag with the names of the back ends separated by commas.

Add a section for every back end according to the example below:

    [DEFAULT]
    enabled_share_backends = hnas1,hnas2
    enabled_share_protocols = NFS

    [hnas1]
    share_backend_name = HNAS1
    share_driver = manila.share.drivers.hitachi.hds_hnas.HDSHNASDriver
    driver_handles_share_servers = False
    hds_hnas_ip = 172.24.44.15
    hds_hnas_user = supervisor
    hds_hnas_password = supervisor
    hds_hnas_evs_id = 1
    hds_hnas_evs_ip = 10.0.1.20
    hds_hnas_file_system_name = FS-Manila1

    [hnas2]
    share_backend_name = HNAS2
    share_driver = manila.share.drivers.hitachi.hds_hnas.HDSHNASDriver
    driver_handles_share_servers = False
    hds_hnas_ip = 172.24.44.15
    hds_hnas_user = supervisor
    hds_hnas_password = supervisor
    hds_hnas_evs_id = 1
    hds_hnas_evs_ip = 10.0.1.20
    hds_hnas_file_system_name = FS-Manila2
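
A common mistake in a multi-backend setup is listing a name in enabled_share_backends without adding its section. The sketch below shows one way to catch that, using Python's standard `configparser` as a stand-in for the service's own configuration loader. The helper name `backend_names` is hypothetical, and the embedded sample is a shortened copy of the example above.

```python
import configparser

# Shortened copy of the multi-backend example (only the options used below).
MANILA_CONF = """
[DEFAULT]
enabled_share_backends = hnas1,hnas2

[hnas1]
share_backend_name = HNAS1
hds_hnas_file_system_name = FS-Manila1

[hnas2]
share_backend_name = HNAS2
hds_hnas_file_system_name = FS-Manila2
"""


def backend_names(conf):
    """Map each enabled back-end section to its share_backend_name."""
    raw = conf.get("DEFAULT", "enabled_share_backends")
    enabled = [name.strip() for name in raw.split(",")]
    missing = [name for name in enabled if not conf.has_section(name)]
    if missing:
        raise ValueError(f"no section for back ends: {missing}")
    return {name: conf.get(name, "share_backend_name") for name in enabled}


conf = configparser.ConfigParser()
conf.read_string(MANILA_CONF)
print(backend_names(conf))
```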

Disable DHSS for HNAS share type configuration:

Note

Shared File Systems requires that the share type includes the driver_handles_share_servers extra-spec. This ensures that the share will be created on a backend that supports the requested driver_handles_share_servers capability.

    $ manila type-create hitachi False

(Optional multiple back end) Create an extra-spec to specify the HNAS back end on which the share will be created:

Create additional share types.

    $ manila type-create hitachi2 False

Add an extra-spec to each share type in order to match a specific back end. This makes it possible to specify which back end the Shared File Systems service will use when creating a share. The value must match the share_backend_name configured for that back end.

    $ manila type-key hitachi set share_backend_name=HNAS1
    $ manila type-key hitachi2 set share_backend_name=HNAS2

Restart all Shared File Systems services (manila-share, manila-scheduler and manila-api).
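
To illustrate how share-type extra-specs select a back end, the sketch below models the idea in a deliberately simplified way: a back end qualifies only if every extra-spec equals the capability it reports. This is not the scheduler's actual code. The function name, the capability dictionaries, and the exact string comparison are assumptions made for illustration, using the share_backend_name values from the configuration example.

```python
def matching_backends(extra_specs, backends):
    """Return back ends whose reported capabilities equal every extra-spec.

    Simplified model: exact string comparison, no scheduler operators.
    """
    return [
        name
        for name, capabilities in backends.items()
        if all(str(capabilities.get(key)) == str(value)
               for key, value in extra_specs.items())
    ]


# Capabilities as the two example back ends might report them (illustrative).
backends = {
    "hnas1": {"share_backend_name": "HNAS1", "driver_handles_share_servers": False},
    "hnas2": {"share_backend_name": "HNAS2", "driver_handles_share_servers": False},
}
share_type = {"share_backend_name": "HNAS1", "driver_handles_share_servers": False}
print(matching_backends(share_type, backends))
```

Under this model, a share type without a share_backend_name extra-spec would match both back ends, which is why the extra-spec is needed to pin a share type to one of them.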

Additional notes

- HNAS has some restrictions about the number of EVSs, filesystems, virtual-volumes, and simultaneous SSC connections. Check the manual specification for your system.

- Shares and snapshots are thin provisioned. Only the space actually used in HNAS is reported to the Shared File Systems service. Also, a snapshot does not initially take any space in HNAS; it only stores the difference between the share and the snapshot, so it grows as the share data changes.

- Administrators should manage the project’s quota (manila quota-update) to control the back-end usage.

Except where otherwise noted, this document is licensed under Creative Commons Attribution 3.0 License. See all OpenStack Legal Documents.