Appendix G: Container networking¶

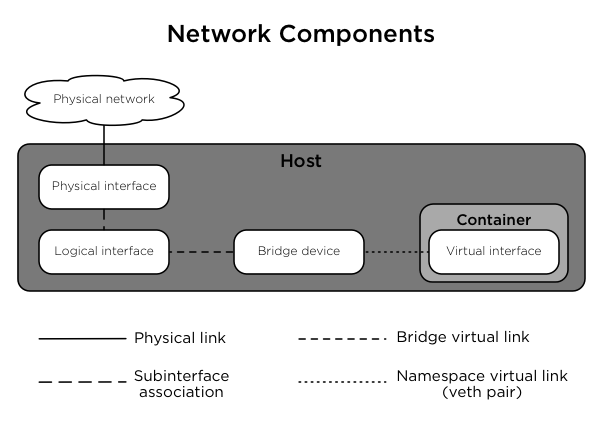

OpenStack-Ansible deploys Linux containers (LXC) and uses Linux bridging between the container and the host interfaces to ensure that all traffic from containers flows over multiple host interfaces. This appendix describes how the interfaces are connected and how traffic flows.

For more information about how the OpenStack Networking service (neutron) uses the interfaces for instance traffic, please see the OpenStack Networking Guide.

Bonded network interfaces¶

In a typical production environment, physical network interfaces are combined in bonded pairs for better redundancy and throughput. Avoid using two ports on the same multiport network card for the same bonded interface, because a network card failure affects both of the physical network interfaces used by the bond.

Linux bridges¶

The combination of containers and flexible deployment options requires implementation of advanced Linux networking features, such as bridges and namespaces.

Bridges provide layer 2 connectivity (similar to switches) among physical, logical, and virtual network interfaces within a host. After a bridge is created, the network interfaces are virtually plugged in to it.

OpenStack-Ansible uses bridges to connect physical and logical network interfaces on the host to virtual network interfaces within containers.

Namespaces provide logically separate layer 3 environments (similar to routers) within a host. Namespaces use virtual interfaces to connect with other namespaces, including the host namespace. These interfaces, often called veth pairs, are virtually plugged in between namespaces similar to patch cables connecting physical devices such as switches and routers.

Each container has a namespace that connects to the host namespace with one or more veth pairs. Unless specified, the system generates random names for veth pairs.
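As a rough illustration of the bridge and veth pair concepts described above, the following Python sketch builds (but does not run) the iproute2 commands that create a bridge, a named network namespace, and a veth pair joining the two. The names `br-demo`, `demo-ns`, `veth-host`, and `veth-ct` are hypothetical, and the use of `ip netns` is an assumption made for illustration only; the sketch does not reproduce what LXC or OpenStack-Ansible does internally.

```python
import shlex


def veth_bridge_commands(bridge, netns, host_if, peer_if):
    """Return iproute2 commands that join a network namespace to a host bridge.

    All names are supplied by the caller; nothing is executed here.
    """
    for name in (bridge, host_if, peer_if):
        # Linux interface names are limited to 15 characters.
        if not 0 < len(name) <= 15:
            raise ValueError(f"invalid interface name: {name!r}")

    commands = [
        # Create the bridge (the virtual "switch") on the host.
        ["ip", "link", "add", "name", bridge, "type", "bridge"],
        # Create a separate namespace standing in for a container.
        ["ip", "netns", "add", netns],
        # Create the veth pair (the virtual "patch cable").
        ["ip", "link", "add", host_if, "type", "veth", "peer", "name", peer_if],
        # Move one end of the pair into the namespace.
        ["ip", "link", "set", peer_if, "netns", netns],
        # Plug the other end into the bridge.
        ["ip", "link", "set", host_if, "master", bridge],
        # Bring everything up.
        ["ip", "link", "set", bridge, "up"],
        ["ip", "link", "set", host_if, "up"],
        ["ip", "netns", "exec", netns, "ip", "link", "set", peer_if, "up"],
    ]
    return [shlex.join(cmd) for cmd in commands]


if __name__ == "__main__":
    for line in veth_bridge_commands("br-demo", "demo-ns", "veth-host", "veth-ct"):
        print(line)
```

Printing the commands instead of running them keeps the sketch safe to try on any machine; running them for real would require root privileges on a Linux host.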

The following image demonstrates how the container network interfaces are connected to the host’s bridges and physical network interfaces:

Network diagrams¶

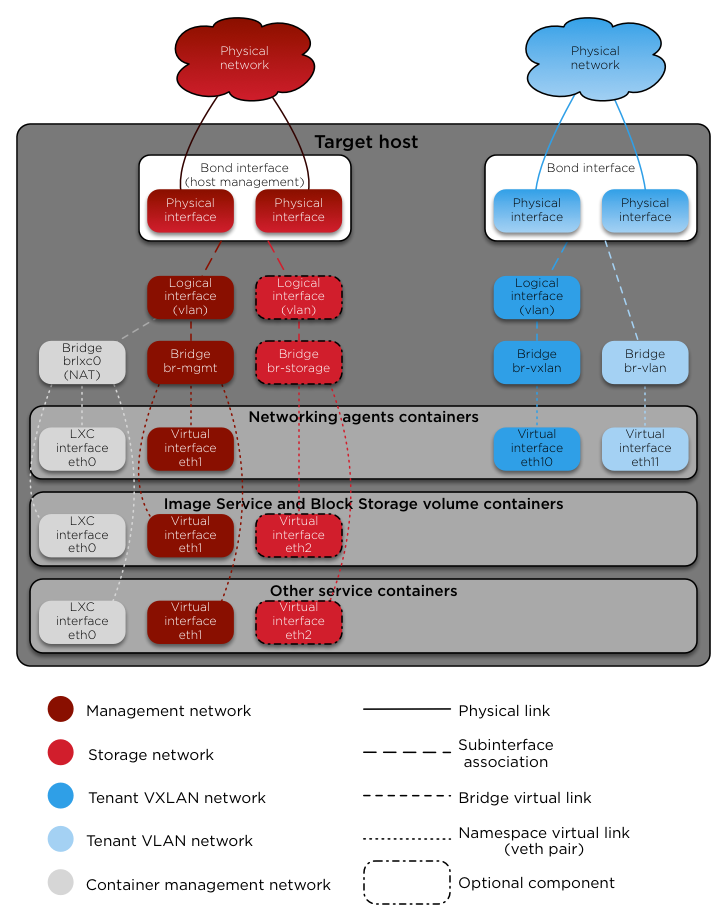

Hosts with services running in containers¶

The following diagram shows how all of the interfaces and bridges interconnect to provide network connectivity to the OpenStack deployment:

The interface lxcbr0 provides connectivity for the containers to the outside world, thanks to dnsmasq (DHCP/DNS) and NAT.

Note

If you require additional network configuration for your container interfaces (such as changing the routes on eth1 for routes on the management network), adapt your openstack_user_config.yml file. See Reference for openstack_user_config settings for more details.
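The reference later in this appendix describes the `static_routes` option as a list of items, each composed of a cidr and a gateway, that is translated into `post-up ip route add <cidr> via <gateway> || true` lines. A minimal Python sketch of that translation follows, assuming each item is a mapping with `cidr` and `gateway` keys. The function name and the sample addresses are hypothetical, and this is not the template that OpenStack-Ansible itself uses.

```python
import ipaddress


def render_static_routes(static_routes):
    """Render static_routes items as post-up lines for an interface config."""
    lines = []
    for route in static_routes:
        # Parsing validates the values and normalizes their text form.
        cidr = ipaddress.ip_network(route["cidr"])
        gateway = ipaddress.ip_address(route["gateway"])
        lines.append(f"post-up ip route add {cidr} via {gateway} || true")
    return lines


if __name__ == "__main__":
    # Hypothetical route: reach 10.176.0.0/12 through 172.29.248.1.
    example = [{"cidr": "10.176.0.0/12", "gateway": "172.29.248.1"}]
    for line in render_static_routes(example):
        print(line)
```

The trailing `|| true` in the rendered line means a failed `ip route add` (for example, because the route already exists) does not stop the interface from coming up.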

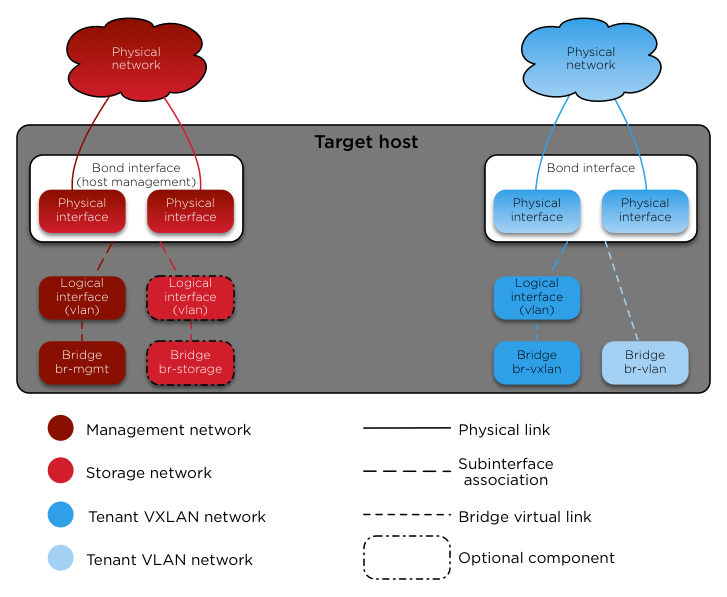

Services running “on metal” (deploying directly on the physical hosts)¶

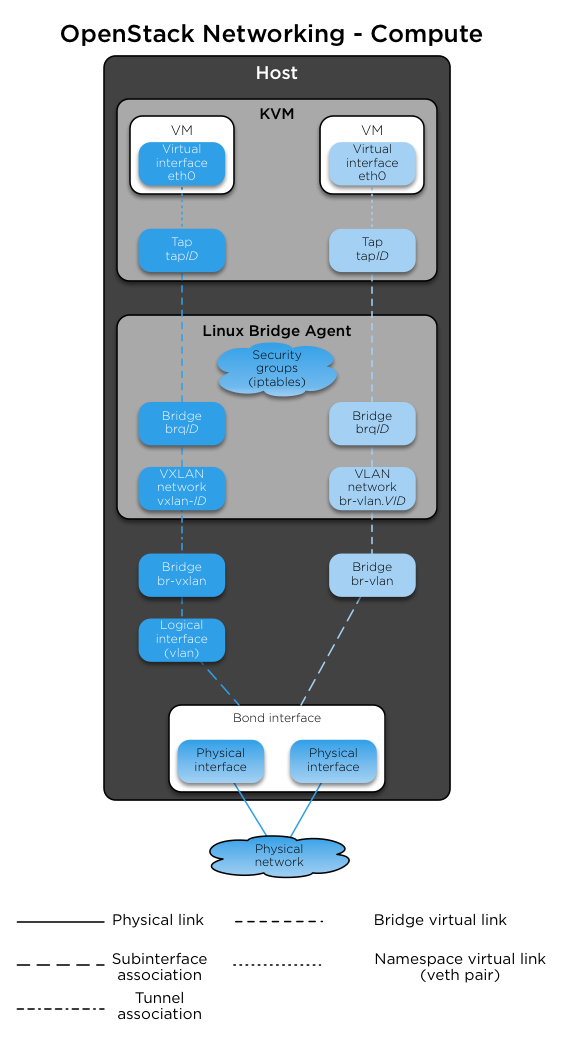

OpenStack-Ansible deploys the Compute service on the physical host rather than in a container. The following diagram shows how to use bridges for network connectivity:

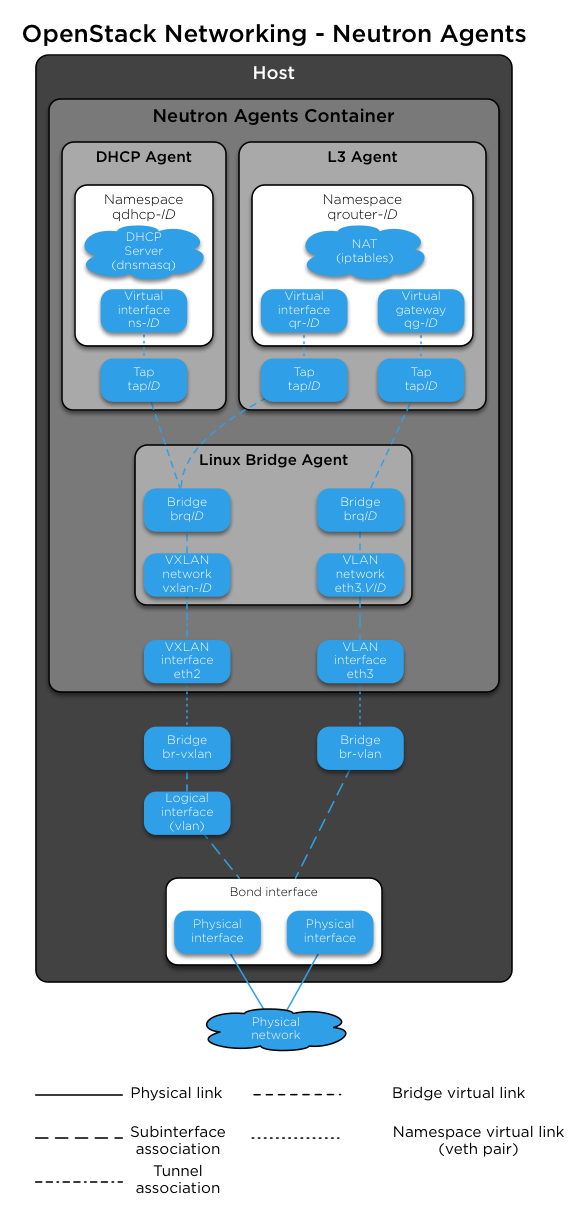

Neutron traffic¶

The following diagram shows how the Networking service (neutron) agents work with the br-vlan and br-vxlan bridges. Neutron is configured to use a DHCP agent, an L3 agent, and a Linux Bridge agent within a networking-agents container. The diagram shows how DHCP agents provide information (IP addresses and DNS servers) to the instances, and how routing works.

The following diagram shows how virtual machines connect to the br-vlan and br-vxlan bridges and send traffic to the network outside the host:

Reference for openstack_user_config settings¶

The openstack_user_config.yml.example file is heavily commented with the details of how to do more advanced container networking configuration. The contents of the file are shown here for reference.

#

# Overview

# ========

#

# This file contains the configuration for OpenStack Ansible Deployment

# (OSA) core services. Optional service configuration resides in the

# conf.d directory.

#

# You can customize the options in this file and copy it to

# /etc/openstack_deploy/openstack_user_config.yml or create a new

# file containing only necessary options for your environment

# before deployment.

#

# OSA uses PyYAML to parse YAML files and therefore supports structure

# and formatting options that augment traditional YAML. For example, aliases

# or references. For more information on PyYAML, see the documentation at

#

# http://pyyaml.org/wiki/PyYAMLDocumentation

#

# Configuration reference

# =======================

#

# Level: cidr_networks (required)

# Contains an arbitrary list of networks for the deployment. For each network,

# the inventory generator uses the IP address range to create a pool of IP

# addresses for network interfaces inside containers. A deployment requires

# at least one network for management.

#

# Option: <value> (required, string)

# Name of network and IP address range in CIDR notation. This IP address

# range coincides with the IP address range of the bridge for this network

# on the target host.

#

# Example:

#

# Define networks for a typical deployment.

#

# - Management network on 172.29.236.0/22. Control plane for infrastructure
#   services, OpenStack APIs, and horizon.
# - Tunnel network on 172.29.240.0/22. Data plane for project (tenant) VXLAN
#   networks.
# - Storage network on 172.29.244.0/22. Data plane for storage services such
#   as cinder and swift.
#
# cidr_networks:
#   container: 172.29.236.0/22
#   tunnel: 172.29.240.0/22
#   storage: 172.29.244.0/22

#

# Example:

#

# Define additional service network on 172.29.248.0/22 for deployment in a

# Rackspace data center.

#

# snet: 172.29.248.0/22

#

# --------

#

# Level: used_ips (optional)

# For each network in the 'cidr_networks' level, specify a list of IP addresses

# or a range of IP addresses that the inventory generator should exclude from

# the pools of IP addresses for network interfaces inside containers. To use a

# range, specify the lower and upper IP addresses (inclusive) with a comma

# separator.

#

# Example:

#

# The management network includes a router (gateway) on 172.29.236.1 and

# DNS servers on 172.29.236.11-12. The deployment includes seven target

# servers on 172.29.236.101-103, 172.29.236.111, 172.29.236.121, and

# 172.29.236.131. However, the inventory generator automatically excludes

# these IP addresses. The deployment host itself isn't automatically

# excluded. Network policy at this particular example organization

# also reserves 231-254 in the last octet at the high end of the range for

# network device management.

#

# used_ips:
#   - 172.29.236.1
#   - "172.29.236.100,172.29.236.200"
#   - "172.29.240.100,172.29.240.200"
#   - "172.29.244.100,172.29.244.200"

#

# --------

#

# Level: global_overrides (required)

# Contains global options that require customization for a deployment. For

# example, load balancer virtual IP addresses (VIP). This level also provides

# a mechanism to override other options defined in the playbook structure.

#

# Option: internal_lb_vip_address (required, string)

# Load balancer VIP for the following items:

#

# - Local package repository

# - Galera SQL database cluster

# - Administrative and internal API endpoints for all OpenStack services

# - Glance registry

# - Nova compute source of images

# - Cinder source of images

# - Instance metadata

#

# Option: external_lb_vip_address (required, string)

# Load balancer VIP for the following items:

#

# - Public API endpoints for all OpenStack services

# - Horizon

#

# Option: management_bridge (required, string)

# Name of management network bridge on target hosts. Typically 'br-mgmt'.

#

# Option: tunnel_bridge (optional, string)

# Name of tunnel network bridge on target hosts. Typically 'br-vxlan'.

#

# Level: provider_networks (required)

# List of container and bare metal networks on target hosts.

#

# Level: network (required)

# Defines a container or bare metal network. Create a level for each

# network.

#

# Option: type (required, string)

# Type of network. Networks other than those for neutron such as

# management and storage typically use 'raw'. Neutron networks use

# 'flat', 'vlan', or 'vxlan'. Coincides with ML2 plug-in configuration

# options.

#

# Option: container_bridge (required, string)

# Name of unique bridge on target hosts to use for this network. Typical

# values include 'br-mgmt', 'br-storage', 'br-vlan', 'br-vxlan', etc.

#

# Option: container_interface (required, string)

# Name of unique interface in containers to use for this network.

# Typical values include 'eth1', 'eth2', etc.

# NOTE: Container interface is different from host interfaces.

#

# Option: container_type (required, string)

# Name of mechanism that connects interfaces in containers to the bridge

# on target hosts for this network. Typically 'veth'.

#

# Option: host_bind_override (optional, string)

# Name of the physical network interface on the same L2 network being

# used with the br-vlan device. This host_bind_override should only

# be set for the ' container_bridge: "br-vlan" '.

# This interface is optional but highly recommended for vlan based

# OpenStack networking.

# If no additional network interface is available, a deployer can create

# a veth pair and plug it into the br-vlan bridge to provide

# this interface. An example can be found in the aio_interfaces.cfg

# file.

#

# Option: container_mtu (optional, string)

# Sets the MTU within LXC for a given network type.

#

# Option: ip_from_q (optional, string)

# Name of network in 'cidr_networks' level to use for IP address pool. Only

# valid for 'raw' and 'vxlan' types.

#

# Option: address_prefix (optional, string)

# Override for the prefix of the key added to each host that contains IP

# address information for this network. By default, this will be the name

# given in 'ip_from_q' with a fallback of the name of the interface given in

# 'container_interface'.

# (e.g., 'ip_from_q'_address and 'container_interface'_address)

#

# Option: is_container_address (required, boolean)

# If true, the load balancer uses this IP address to access services

# in the container. Only valid for networks with 'ip_from_q' option.

#

# Option: is_ssh_address (required, boolean)

# If true, Ansible uses this IP address to access the container via SSH.

# Only valid for networks with 'ip_from_q' option.

#

# Option: group_binds (required, string)

# List of one or more Ansible groups that contain this

# network. For more information, see the env.d YAML files.

#

# Option: reference_group (optional, string)

# An Ansible group that a host must be a member of, in addition to any of the

# groups within 'group_binds', for this network to apply.

#

# Option: net_name (optional, string)

# Name of network for 'flat' or 'vlan' types. Only valid for these

# types. Coincides with ML2 plug-in configuration options.

#

# Option: range (optional, string)

# For 'vxlan' type neutron networks, range of VXLAN network identifiers

# (VNI). For 'vlan' type neutron networks, range of VLAN tags. Supports

# more than one range of VLANs on a particular network. Coincides with

# ML2 plug-in configuration options.

#

# Option: static_routes (optional, list)

# List of additional routes to give to the container interface.

# Each item is composed of cidr and gateway. The items will be

# translated into the container network interfaces configuration

# as a `post-up ip route add <cidr> via <gateway> || true`.

#

# Option: gateway (optional, string)

# String containing the IP of the default gateway used by the

# container. Generally not needed: the containers will have

# their default gateway set with dnsmasq, pointing to the host

# which performs NAT for container connectivity.

#

# Example:

#

# Define a typical network architecture:

#

# - Network of type 'raw' that uses the 'br-mgmt' bridge and 'management'
#   IP address pool. Maps to the 'eth1' device in containers. Applies to all
#   containers and bare metal hosts. Both the load balancer and Ansible
#   use this network to access containers and services.
# - Network of type 'raw' that uses the 'br-storage' bridge and 'storage'
#   IP address pool. Maps to the 'eth2' device in containers. Applies to
#   nova compute and all storage service containers. Optionally applies
#   to the swift proxy service.
# - Network of type 'vxlan' that contains neutron VXLAN tenant networks
#   1 to 1000 and uses 'br-vxlan' bridge on target hosts. Maps to the
#   'eth10' device in containers. Applies to all neutron agent containers
#   and neutron agents on bare metal hosts.
# - Network of type 'vlan' that contains neutron VLAN networks 101 to 200
#   and 301 to 400 and uses the 'br-vlan' bridge on target hosts. Maps to
#   the 'eth11' device in containers. Applies to all neutron agent
#   containers and neutron agents on bare metal hosts.
# - Network of type 'flat' that contains one neutron flat network and uses
#   the 'br-vlan' bridge on target hosts. Maps to the 'eth12' device in
#   containers. Applies to all neutron agent containers and neutron agents
#   on bare metal hosts.
#
# Note: A pair of 'vlan' and 'flat' networks can use the same bridge because
# one only handles tagged frames and the other only handles untagged frames
# (the native VLAN in some parlance). However, additional 'vlan' or 'flat'
# networks require additional bridges.

#

# provider_networks:
#   - network:
#       group_binds:
#         - all_containers
#         - hosts
#       type: "raw"
#       container_bridge: "br-mgmt"
#       container_interface: "eth1"
#       container_type: "veth"
#       ip_from_q: "container"
#       is_container_address: true
#       is_ssh_address: true
#   - network:
#       group_binds:
#         - glance_api
#         - cinder_api
#         - cinder_volume
#         - nova_compute
#         # Uncomment the next line if using swift with a storage network.
#         # - swift_proxy
#       type: "raw"
#       container_bridge: "br-storage"
#       container_type: "veth"
#       container_interface: "eth2"
#       container_mtu: "9000"
#       ip_from_q: "storage"
#   - network:
#       group_binds:
#         - neutron_linuxbridge_agent
#       container_bridge: "br-vxlan"
#       container_type: "veth"
#       container_interface: "eth10"
#       container_mtu: "9000"
#       ip_from_q: "tunnel"
#       type: "vxlan"
#       range: "1:1000"
#       net_name: "vxlan"
#   - network:
#       group_binds:
#         - neutron_linuxbridge_agent
#       container_bridge: "br-vlan"
#       container_type: "veth"
#       container_interface: "eth11"
#       type: "vlan"
#       range: "101:200,301:400"
#       net_name: "vlan"
#   - network:
#       group_binds:
#         - neutron_linuxbridge_agent
#       container_bridge: "br-vlan"
#       container_type: "veth"
#       container_interface: "eth12"
#       host_bind_override: "eth12"
#       type: "flat"
#       net_name: "flat"

#

# --------

#

# Level: shared-infra_hosts (required)

# List of target hosts on which to deploy shared infrastructure services

# including the Galera SQL database cluster, RabbitMQ, and Memcached. Recommend

# three minimum target hosts for these services.

#

# Level: <value> (required, string)

# Hostname of a target host.

#

# Option: ip (required, string)

# IP address of this target host, typically the IP address assigned to

# the management bridge.

#

# Example:

#

# Define three shared infrastructure hosts:

#

# shared-infra_hosts:
#   infra1:
#     ip: 172.29.236.101
#   infra2:
#     ip: 172.29.236.102
#   infra3:
#     ip: 172.29.236.103

#

# --------

#

# Level: repo-infra_hosts (required)

# List of target hosts on which to deploy the package repository. Recommend

# minimum three target hosts for this service. Typically contains the same

# target hosts as the 'shared-infra_hosts' level.

#

# Level: <value> (required, string)

# Hostname of a target host.

#

# Option: ip (required, string)

# IP address of this target host, typically the IP address assigned to

# the management bridge.

#

# Example:

#

# Define three package repository hosts:

#

# repo-infra_hosts:
#   infra1:
#     ip: 172.29.236.101
#   infra2:
#     ip: 172.29.236.102
#   infra3:
#     ip: 172.29.236.103

#

# --------

#

# Level: os-infra_hosts (required)

# List of target hosts on which to deploy the glance API, nova API, heat API,

# and horizon. Recommend three minimum target hosts for these services.

# Typically contains the same target hosts as 'shared-infra_hosts' level.

#

# Level: <value> (required, string)

# Hostname of a target host.

#

# Option: ip (required, string)

# IP address of this target host, typically the IP address assigned to

# the management bridge.

#

# Example:

#

# Define three OpenStack infrastructure hosts:

#

# os-infra_hosts:
#   infra1:
#     ip: 172.29.236.101
#   infra2:
#     ip: 172.29.236.102
#   infra3:
#     ip: 172.29.236.103

#

# --------

#

# Level: identity_hosts (required)

# List of target hosts on which to deploy the keystone service. Recommend

# three minimum target hosts for this service. Typically contains the same

# target hosts as the 'shared-infra_hosts' level.

#

# Level: <value> (required, string)

# Hostname of a target host.

#

# Option: ip (required, string)

# IP address of this target host, typically the IP address assigned to

# the management bridge.

#

# Example:

#

# Define three OpenStack identity hosts:

#

# identity_hosts:
#   infra1:
#     ip: 172.29.236.101
#   infra2:
#     ip: 172.29.236.102
#   infra3:
#     ip: 172.29.236.103

#

# --------

#

# Level: network_hosts (required)

# List of target hosts on which to deploy neutron services. Recommend three

# minimum target hosts for this service. Typically contains the same target

# hosts as the 'shared-infra_hosts' level.

#

# Level: <value> (required, string)

# Hostname of a target host.

#

# Option: ip (required, string)

# IP address of this target host, typically the IP address assigned to

# the management bridge.

#

# Example:

#

# Define three OpenStack network hosts:

#

# network_hosts:
#   infra1:
#     ip: 172.29.236.101
#   infra2:
#     ip: 172.29.236.102
#   infra3:
#     ip: 172.29.236.103

#

# --------

#

# Level: compute_hosts (optional)

# List of target hosts on which to deploy the nova compute service. Recommend

# one minimum target host for this service. Typically contains target hosts

# that do not reside in other levels.

#

# Level: <value> (required, string)

# Hostname of a target host.

#

# Option: ip (required, string)

# IP address of this target host, typically the IP address assigned to

# the management bridge.

#

# Example:

#

# Define an OpenStack compute host:

#

# compute_hosts:
#   compute1:
#     ip: 172.29.236.121

#

# --------

#

# Level: ironic-compute_hosts (optional)

# List of target hosts on which to deploy the nova compute service for Ironic.

# Recommend one minimum target host for this service. Typically contains target

# hosts that do not reside in other levels.

#

# Level: <value> (required, string)

# Hostname of a target host.

#

# Option: ip (required, string)

# IP address of this target host, typically the IP address assigned to

# the management bridge.

#

# Example:

#

# Define an OpenStack compute host:

#

# ironic-compute_hosts:
#   ironic-infra1:
#     ip: 172.29.236.121

#

# --------

#

# Level: storage-infra_hosts (required)

# List of target hosts on which to deploy the cinder API. Recommend three

# minimum target hosts for this service. Typically contains the same target

# hosts as the 'shared-infra_hosts' level.

#

# Level: <value> (required, string)

# Hostname of a target host.

#

# Option: ip (required, string)

# IP address of this target host, typically the IP address assigned to

# the management bridge.

#

# Example:

#

# Define three OpenStack storage infrastructure hosts:

#

# storage-infra_hosts:
#   infra1:
#     ip: 172.29.236.101
#   infra2:
#     ip: 172.29.236.102
#   infra3:
#     ip: 172.29.236.103

#

# --------

#

# Level: storage_hosts (required)

# List of target hosts on which to deploy the cinder volume service. Recommend

# one minimum target host for this service. Typically contains target hosts

# that do not reside in other levels.

#

# Level: <value> (required, string)

# Hostname of a target host.

#

# Option: ip (required, string)

# IP address of this target host, typically the IP address assigned to

# the management bridge.

#

# Level: container_vars (required)

# Contains storage options for this target host.

#

# Option: cinder_storage_availability_zone (optional, string)

# Cinder availability zone.

#

# Option: cinder_default_availability_zone (optional, string)

# If the deployment contains more than one cinder availability zone,

# specify a default availability zone.

#

# Level: cinder_backends (required)

# Contains cinder backends.

#

# Option: limit_container_types (optional, string)

# Container name string in which to apply these options. Typically

# any container with 'cinder_volume' in the name.

#

# Level: <value> (required, string)

# Arbitrary name of the backend. Each backend contains one or more

# options for the particular backend driver. The template for the

# cinder.conf file can generate configuration for any backend

# providing that it includes the necessary driver options.

#

# Option: volume_backend_name (required, string)

# Name of backend, arbitrary.

#

# The following options apply to the LVM backend driver:

#

# Option: volume_driver (required, string)

# Name of volume driver, typically

# 'cinder.volume.drivers.lvm.LVMVolumeDriver'.

#

# Option: volume_group (required, string)

# Name of LVM volume group, typically 'cinder-volumes'.

#

# The following options apply to the NFS backend driver:

#

# Option: volume_driver (required, string)

# Name of volume driver,

# 'cinder.volume.drivers.nfs.NfsDriver'.

# NB. When using NFS driver you may want to adjust your

# env.d/cinder.yml file to run cinder-volumes in containers.

#

# Option: nfs_shares_config (optional, string)

# File containing list of NFS shares available to cinder, typically

# '/etc/cinder/nfs_shares'.

#

# Option: nfs_mount_point_base (optional, string)

# Location in which to mount NFS shares, typically

# '$state_path/mnt'.

#

# Option: nfs_mount_options (optional, string)

# Mount options used for the NFS mount points.

#

# Option: shares (required)

# List of shares to populate the 'nfs_shares_config' file. Each share

# uses the following format:

# - { ip: "{{ ip_nfs_server }}", share: "/vol/cinder" }

#

# The following options apply to the NetApp backend driver:

#

# Option: volume_driver (required, string)

# Name of volume driver,

# 'cinder.volume.drivers.netapp.common.NetAppDriver'.

# NB. When using NetApp drivers you may want to adjust your

# env.d/cinder.yml file to run cinder-volumes in containers.

#

# Option: netapp_storage_family (required, string)

# Access method, typically 'ontap_7mode' or 'ontap_cluster'.

#

# Option: netapp_storage_protocol (required, string)

# Transport method, typically 'iscsi' or 'nfs'. NFS transport also

# requires the 'nfs_shares_config' option.

#

# Option: nfs_shares_config (required, string)

# For NFS transport, name of the file containing shares. Typically

# '/etc/cinder/nfs_shares'.

#

# Option: netapp_server_hostname (required, string)

# NetApp server hostname.

#

# Option: netapp_server_port (required, integer)

# NetApp server port, typically 80 or 443.

#

# Option: netapp_login (required, string)

# NetApp server username.

#

# Option: netapp_password (required, string)

# NetApp server password.

#

# Example:

#

# Define an OpenStack storage host:

#

# storage_hosts:
#   lvm-storage1:
#     ip: 172.29.236.131

#

# Example:

#

# Use the LVM iSCSI backend in availability zone 'cinderAZ_1':

#

# container_vars:
#   cinder_storage_availability_zone: cinderAZ_1
#   cinder_default_availability_zone: cinderAZ_1
#   cinder_backends:
#     lvm:
#       volume_backend_name: LVM_iSCSI
#       volume_driver: cinder.volume.drivers.lvm.LVMVolumeDriver
#       volume_group: cinder-volumes
#       iscsi_ip_address: "{{ cinder_storage_address }}"
#     limit_container_types: cinder_volume

#

# Example:

#

# Use the NetApp iSCSI backend via Data ONTAP 7-mode in availability zone

# 'cinderAZ_2':

#

# container_vars:
#   cinder_storage_availability_zone: cinderAZ_2
#   cinder_default_availability_zone: cinderAZ_1
#   cinder_backends:
#     netapp:
#       volume_backend_name: NETAPP_iSCSI
#       volume_driver: cinder.volume.drivers.netapp.common.NetAppDriver
#       netapp_storage_family: ontap_7mode
#       netapp_storage_protocol: iscsi
#       netapp_server_hostname: hostname
#       netapp_server_port: 443
#       netapp_login: username
#       netapp_password: password

#

# Example:

#

# Use the QNAP iSCSI backend in availability zone

# 'cinderAZ_2':

#

# container_vars:
#   cinder_storage_availability_zone: cinderAZ_2
#   cinder_default_availability_zone: cinderAZ_1
#   cinder_backends:
#     limit_container_types: cinder_volume
#     qnap:
#       volume_backend_name: "QNAP 1 VOLUME"
#       volume_driver: cinder.volume.drivers.qnap.QnapISCSIDriver
#       qnap_management_url: http://10.10.10.5:8080
#       qnap_poolname: "Storage Pool 1"
#       qnap_storage_protocol: iscsi
#       qnap_server_port: 8080
#       iscsi_ip_address: 172.29.244.5
#       san_login: username
#       san_password: password
#       san_thin_provision: True

#

#

# Example:

#

# Use the ceph RBD backend in availability zone 'cinderAZ_3':

#

# container_vars:
#   cinder_storage_availability_zone: cinderAZ_3
#   cinder_default_availability_zone: cinderAZ_1
#   cinder_backends:
#     limit_container_types: cinder_volume
#     volumes_hdd:
#       volume_driver: cinder.volume.drivers.rbd.RBDDriver
#       rbd_pool: volumes_hdd
#       rbd_ceph_conf: /etc/ceph/ceph.conf
#       rbd_flatten_volume_from_snapshot: 'false'
#       rbd_max_clone_depth: 5
#       rbd_store_chunk_size: 4
#       rados_connect_timeout: -1
#       volume_backend_name: volumes_hdd
#       rbd_user: "{{ cinder_ceph_client }}"
#       rbd_secret_uuid: "{{ cinder_ceph_client_uuid }}"

#

#

# --------

#

# Level: log_hosts (required)

# List of target hosts on which to deploy logging services. Recommend

# one minimum target host for this service.

#

# Level: <value> (required, string)

# Hostname of a target host.

#

# Option: ip (required, string)

# IP address of this target host, typically the IP address assigned to

# the management bridge.

#

# Example:

#

# Define a logging host:

#

# log_hosts:
#   log1:
#     ip: 172.29.236.171

#

# --------

#

# Level: haproxy_hosts (optional)

# List of target hosts on which to deploy HAProxy. Recommend at least one

# target host for this service if hardware load balancers are not being

# used.

#

# Level: <value> (required, string)

# Hostname of a target host.

#

# Option: ip (required, string)

# IP address of this target host, typically the IP address assigned to

# the management bridge.

#

#

# Example:

#

# Define a virtual load balancer (HAProxy):

#

# While HAProxy can be used as a virtual load balancer, it is recommended to use

# a physical load balancer in a production environment.

#

# haproxy_hosts:
#   lb1:
#     ip: 172.29.236.100
#   lb2:
#     ip: 172.29.236.101

#

# In the above scenario (multiple hosts), HAProxy can be deployed in a
# highly available manner by installing keepalived.
#
# To make keepalived work, edit at least the following variables
# in ``user_variables.yml``:
#   haproxy_keepalived_external_vip_cidr: 192.168.0.4/25
#   haproxy_keepalived_internal_vip_cidr: 172.29.236.54/16
#   haproxy_keepalived_external_interface: br-flat
#   haproxy_keepalived_internal_interface: br-mgmt
#
# To always deploy (or upgrade to) the latest stable version of keepalived,
# edit the ``/etc/openstack_deploy/user_variables.yml`` file:
#   keepalived_package_state: latest

#

# The group_vars/all/keepalived.yml contains the keepalived

# variables that are fed into the keepalived role during

# the haproxy playbook.

# You can change the keepalived behavior for your

# deployment. Refer to the ``user_variables.yml`` file for

# more information.

#

# Keepalived pings a public IP address to check its status. The default

# address is ``193.0.14.129``. To change this default,

# set the ``keepalived_ping_address`` variable in the

# ``user_variables.yml`` file.
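To make the interaction between `cidr_networks` and `used_ips` in the file above more concrete, here is a minimal Python sketch, using only the standard `ipaddress` module, of how a pool of candidate container addresses could be derived from one network while skipping the excluded addresses and ranges. The function names are hypothetical. This is an illustration of the idea, not the inventory generator's actual code, and it assumes that the network and broadcast addresses are never handed out.

```python
import ipaddress


def parse_used_ips(entries):
    """Expand used_ips entries into inclusive (first, last) address pairs.

    Each entry is either a single address or "lower,upper".
    """
    ranges = []
    for entry in entries:
        parts = [part.strip() for part in str(entry).split(",")]
        if len(parts) == 1:
            first = last = ipaddress.ip_address(parts[0])
        elif len(parts) == 2:
            first = ipaddress.ip_address(parts[0])
            last = ipaddress.ip_address(parts[1])
        else:
            raise ValueError(f"unexpected used_ips entry: {entry!r}")
        if first > last:
            raise ValueError(f"range is reversed: {entry!r}")
        ranges.append((first, last))
    return ranges


def available_addresses(cidr, used_ips):
    """Yield host addresses in cidr that fall outside every used_ips range."""
    network = ipaddress.ip_network(cidr)
    ranges = parse_used_ips(used_ips)
    for host in network.hosts():
        if not any(first <= host <= last for first, last in ranges):
            yield host


if __name__ == "__main__":
    # Values taken from the cidr_networks and used_ips examples above.
    used = [
        "172.29.236.1",
        "172.29.236.100,172.29.236.200",
        "172.29.240.100,172.29.240.200",
        "172.29.244.100,172.29.244.200",
    ]
    pool = list(available_addresses("172.29.236.0/22", used))
    print(len(pool), "addresses available; first is", pool[0])
```

With the example values, the management network has 1022 usable host addresses, of which 102 are excluded (the gateway plus the 101 addresses from .100 to .200), leaving 920 in the pool. Entries that belong to other networks simply never match.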

Except where otherwise noted, this document is licensed under Creative Commons Attribution 3.0 License. See all OpenStack Legal Documents.