Hardening the virtualization layers

At the beginning of this chapter we discuss the use of both physical and virtual hardware by instances, the associated security risks, and some recommendations for mitigating those risks. We then discuss how the Secure Encrypted Virtualization technology can be used to encrypt the memory of VMs on AMD-based machines that support it. We conclude the chapter with a discussion of sVirt, an open source project for integrating SELinux mandatory access controls with the virtualization components.

Physical hardware (PCI passthrough)

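Many hypervisors offer a feature known as PCI passthrough. This allows an instance to have direct access to a piece of hardware on the node. For example, it can be used to give instances access to video cards or GPUs offering the compute unified device architecture (CUDA) for high performance computing. This feature carries two types of security risk: direct memory access and hardware infection.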

Direct memory access (DMA) is a feature that permits certain hardware devices to access arbitrary physical memory addresses in the host computer. Video cards often have this capability. However, an instance should not be given arbitrary physical memory access because this would give it a full view of both the host system and other instances running on the same node. Hardware vendors provide an input/output memory management unit (IOMMU) to manage DMA access in these situations. We recommend that cloud architects ensure the hypervisor is configured to use this hardware feature.

Note

The IOMMU feature is marketed as VT-d by Intel and AMD-Vi by AMD.
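As a rough way to confirm that a host is actually using its IOMMU, the following minimal sketch counts the IOMMU groups that the Linux kernel exposes under `/sys/kernel/iommu_groups`. The function names are illustrative, and the check assumes a Linux host; an empty result only suggests that the IOMMU is absent or not enabled, so it should be confirmed against the firmware settings and kernel log.

```python
import os


def iommu_groups(sysfs_dir="/sys/kernel/iommu_groups"):
    """Return the IOMMU group names found under sysfs_dir, in numeric order.

    An empty list suggests the IOMMU is absent, disabled in firmware,
    or not enabled by the kernel.
    """
    try:
        entries = os.listdir(sysfs_dir)
    except OSError:
        # Directory missing or unreadable: treat as "no groups visible".
        return []
    # Group names are small integers; sort "10" after "2".
    return sorted(entries, key=lambda name: (len(name), name))


def iommu_active(sysfs_dir="/sys/kernel/iommu_groups"):
    """Return True when at least one IOMMU group is visible."""
    return bool(iommu_groups(sysfs_dir))


if __name__ == "__main__":
    groups = iommu_groups()
    print("IOMMU groups visible:", len(groups))
```

Taking the directory as a parameter keeps the check easy to exercise on a machine without an IOMMU.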

A hardware infection occurs when an instance makes a malicious modification to the firmware or some other part of a device. As this device is used by other instances or the host OS, the malicious code can spread into those systems. The end result is that one instance can run code outside of its security domain. This is a significant breach as it is harder to reset the state of physical hardware than virtual hardware, and can lead to additional exposure such as access to the management network.

Solutions to the hardware infection problem are domain specific. The strategy is to identify how an instance can modify hardware state, then determine how to reset any modifications when the instance is done using the hardware. For example, one option could be to re-flash the firmware after use. Hardware longevity must be balanced against security, because some firmware will fail after a large number of writes. TPM technology, described in Secure bootstrapping, is a solution for detecting unauthorized firmware changes. Regardless of the strategy selected, it is important to understand the risks associated with this kind of hardware sharing so that they can be properly mitigated for a given deployment scenario.

Due to the risk and complexities associated with PCI passthrough, it should be disabled by default. If enabled for a specific need, you will need to have appropriate processes in place to ensure the hardware is clean before re-issue.
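One way to audit whether passthrough has been enabled on a compute node is to look for PCI settings in its nova configuration. The sketch below is a hedged illustration: it assumes the options live in the `[pci]` group of `nova.conf` under the names `device_spec`, `passthrough_whitelist`, or `alias`, which vary by release, and the vendor and product IDs in the usage note are purely illustrative.

```python
import configparser

# Option names assumed for the [pci] group; check the nova configuration
# reference for the release you run.
PCI_OPTIONS = ("device_spec", "passthrough_whitelist", "alias")


def pci_passthrough_settings(conf_text):
    """Return the PCI passthrough options set in nova.conf-style text.

    An empty dict means no passthrough-related option was found.
    Repeated options are collapsed to the last value by configparser.
    """
    parser = configparser.ConfigParser(
        strict=False,                   # tolerate repeated options
        interpolation=None,             # values may contain '%'
        default_section="__unused__",   # keep [DEFAULT] from leaking into [pci]
    )
    parser.read_string(conf_text)
    if not parser.has_section("pci"):
        return {}
    return {
        name: parser.get("pci", name)
        for name in PCI_OPTIONS
        if parser.has_option("pci", name)
    }
```

For example, passing text that contains `[pci]` followed by `alias = {"name": "gpu", "vendor_id": "10de", "product_id": "1eb8"}` returns a dict with the single key `alias`, which signals that the node needs a documented hardware clean-up process.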

Virtual hardware (QEMU)

When running a virtual machine, virtual hardware is a software layer that provides the hardware interface for the virtual machine. Instances use this functionality to provide network, storage, video, and other devices that may be needed. With this in mind, most instances in your environment will exclusively use virtual hardware, with a minority that will require direct hardware access. The major open source hypervisors use QEMU for this functionality. While QEMU fills an important need for virtualization platforms, it has proven to be a very challenging software project to write and maintain. Much of the functionality in QEMU is implemented with low-level code that is difficult for most developers to comprehend. The hardware virtualized by QEMU includes many legacy devices that have their own set of quirks. Putting all of this together, QEMU has been the source of many security problems, including hypervisor breakout attacks.

It is important to take proactive steps to harden QEMU. We recommend three specific steps:

- Minimizing the code base.

- Using compiler hardening.

- Using mandatory access controls such as sVirt, SELinux, or AppArmor.

In addition, ensure that your iptables default policy filters network traffic, and review the existing rule set to understand each rule and determine whether the policy needs to be expanded.

Minimizing the QEMU code base

We recommend minimizing the QEMU code base by removing unused components from the system. QEMU supports many different virtual hardware devices; however, only a small number of them are needed for a given instance. The most common hardware devices are the virtio devices. Some legacy instances will need access to specific hardware, which can be specified using glance metadata:

    $ glance image-update \
      --property hw_disk_bus=ide \
      --property hw_cdrom_bus=ide \
      --property hw_vif_model=e1000 \
      f16-x86_64-openstack-sda
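To see which images ask for legacy devices, the image properties can be compared with a site policy. The following minimal sketch uses the three property names from the command above; the `DEFAULT_ALLOWED` policy and the function name are hypothetical and should be replaced with whatever your deployment decides to offer.

```python
# Hypothetical site policy: device models this deployment is willing to offer.
# Adjust to match the devices actually compiled into your QEMU build.
DEFAULT_ALLOWED = {
    "hw_disk_bus": {"virtio", "scsi"},
    "hw_cdrom_bus": {"scsi", "sata"},
    "hw_vif_model": {"virtio"},
}


def legacy_device_requests(properties, allowed=DEFAULT_ALLOWED):
    """Return the image properties that request a device outside the policy.

    `properties` is a plain dict of image metadata (property name -> value).
    Properties that are not set on the image are ignored.
    """
    flagged = {}
    for name, permitted in allowed.items():
        value = properties.get(name)
        if value is not None and value.lower() not in permitted:
            flagged[name] = value
    return flagged


if __name__ == "__main__":
    image = {
        "name": "f16-x86_64-openstack-sda",
        "hw_disk_bus": "ide",
        "hw_cdrom_bus": "ide",
        "hw_vif_model": "e1000",
    }
    print(legacy_device_requests(image))
```

Images flagged this way indicate which emulated devices must remain in the QEMU build, and which can be removed once no image depends on them.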

A cloud architect should decide what devices to make available to cloud users. Anything that is not needed should be removed from QEMU. This step requires recompiling QEMU after modifying the options passed to the QEMU configure script. For a complete list of up-to-date options simply run ./configure --help from within the QEMU source directory. Decide what is needed for your deployment, and disable the remaining options.

Compiler hardening

Harden QEMU using compiler hardening options. Modern compilers provide a variety of compile time options to improve the security of the resulting binaries. These features include relocation read-only (RELRO), stack canaries, never execute (NX), position independent executable (PIE), and address space layout randomization (ASLR).

Many modern Linux distributions already build QEMU with compiler hardening enabled, so we recommend verifying your existing executable before proceeding. One tool that can assist you with this verification is checksec.sh.

- RELocation Read-Only (RELRO)

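Hardens the data sections of an executable. Both full and partial RELRO modes are supported by gcc. For QEMU, full RELRO is the best choice. This makes the global offset table read-only and places various internal data sections before the program data section in the resulting executable.

- Stack canaries

Places values on the stack and verifies their presence to help prevent buffer overflow attacks.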

- Never eXecute (NX)

Also known as Data Execution Prevention (DEP). Ensures that the data sections of the executable cannot be executed.

- Position Independent Executable (PIE)

Produces a position independent executable, which is necessary for ASLR.

- Address Space Layout Randomization (ASLR)

Ensures that the placement of both code and data regions is randomized. Enabled by the kernel when the executable is built with PIE (all modern Linux kernels support ASLR).

The following compiler options are recommended for GCC when compiling QEMU:

    CFLAGS="-arch x86_64 -fstack-protector-all -Wstack-protector \
    --param ssp-buffer-size=4 -pie -fPIE -ftrapv -D_FORTIFY_SOURCE=2 -O2 \
    -Wl,-z,relro,-z,now"

We recommend testing your QEMU executable after it is compiled to ensure that the compiler hardening is working properly.
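The following sketch shows one way such a test could be automated. It reads the text printed by `readelf -W -h -l -d -s` and reports whether PIE, RELRO, a non-executable stack, and stack-protector symbols appear to be present. The function names are illustrative, the parsing assumes the wide (`-W`) output layout of GNU readelf, and it is a simplified stand-in for a dedicated tool such as checksec.sh rather than a replacement for it.

```python
import subprocess


def hardening_report(readelf_text):
    """Summarize hardening indicators from `readelf -W -h -l -d -s` output."""
    pie = relro = bind_now = nx = canary = False
    for line in readelf_text.splitlines():
        fields = line.split()
        if not fields:
            continue
        if fields[0] == "Type:" and len(fields) > 1:
            # ELF header: PIE executables are reported as type DYN.
            pie = fields[1] == "DYN"
        elif fields[0] == "GNU_RELRO":
            relro = True
        elif fields[0] == "GNU_STACK":
            # Flags sit between the MemSiz and Align columns; "E" means
            # the stack is executable.
            nx = "E" not in "".join(fields[6:-1])
        elif "BIND_NOW" in line or ("(FLAGS_1)" in line and "NOW" in fields):
            # Immediate binding turns partial RELRO into full RELRO.
            bind_now = True
        elif "__stack_chk_fail" in line:
            # Symbol referenced by code built with a stack protector.
            canary = True
    if relro and bind_now:
        relro_mode = "full"
    elif relro:
        relro_mode = "partial"
    else:
        relro_mode = "none"
    return {"pie": pie, "relro": relro_mode, "nx": nx, "stack_canary": canary}


def inspect_binary(path):
    """Run readelf on `path` and return its hardening report."""
    result = subprocess.run(
        ["readelf", "-W", "-h", "-l", "-d", "-s", path],
        capture_output=True, text=True, check=True,
    )
    return hardening_report(result.stdout)
```

On a host with binutils installed, `inspect_binary` can be pointed at the QEMU system emulator binary shipped by your distribution. A result other than full RELRO, PIE, NX, and a stack canary is a prompt to review the build flags.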

Most cloud deployments will not build software, such as QEMU, by hand. It is better to use packaging to ensure that the process is repeatable and to ensure that the end result can be easily deployed throughout the cloud. The references below provide some additional details on applying compiler hardening options to existing packages.

- DEB packages:

- RPM packages:

Secure Encrypted Virtualization

Secure Encrypted Virtualization (SEV) is a technology from AMD which enables the memory for a VM to be encrypted with a key unique to the VM. SEV is available in the Train release as a technical preview with KVM guests on certain AMD-based machines for the purpose of evaluating the technology.

The KVM hypervisor section of the nova configuration guide contains information needed to configure the machine and hypervisor, and lists several limitations of SEV.
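Before following that guide, it can be useful to check whether the host kernel reports SEV as enabled. The sketch below assumes the `kvm_amd` module exposes its `sev` parameter at `/sys/module/kvm_amd/parameters/sev` and that the value reads `1` or `Y` when SEV is enabled; both the path and the accepted values are assumptions that depend on the kernel version, and the nova configuration guide remains the authoritative reference.

```python
# Assumed location of the kvm_amd "sev" module parameter on Linux.
SEV_PARAM_PATH = "/sys/module/kvm_amd/parameters/sev"


def sev_param_enabled(param_text):
    """Interpret the contents of the sev parameter file.

    Kernels report the flag either as "1" or as "Y"; anything else
    is treated as disabled.
    """
    return param_text.strip() in {"1", "Y", "y"}


def host_reports_sev(param_path=SEV_PARAM_PATH):
    """Return True when the parameter file exists and reports SEV as enabled."""
    try:
        with open(param_path) as handle:
            return sev_param_enabled(handle.read())
    except OSError:
        # Module not loaded, not an AMD host, or file unreadable.
        return False


if __name__ == "__main__":
    print("SEV reported by kvm_amd:", host_reports_sev())
```

A positive result only shows that the kernel module has SEV switched on. The firmware, libvirt, QEMU, and nova settings described in the configuration guide must still be in place.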

SEV provides protection for data in the memory used by the running VM. However, while the first phase of SEV integration with OpenStack enables encrypted memory for VMs, importantly it does not provide the LAUNCH_MEASURE or LAUNCH_SECRET capabilities which are available with the SEV firmware. This means that data used by an SEV-protected VM may be subject to attacks from a motivated adversary who has control of the hypervisor. For example, a rogue administrator on the hypervisor machine could provide a VM image for tenants with a backdoor and spyware capable of stealing secrets, or replace the VNC server process to snoop data sent to or from the VM console, including passwords which unlock full disk encryption solutions.

To reduce the chances for a rogue administrator to gain unauthorized access to data, the following security practices should accompany the use of SEV:

- A full disk encryption solution should be used by the VM.

- A bootloader password should be used on the VM.

In addition, standard security best practices should be used with the VM including the following:

- The VM should be well maintained, including regular security scanning and patching to ensure a continuously strong security posture for the VM.

- Connections to the VM should use encrypted and authenticated protocols such as HTTPS and SSH.

- Additional security tools and processes should be considered and used for the VM appropriate to the level of sensitivity of the data.

Mandatory access controls

Compiler hardening makes it more difficult to attack the QEMU process. However, if an attacker does succeed, you want to limit the impact of the attack. Mandatory access controls accomplish this by restricting the privileges of the QEMU process to only what is needed. This can be accomplished by using sVirt, SELinux, or AppArmor. When using sVirt, SELinux is configured to run each QEMU process under a separate security context. AppArmor can be configured to provide similar functionality. We provide more details on sVirt and instance isolation in the section below, sVirt: SELinux and virtualization.
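These controls only help when they are actually enforced on the compute node. As a minimal sketch, assuming a Linux host with the SELinux filesystem mounted at `/sys/fs/selinux`, the following function reads the `enforce` file to report the current mode. The function name and return values are illustrative.

```python
import os


def selinux_mode(selinuxfs="/sys/fs/selinux"):
    """Return "enforcing", "permissive", or "unavailable".

    "unavailable" covers hosts where SELinux is disabled or the
    enforce file cannot be read.
    """
    enforce_path = os.path.join(selinuxfs, "enforce")
    try:
        with open(enforce_path) as handle:
            value = handle.read().strip()
    except OSError:
        return "unavailable"
    # The kernel writes "1" when policy is enforced and "0" when permissive.
    return "enforcing" if value == "1" else "permissive"


if __name__ == "__main__":
    print("SELinux mode:", selinux_mode())
```

A compute node that reports anything other than enforcing is not getting the confinement that sVirt relies on, even if the policy packages are installed.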

Specific SELinux policies are available for many OpenStack services. CentOS users can review these policies by installing the selinux-policy source package. The most up to date policies appear in Fedora's selinux-policy repository. The rawhide-contrib branch has files that end in .te, such as cinder.te, that can be used on systems running SELinux.

AppArmor profiles for OpenStack services do not currently exist, but the OpenStack-Ansible project handles this by applying AppArmor profiles to each container that runs an OpenStack service.

sVirt: SELinux and virtualization

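With its unique kernel-level architecture and security mechanisms developed by the National Security Agency (NSA), KVM provides foundational isolation technologies for multi-tenancy. With developmental origins dating back to 2002, the Secure Virtualization (sVirt) technology is the application of SELinux to modern virtualization. SELinux, which was designed to apply separation control based upon labels, has been extended to provide isolation between virtual machine processes, devices, data files, and the system processes acting on their behalf.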

OpenStack's sVirt implementation aims to protect hypervisor hosts and virtual machines against two primary threat vectors:

- Hypervisor threats

A compromised application running within a virtual machine attacks the hypervisor to access underlying resources. For example, a virtual machine might gain access to the hypervisor OS, physical devices, or other applications. This threat vector represents considerable risk, as a compromise on a hypervisor can infect the physical hardware as well as expose other virtual machines and network segments.

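- Virtual machine (multi-tenant) threats

A compromised application running within a virtual machine attacks the hypervisor to access or control another virtual machine and its resources. This is a threat vector unique to virtualization and represents considerable risk, as a multitude of virtual machine image files could be compromised due to a vulnerability in a single application. This virtual network attack is a major concern because the administrative techniques for protecting real networks do not directly apply to the virtual environment.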

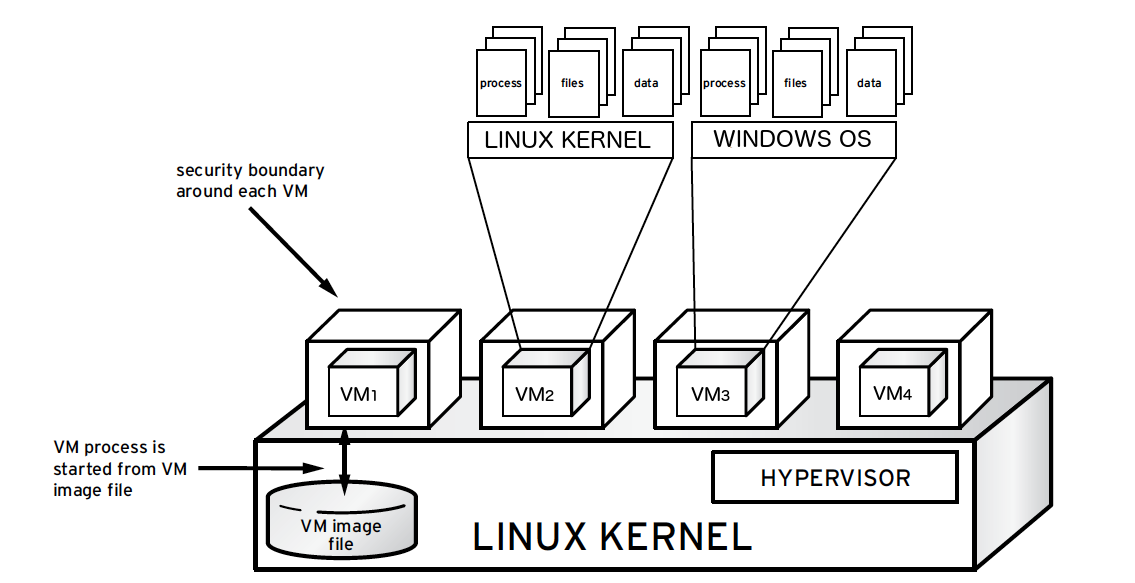

Each KVM-based virtual machine is a process which is labeled by SELinux, effectively establishing a security boundary around each virtual machine. This security boundary is monitored and enforced by the Linux kernel, restricting the virtual machine's access to resources outside of its boundary, such as host machine data files or other VMs.

sVirt isolation is provided regardless of the guest operating system running inside the virtual machine. Linux or Windows VMs can be used. Additionally, many Linux distributions provide SELinux within the operating system, allowing the virtual machine to protect internal virtual resources from threats.

Labels and categories

KVM-based virtual machine instances are labeled with their own SELinux data type, known as svirt_image_t. Kernel level protections prevent unauthorized system processes, such as malware, from manipulating the virtual machine image files on disk. When virtual machines are powered off, images are stored as svirt_image_t as shown below:

    system_u:object_r:svirt_image_t:SystemLow image1
    system_u:object_r:svirt_image_t:SystemLow image2
    system_u:object_r:svirt_image_t:SystemLow image3
    system_u:object_r:svirt_image_t:SystemLow image4

The svirt_image_t label uniquely identifies image files on disk, allowing the SELinux policy to restrict access. When a KVM-based compute image is powered on, sVirt appends a random numerical identifier to the image. sVirt is capable of assigning numeric identifiers to a maximum of 524,288 virtual machines per hypervisor node; however, most OpenStack deployments are highly unlikely to encounter this limitation.

This example shows the sVirt category identifier:

    system_u:object_r:svirt_image_t:s0:c87,c520 image1
    system_u:object_r:svirt_image_t:s0:c419,c172 image2
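To make the role of the category pair concrete, the sketch below splits a context string into its fields and applies a simplified category check: a process may reach an object only if the process holds every category on the object. This is an illustration under stated assumptions, not the kernel's algorithm. It ignores type enforcement, sensitivity comparison, and category ranges such as `c0.c1023`, and the names `parse_context` and `mcs_dominates` are hypothetical. The `svirt_t` process type used in the usage note is the type commonly assigned to confined QEMU processes.

```python
from typing import NamedTuple


class SELinuxContext(NamedTuple):
    user: str
    role: str
    type: str
    sensitivity: str
    categories: frozenset


def parse_context(label):
    """Split "user:role:type:sensitivity[:categories]" into its fields."""
    parts = label.split(":", 4)
    if len(parts) < 4:
        raise ValueError("not a full SELinux context: %r" % label)
    user, role, type_, sensitivity = parts[:4]
    # Categories are a comma-separated list such as "c87,c520".
    raw = parts[4] if len(parts) == 5 else ""
    categories = frozenset(c for c in raw.split(",") if c)
    return SELinuxContext(user, role, type_, sensitivity, categories)


def mcs_dominates(process_label, object_label):
    """Return True if the process holds every category set on the object."""
    process = parse_context(process_label)
    target = parse_context(object_label)
    return target.categories <= process.categories
```

With these helpers, a process labeled `system_u:system_r:svirt_t:s0:c87,c520` passes the check for an image labeled `system_u:object_r:svirt_image_t:s0:c87,c520` but fails it for one labeled `system_u:object_r:svirt_image_t:s0:c419,c172`. That is the separation sVirt depends on when it gives each running instance its own pair.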

SELinux users and roles

SELinux manages user roles. These can be viewed through the -Z flag of commands such as id, ls, and ps, or with the semanage command. On the hypervisor, only administrators should be able to access the system, and both the administrative users and any other users on the system should be assigned an appropriate context. For more information, see the SELinux users documentation.

Booleans

To ease the administrative burden of managing SELinux, many enterprise Linux platforms use SELinux Booleans to quickly change the security posture of sVirt.

Red Hat Enterprise Linux-based KVM deployments use the following sVirt Booleans:

| sVirt SELinux Boolean | Description |
|---|---|
| virt_use_comm | Allow virt to use serial or parallel communication ports. |
| virt_use_fusefs | Allow virt to read FUSE mounted files. |
| virt_use_nfs | Allow virt to manage NFS mounted files. |
| virt_use_samba | Allow virt to manage CIFS mounted files. |
| virt_use_sanlock | Allow confined virtual guests to interact with sanlock. |
| virt_use_sysfs | Allow virt to manage device configuration (PCI). |
| virt_use_usb | Allow virt to use USB devices. |
| virt_use_xserver | Allow virtual machines to interact with the X Window System. |
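To review which of these Booleans are switched on for a host, the output of `getsebool -a` can be filtered. The following minimal sketch assumes the usual `name --> on` or `name --> off` line format printed by that command. The function names are illustrative, and the `virt_use_` prefix filter simply mirrors the table above.

```python
import subprocess


def parse_getsebool(output):
    """Map each Boolean name in `getsebool -a` output to True (on) or False."""
    states = {}
    for line in output.splitlines():
        name, separator, state = line.partition("-->")
        if separator:
            states[name.strip()] = state.strip() == "on"
    return states


def enabled_virt_booleans(output):
    """Return the sorted names of virt_use_* Booleans that are on."""
    return sorted(
        name
        for name, is_on in parse_getsebool(output).items()
        if name.startswith("virt_use_") and is_on
    )


def read_host_booleans():
    """Run `getsebool -a` on an SELinux host and return its raw output."""
    result = subprocess.run(
        ["getsebool", "-a"], capture_output=True, text=True, check=True
    )
    return result.stdout
```

Each Boolean that is on widens what confined guests may touch, so the resulting list is a reasonable starting point for deciding which ones a deployment can turn off.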