
Provider network groups

Many network configuration examples assume a homogeneous environment, where every server is configured identically and consistent network interfaces and interface names can be assumed across all hosts.

Recent changes to OpenStack-Ansible (OSA) enable deployers to define provider networks that apply to particular inventory groups, which allows for a heterogeneous network configuration within a cloud environment. New groups can be created, or existing inventory groups such as network_hosts or compute_hosts can be used, to ensure that certain configurations are applied only to hosts that meet the given parameters.

Before reading this document, please review the following scenario:

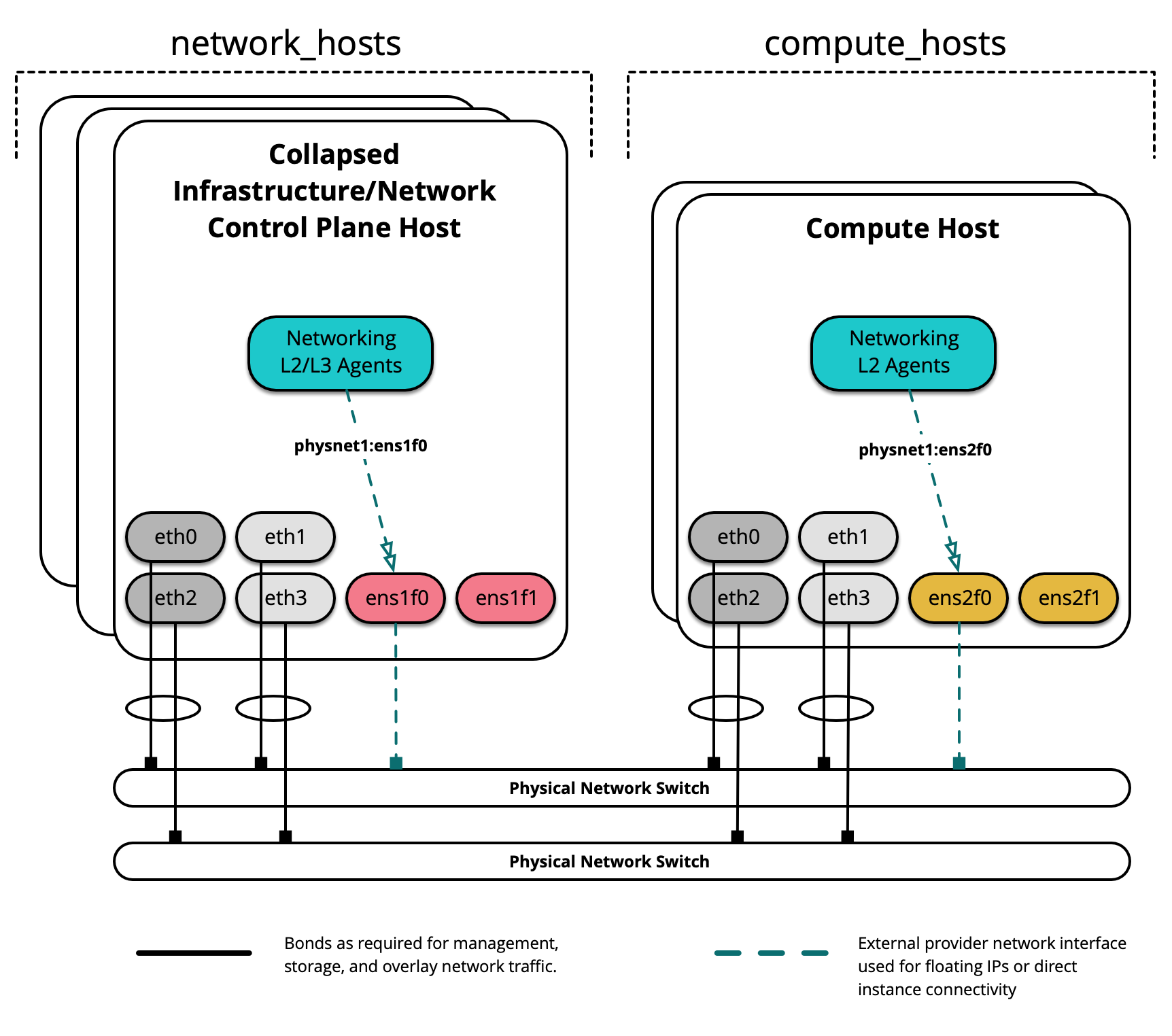

This example environment has the following characteristics:

- A network_hosts group consisting of three collapsed infrastructure/network (control plane) hosts
- A compute_hosts group consisting of two compute hosts
- Multiple Network Interface Cards (NICs) used as provider network interfaces that vary between hosts

Note

The network_hosts and compute_hosts groups are pre-defined groups in an OpenStack-Ansible deployment.

The following diagram shows servers with different network interface names:

In this example environment, the infrastructure/network nodes hosting the L2/L3/DHCP agents use an interface named ens1f0 for the provider network physnet1. The compute nodes, on the other hand, use an interface named ens2f0 for the same physnet1 provider network.

Note

Differences in network interface names may be the result of differences in drivers and/or PCI slot locations.

Deployment configuration

Environment layout

The /etc/openstack_deploy/openstack_user_config.yml file defines the environment layout.

The following configuration describes the layout for this environment.

    ---
    cidr_networks:
      container: 172.29.236.0/22
      tunnel: 172.29.240.0/22
      storage: 172.29.244.0/22

    used_ips:
      - "172.29.236.1,172.29.236.50"
      - "172.29.240.1,172.29.240.50"
      - "172.29.244.1,172.29.244.50"
      - "172.29.248.1,172.29.248.50"

    global_overrides:
      internal_lb_vip_address: 172.29.236.9
      #
      # The below domain name must resolve to an IP address
      # in the CIDR specified in haproxy_keepalived_external_vip_cidr.
      # If using different protocols (https/http) for the public/internal
      # endpoints the two addresses must be different.
      #
      external_lb_vip_address: openstack.example.com
      management_bridge: "br-mgmt"
      provider_networks:
        - network:
            container_bridge: "br-mgmt"
            container_type: "veth"
            container_interface: "eth1"
            ip_from_q: "container"
            type: "raw"
            group_binds:
              - all_containers
              - hosts
            is_container_address: true
        #
        # The below provider network defines details related to vxlan traffic,
        # including the range of VNIs to assign to project/tenant networks and
        # other attributes.
        #
        # The network details will be used to populate the respective network
        # configuration file(s) on the members of the listed groups.
        #
        - network:
            container_bridge: "br-vxlan"
            container_type: "veth"
            container_interface: "eth10"
            ip_from_q: "tunnel"
            type: "vxlan"
            range: "1:1000"
            net_name: "vxlan"
            group_binds:
              - network_hosts
              - compute_hosts
        #
        # The below provider network(s) define details related to a given provider
        # network: physnet1. Details include the name of the veth interface to
        # connect to the bridge when agent on_metal is False (container_interface)
        # or the physical interface to connect to the bridge when agent on_metal
        # is True (host_bind_override), as well as the network type. The provider
        # network name (net_name) will be used to build a physical network mapping
        # to a network interface; either container_interface or host_bind_override
        # (when defined).
        #
        # The network details will be used to populate the respective network
        # configuration file(s) on the members of the listed groups. In this
        # example, host_bind_override specifies the ens1f0 interface and applies
        # only to the members of network_hosts:
        #
        - network:
            container_bridge: "br-vlan"
            container_type: "veth"
            container_interface: "eth12"
            host_bind_override: "ens1f0"
            type: "flat"
            net_name: "physnet1"
            group_binds:
              - network_hosts
        - network:
            container_bridge: "br-vlan"
            container_type: "veth"
            container_interface: "eth11"
            host_bind_override: "ens1f0"
            type: "vlan"
            range: "101:200,301:400"
            net_name: "physnet1"
            group_binds:
              - network_hosts
        #
        # The below provider network(s) also define details related to the
        # physnet1 provider network. In this example, however, host_bind_override
        # specifies the ens2f0 interface and applies only to the members of
        # compute_hosts:
        #
        - network:
            container_bridge: "br-vlan"
            container_type: "veth"
            container_interface: "eth12"
            host_bind_override: "ens2f0"
            type: "flat"
            net_name: "physnet1"
            group_binds:
              - compute_hosts
        - network:
            container_bridge: "br-vlan"
            container_type: "veth"
            container_interface: "eth11"
            host_bind_override: "ens2f0"
            type: "vlan"
            range: "101:200,301:400"
            net_name: "physnet1"
            group_binds:
              - compute_hosts
        #
        # The below provider network defines details related to storage traffic.
        #
        - network:
            container_bridge: "br-storage"
            container_type: "veth"
            container_interface: "eth2"
            ip_from_q: "storage"
            type: "raw"
            group_binds:
              - glance_api
              - cinder_api
              - cinder_volume
              - nova_compute

    ###
    ### Infrastructure
    ###

    # galera, memcache, rabbitmq, utility
    shared-infra_hosts:
      infra1:
        ip: 172.29.236.11
        container_vars:
          # Optional | Example setting the container_tech for a target host.
          container_tech: lxc
      infra2:
        ip: 172.29.236.12
        container_vars:
          # Optional | Example setting the container_tech for a target host.
          container_tech: nspawn
      infra3:
        ip: 172.29.236.13

    # repository (apt cache, python packages, etc)
    repo-infra_hosts:
      infra1:
        ip: 172.29.236.11
      infra2:
        ip: 172.29.236.12
      infra3:
        ip: 172.29.236.13

    # load balancer
    # Ideally the load balancer should not use the Infrastructure hosts.
    # Dedicated hardware is best for improved performance and security.
    haproxy_hosts:
      infra1:
        ip: 172.29.236.11
      infra2:
        ip: 172.29.236.12
      infra3:
        ip: 172.29.236.13

    # rsyslog server
    log_hosts:
      log1:
        ip: 172.29.236.14

    ###
    ### OpenStack
    ###

    # keystone
    identity_hosts:
      infra1:
        ip: 172.29.236.11
      infra2:
        ip: 172.29.236.12
      infra3:
        ip: 172.29.236.13

    # cinder api services
    storage-infra_hosts:
      infra1:
        ip: 172.29.236.11
      infra2:
        ip: 172.29.236.12
      infra3:
        ip: 172.29.236.13

    # glance
    # The settings here are repeated for each infra host.
    # They could instead be applied as global settings in
    # user_variables, but are left here to illustrate that
    # each container could have different storage targets.
    image_hosts:
      infra1:
        ip: 172.29.236.11
        container_vars:
          limit_container_types: glance
          glance_nfs_client:
            - server: "172.29.244.15"
              remote_path: "/images"
              local_path: "/var/lib/glance/images"
              type: "nfs"
              options: "_netdev,auto"
      infra2:
        ip: 172.29.236.12
        container_vars:
          limit_container_types: glance
          glance_nfs_client:
            - server: "172.29.244.15"
              remote_path: "/images"
              local_path: "/var/lib/glance/images"
              type: "nfs"
              options: "_netdev,auto"
      infra3:
        ip: 172.29.236.13
        container_vars:
          limit_container_types: glance
          glance_nfs_client:
            - server: "172.29.244.15"
              remote_path: "/images"
              local_path: "/var/lib/glance/images"
              type: "nfs"
              options: "_netdev,auto"

    # placement
    placement-infra_hosts:
      infra1:
        ip: 172.29.236.11
      infra2:
        ip: 172.29.236.12
      infra3:
        ip: 172.29.236.13

    # nova api, conductor, etc services
    compute-infra_hosts:
      infra1:
        ip: 172.29.236.11
      infra2:
        ip: 172.29.236.12
      infra3:
        ip: 172.29.236.13

    # heat
    orchestration_hosts:
      infra1:
        ip: 172.29.236.11
      infra2:
        ip: 172.29.236.12
      infra3:
        ip: 172.29.236.13

    # horizon
    dashboard_hosts:
      infra1:
        ip: 172.29.236.11
      infra2:
        ip: 172.29.236.12
      infra3:
        ip: 172.29.236.13

    # neutron server, agents (L3, etc)
    network_hosts:
      infra1:
        ip: 172.29.236.11
      infra2:
        ip: 172.29.236.12
      infra3:
        ip: 172.29.236.13

    # ceilometer (telemetry data collection)
    metering-infra_hosts:
      infra1:
        ip: 172.29.236.11
      infra2:
        ip: 172.29.236.12
      infra3:
        ip: 172.29.236.13

    # aodh (telemetry alarm service)
    metering-alarm_hosts:
      infra1:
        ip: 172.29.236.11
      infra2:
        ip: 172.29.236.12
      infra3:
        ip: 172.29.236.13

    # gnocchi (telemetry metrics storage)
    metrics_hosts:
      infra1:
        ip: 172.29.236.11
      infra2:
        ip: 172.29.236.12
      infra3:
        ip: 172.29.236.13

    # nova hypervisors
    compute_hosts:
      compute1:
        ip: 172.29.236.16
      compute2:
        ip: 172.29.236.17

    # ceilometer compute agent (telemetry data collection)
    metering-compute_hosts:
      compute1:
        ip: 172.29.236.16
      compute2:
        ip: 172.29.236.17

    # cinder volume hosts (NFS-backed)
    # The settings here are repeated for each infra host.
    # They could instead be applied as global settings in
    # user_variables, but are left here to illustrate that
    # each container could have different storage targets.
    storage_hosts:
      infra1:
        ip: 172.29.236.11
        container_vars:
          cinder_backends:
            limit_container_types: cinder_volume
            nfs_volume:
              volume_backend_name: NFS_VOLUME1
              volume_driver: cinder.volume.drivers.nfs.NfsDriver
              nfs_mount_options: "rsize=65535,wsize=65535,timeo=1200,actimeo=120"
              nfs_shares_config: /etc/cinder/nfs_shares
              shares:
                - ip: "172.29.244.15"
                  share: "/vol/cinder"
      infra2:
        ip: 172.29.236.12
        container_vars:
          cinder_backends:
            limit_container_types: cinder_volume
            nfs_volume:
              volume_backend_name: NFS_VOLUME1
              volume_driver: cinder.volume.drivers.nfs.NfsDriver
              nfs_mount_options: "rsize=65535,wsize=65535,timeo=1200,actimeo=120"
              nfs_shares_config: /etc/cinder/nfs_shares
              shares:
                - ip: "172.29.244.15"
                  share: "/vol/cinder"
      infra3:
        ip: 172.29.236.13
        container_vars:
          cinder_backends:
            limit_container_types: cinder_volume
            nfs_volume:
              volume_backend_name: NFS_VOLUME1
              volume_driver: cinder.volume.drivers.nfs.NfsDriver
              nfs_mount_options: "rsize=65535,wsize=65535,timeo=1200,actimeo=120"
              nfs_shares_config: /etc/cinder/nfs_shares
              shares:
                - ip: "172.29.244.15"
                  share: "/vol/cinder"

Hosts in the network_hosts group map physnet1 to the ens1f0 interface, while hosts in the compute_hosts group map physnet1 to the ens2f0 interface. Additional provider mappings can be established using the same format in a separate definition.
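The per-group resolution described here can be sketched in Python. This is a simplified model for illustration only, not OpenStack-Ansible's actual implementation: the `interface_mappings` helper and the flattened dictionaries are hypothetical, and only the physnet1 definitions and the two groups from this example are included.

```python
# Simplified, hypothetical model of the physnet1 definitions from this example.
# Only the keys needed for the illustration are kept.
PROVIDER_NETWORKS = [
    {"net_name": "physnet1", "type": "flat",
     "host_bind_override": "ens1f0", "group_binds": ["network_hosts"]},
    {"net_name": "physnet1", "type": "vlan",
     "host_bind_override": "ens1f0", "group_binds": ["network_hosts"]},
    {"net_name": "physnet1", "type": "flat",
     "host_bind_override": "ens2f0", "group_binds": ["compute_hosts"]},
    {"net_name": "physnet1", "type": "vlan",
     "host_bind_override": "ens2f0", "group_binds": ["compute_hosts"]},
]

# Group membership taken from the example environment layout.
GROUPS = {
    "network_hosts": ["infra1", "infra2", "infra3"],
    "compute_hosts": ["compute1", "compute2"],
}


def interface_mappings(host):
    """Return {physnet: interface} for definitions bound to the host's groups."""
    host_groups = {name for name, members in GROUPS.items() if host in members}
    mappings = {}
    for net in PROVIDER_NETWORKS:
        # A definition applies only if the host is in one of its group_binds.
        if host_groups.intersection(net["group_binds"]):
            mappings[net["net_name"]] = net["host_bind_override"]
    return mappings


for host in ("infra1", "compute1"):
    print(host, interface_mappings(host))
```

In this model, infra1 resolves physnet1 to ens1f0 and compute1 resolves it to ens2f0, which matches the behaviour the configuration is meant to produce.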

An additional provider interface definition named physnet2, using different interfaces between hosts, may resemble the following:

    - network:
        container_bridge: "br-vlan2"
        container_type: "veth"
        container_interface: "eth13"
        host_bind_override: "ens1f1"
        type: "vlan"
        range: "2000:2999"
        net_name: "physnet2"
        group_binds:
          - network_hosts
    - network:
        container_bridge: "br-vlan2"
        container_type: "veth"
        host_bind_override: "ens2f1"
        type: "vlan"
        range: "2000:2999"
        net_name: "physnet2"
        group_binds:
          - compute_hosts

Note

The container_interface parameter is only necessary when Neutron agents run in containers, and can be excluded in many cases. The container_bridge and container_type parameters also relate to infrastructure containers, but should remain defined for legacy purposes.
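As a rough illustration of why container_interface can be left out for agents running on bare metal, the selection rule stated in the configuration comments (container_interface when the agent's on_metal is False, host_bind_override when on_metal is True) can be sketched as follows. The `bridge_interface` function is a hypothetical helper written for this example and is not part of OpenStack-Ansible; the real logic may differ in detail.

```python
def bridge_interface(network, on_metal):
    """Pick the interface to connect to the bridge for one network definition.

    Assumption: mirrors the rule in the configuration comments only.
    Returns None when no suitable interface is defined.
    """
    if on_metal and "host_bind_override" in network:
        return network["host_bind_override"]
    return network.get("container_interface")


# The two physnet2 definitions from the example, reduced to the relevant keys.
physnet2_network_hosts = {
    "container_interface": "eth13",
    "host_bind_override": "ens1f1",
    "net_name": "physnet2",
}
physnet2_compute_hosts = {
    "host_bind_override": "ens2f1",
    "net_name": "physnet2",
}

print(bridge_interface(physnet2_network_hosts, on_metal=True))
print(bridge_interface(physnet2_network_hosts, on_metal=False))
print(bridge_interface(physnet2_compute_hosts, on_metal=True))
```

Under this model the compute_hosts definition needs no container_interface, because its agents are assumed to run on the host itself and only host_bind_override is consulted.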

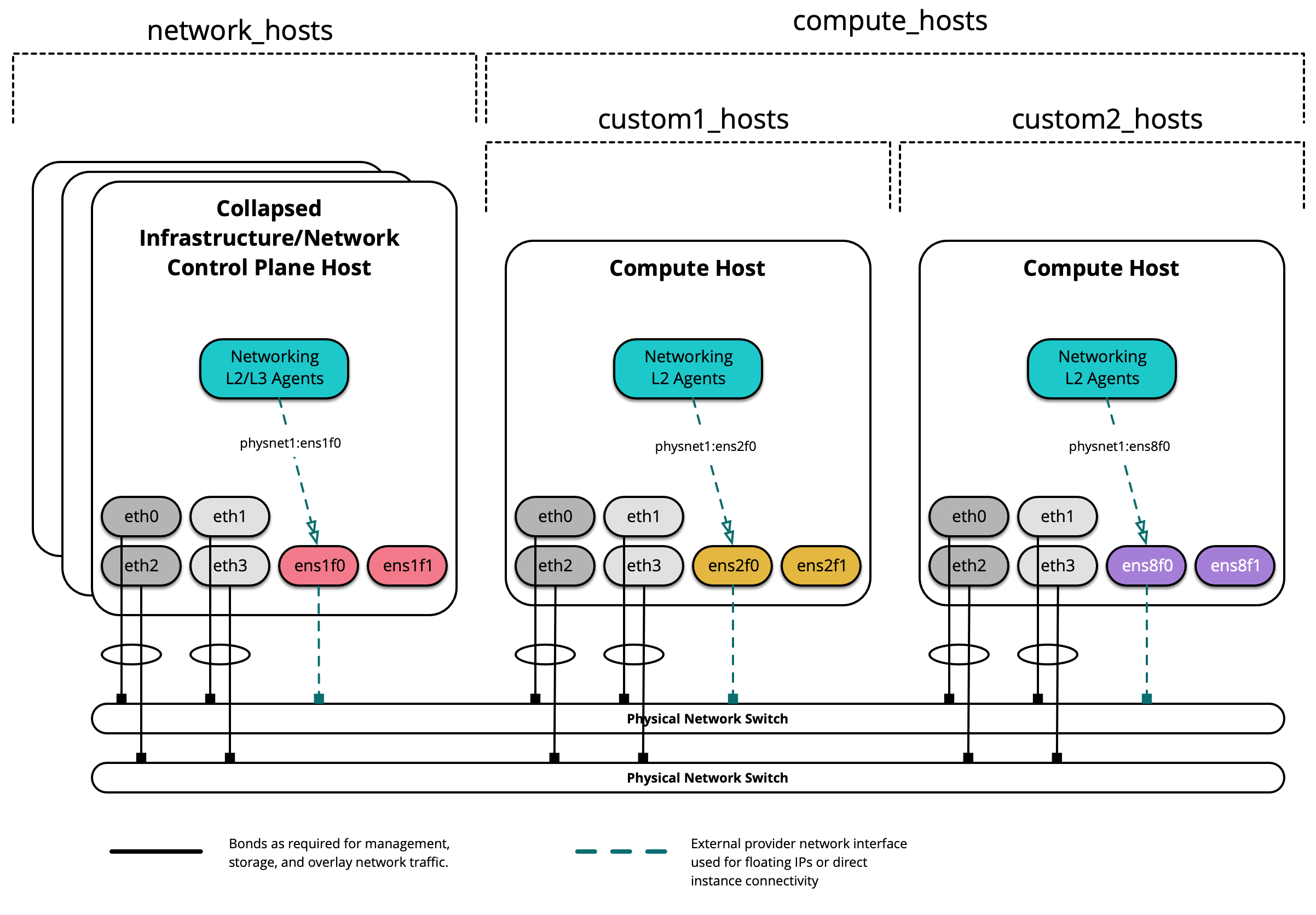

Custom groups

Custom inventory groups can be created to help segment hosts beyond the built-in groups provided by OpenStack-Ansible.

Before creating custom groups, please review the following:

The following diagram shows how custom groups can be used to further segment hosts:

When creating a custom group, first create a skeleton in /etc/openstack_deploy/env.d/. The following is an example of an inventory skeleton for a group named custom2_hosts that will consist of bare metal hosts, created at /etc/openstack_deploy/env.d/custom2_hosts.yml.

    ---
    physical_skel:
      custom2_containers:
        belongs_to:
          - all_containers
      custom2_hosts:
        belongs_to:
          - hosts

Define the group and its members in a corresponding file in /etc/openstack_deploy/conf.d/. The following is an example of a group named custom2_hosts, defined in /etc/openstack_deploy/conf.d/custom2_hosts.yml, consisting of a single member, compute2:

    ---
    # custom example
    custom2_hosts:
      compute2:
        ip: 172.29.236.17

The custom group can then be specified when creating a provider network, as shown here:

    - network:
        container_bridge: "br-vlan"
        container_type: "veth"
        host_bind_override: "ens8f1"
        type: "vlan"
        range: "101:200,301:400"
        net_name: "physnet1"
        group_binds:
          - custom2_hosts
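When custom groups are layered on top of the built-in ones, a host can belong to more than one group that binds the same provider network. The following Python sketch lists which definitions would match each host so that such overlaps can be spotted before deployment. It is a hypothetical check written for this example: the `matching_overrides` helper is not part of OpenStack-Ansible, and the sketch makes no claim about which definition OpenStack-Ansible would prefer when several match.

```python
# Hypothetical, reduced view of two physnet1 VLAN definitions:
# the compute_hosts one from the main example and the custom2_hosts one.
NETWORKS = [
    {"net_name": "physnet1", "type": "vlan",
     "host_bind_override": "ens2f0", "group_binds": ["compute_hosts"]},
    {"net_name": "physnet1", "type": "vlan",
     "host_bind_override": "ens8f1", "group_binds": ["custom2_hosts"]},
]

# Group membership from the example: compute2 is in both groups.
GROUPS = {
    "compute_hosts": ["compute1", "compute2"],
    "custom2_hosts": ["compute2"],
}


def matching_overrides(host, net_name):
    """List host_bind_override values of every definition that matches host."""
    host_groups = {name for name, members in GROUPS.items() if host in members}
    return [
        net["host_bind_override"]
        for net in NETWORKS
        if net["net_name"] == net_name
        and host_groups.intersection(net["group_binds"])
    ]


for host in ("compute1", "compute2"):
    overrides = matching_overrides(host, "physnet1")
    status = "overlap, review group_binds" if len(overrides) > 1 else "ok"
    print(host, overrides, status)
```

In this reduced model compute2 matches both definitions, which suggests that group_binds lists should be reviewed so that each host receives only the intended interface mapping for a given provider network.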